Ultra Messaging (Version 6.17)


Introduction <-

This document explains design concepts and product implementation for the Ultra Messaging Dynamic Routing Option (DRO).

For policies and procedures related to Ultra Messaging Technical Support, see UM Support.

(C) Copyright 2004,2025 Informatica Inc. All Rights Reserved.

This software and documentation are provided only under a separate license agreement containing restrictions on use and disclosure. No part of this document may be reproduced or transmitted in any form, by any means (electronic, photocopying, recording or otherwise) without prior consent of Informatica LLC.

A current list of Informatica trademarks is available on the web at https://www.informatica.com/trademarks.html.

Portions of this software and/or documentation are subject to copyright held by third parties. Required third party notices are included with the product.

This software is protected by patents as detailed at https://www.informatica.com/legal/patents.html.

The information in this documentation is subject to change without notice. If you find any problems in this documentation, please report them to us in writing at Informatica LLC 2100 Seaport Blvd. Redwood City, CA 94063.

Informatica products are warranted according to the terms and conditions of the agreements under which they are provided.

INFORMATICA LLC PROVIDES THE INFORMATION IN THIS DOCUMENT "AS IS" WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING WITHOUT ANY WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND ANY WARRANTY OR CONDITION OF NON-INFRINGEMENT.

The Ultra Messaging Dynamic Routing Option (DRO) consists of a daemon named "tnwgd" that bridges disjoint Topic Resolution Domains (TRDs) by effectively forwarding control and user traffic between them. Thus, the DRO facilitates WAN routing where multicast routing capability is absent, possibly due to technical obstacles or enterprise policies.

FYI: for historical reasons, the DRO has gone by several names:

- Gateway

- tnwg = "Twenty Nine West Gateway"

- UM Router

- Dynamic Router

- DRO = Dynamic Routing Option

In the UM documentation, the term "DRO" is generally used for brevity, but sometimes various abbreviations that include "tnwg" are used.

DRO Features <-

The DRO includes the following features:

- Full bidirectional forwarding

- Multi-hop forwarding

- Mesh, loop, or alternate path DRO configurations

- Automatic rerouting around faults

- Support for wildcard receivers

- Support for Request/Response messages

- Traffic filtering on multiple criteria

- DRO resilience

- UMP persistence support

- UM transport monitoring statistics

- Web Monitoring

- MIM and UIM forwarding

The following features are not fully supported in this release of the DRO:

- Queuing, both ULB and Brokered (including brokered JMS)

- Multitransport Threads (MTT)

If you desire any of these features or any configuration or topology not presented in this document, please contact UM Support.

- Note

- The DRO is not directly supported on the OpenVMS platform. UM applications running on the OpenVMS platform, however, can use a DRO running on a different platform, such as Microsoft Windows or Linux.

DRO Architecture <-

DRO Portals <-

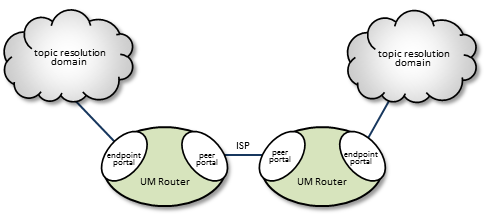

The DRO uses interfaces, called portals, through which to pass data. A DRO consists of two or more bidirectional portals that may be one of two types:

- An endpoint portal, which communicates directly to a UM topic resolution domain (TRD; see Topic Resolution Domains).

- A peer portal, which communicates via TCP with another peer portal (of another DRO), allowing tunneling between DROs. Two peer portals connected to each other are referred to as companion peers, and by default, utilize TCP connections for data and control traffic. Compression and encryption can be applied to TCP-only peer links. Optionally, latency can be reduced by adding UDP to the peer link; see UDP Peer Link.

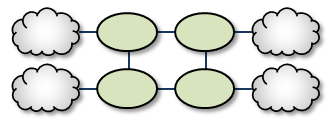

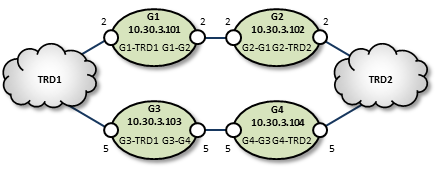

The figure below shows a simple DRO use case, where two DROs bridge an ISP to connect two TRDs using a TCP link.

You configure portals in the DRO's XML configuration file, specifying the portal's name, cost, UM Configuration, Access Control Lists and other attributes. See DRO Configuration Reference.

UDP Peer Link <-

By default, a DRO peer link uses a single TCP connection to communicate between two DROs. But TCP can introduce latency outliers and limit throughput, especially when used over a high-bandwidth, high-latency WAN link that experiences occasional packet loss. Latency and throughput can be improved by enabling the UDP peer link option.

When UDP is enabled for a peer link, the TCP peer link is still used for DRO command and control messages. Everything else, including user data, is exchanged using UDP.

To enable UDP on a peer link, use the Router Element "<udp>". At a minimum, you must configure the port number.

When configured, both the TCP and the UDP links must be operational. If either link fails to pass data, the DRO will disconnect and reconnect until both links are successful.

The UDP peer link uses the same reliable unicast protocol as the Transport LBT-RU protocol, and shares many of the same configuration options as the transport. However, unlike LBT-RU, the UDP peer link does not need to have a datagram maximum size configured. It is hard-coded to a large value (above 65,000 bytes), chosen to be sufficient to handle all valid UM fragment sizes.

Starting with UM version 6.17, the UDP Peer link supports compression and encryption. See Router Element "<compression>" and Router Element "<tls>".

Topic Resolution Domains <-

Since topic resolution uses UDP, sources and receivers must have UDP connectivity to each other. When they do, we consider them to be in the same topic resolution domain (TRD). More specifically, UM contexts must satisfy the following two requirements to belong to the same topic resolution domain.

- The contexts must use the same topic resolution UM configuration (i.e., resolver_* options are the same).

- Contexts can communicate using the protocols required for both message transport and topic resolution traffic.

For example, two contexts on separate machines in the same LAN are not in the same topic resolution domain if they use different resolver addresses. See Multicast Resolver Network Options. A topic resolution domain can span a WAN if the UM contexts on each side of a firewall use the same UM configuration and the firewall allows UDP traffic (multicast or unicast) to pass.

Each endpoint portal must identify its associated topic resolution domain with a domain-id in the DRO's XML configuration file. All portals in the same TRD must have the same domain-id, and different TRDs networked together via DROs must have domain-ids unique to each other.
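The two domain-id rules can be checked mechanically. The following is a minimal sketch, not a supported tool. The XML fragments, portal names, and the `PORTAL_TRD` planning table are hypothetical, and the element names (`tnw-gateway`, `portals`, `endpoint`, `name`, `domain-id`) are assumed here and should be confirmed against the DRO Configuration Reference.

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration fragments for two DROs. Element names are
# assumptions; confirm them against the DRO Configuration Reference.
DRO_A = """
<tnw-gateway version="1.0">
  <portals>
    <endpoint><name>A-E1</name><domain-id>1</domain-id></endpoint>
    <endpoint><name>A-E2</name><domain-id>2</domain-id></endpoint>
  </portals>
</tnw-gateway>
"""
DRO_B = """
<tnw-gateway version="1.0">
  <portals>
    <endpoint><name>B-E2</name><domain-id>2</domain-id></endpoint>
    <endpoint><name>B-E3</name><domain-id>3</domain-id></endpoint>
  </portals>
</tnw-gateway>
"""

# Hypothetical planning table: the TRD each endpoint portal attaches to.
PORTAL_TRD = {"A-E1": "TRD1", "A-E2": "TRD2", "B-E2": "TRD2", "B-E3": "TRD3"}


def endpoint_domain_ids(xml_text):
    """Map each endpoint portal name to its configured domain-id."""
    root = ET.fromstring(xml_text)
    return {ep.findtext("name"): int(ep.findtext("domain-id"))
            for ep in root.iter("endpoint")}


def check_domain_ids(configs, portal_trd):
    """Return a list of rule violations (empty when the plan is consistent)."""
    trd_ids = {}  # TRD -> set of domain-ids configured for it
    for xml_text in configs:
        for portal, domain_id in endpoint_domain_ids(xml_text).items():
            trd_ids.setdefault(portal_trd[portal], set()).add(domain_id)

    problems = []
    owners = {}  # domain-id -> TRDs that use it
    for trd, ids in sorted(trd_ids.items()):
        if len(ids) > 1:  # rule 1: one domain-id per TRD
            problems.append(f"{trd} has conflicting domain-ids {sorted(ids)}")
        for domain_id in ids:
            owners.setdefault(domain_id, []).append(trd)
    for domain_id, trds in sorted(owners.items()):
        if len(trds) > 1:  # rule 2: domain-ids are unique across TRDs
            problems.append(f"domain-id {domain_id} is shared by {trds}")
    return problems


print(check_domain_ids([DRO_A, DRO_B], PORTAL_TRD))
```

Running the check against every DRO configuration in a network before deployment catches the case where two DROs disagree about a shared TRD's domain-id.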

Proxy Sources and Proxy Receivers <-

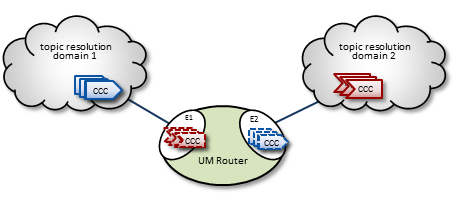

To resolve a topic across a DRO (described in Basic DRO Operation), the DRO creates, within portals, proxy sources and proxy receivers (shown in the figure below by their dashed lines). These proxies behave like their UM counterparts; they resolve topics on the TRDs like normal sources and receivers, and the DRO internally passes data from one portal to the other. However, unlike regular sources, proxy sources do not have retransmission retention buffers normally used for Late Join or OTR.

Portals exist for as long as the DRO is running. Proxy sources and receivers, by contrast, are created during topic resolution and deleted when the topic is retired.

- Note

- The proxy sources created by the DRO are unrelated to proxy sources created by the UMP persistent store.

DRO and Transport Sessions <-

When the DRO creates proxy receivers to get messages to forward, be aware that the transport sessions carrying those messages are not extended to the destination TRD. Instead, the proxy receiver simply takes the messages from the originating transport sessions and transfers them to the destination DRO's proxy sources. Those proxy sources create new transport sessions for those outgoing messages.

The proxy sources' outgoing transport sessions are unrelated to the originating sources' transport sessions. They can even use different transport types, performing a protocol conversion. In fact, a single transport session can contain multiple sources from different originating publishing applications for the same topic. Alternatively, multiple sources from the same originating publishing application which are mapped to the same originating transport session can be split into multiple transport sessions by the proxy sources in a remote TRD.

One consequence of the independence of incoming and outgoing transport sessions is that TCP flow control does not transit the DRO. A slow receiver in a remote TRD cannot "push back" on a fast source. In cases where a TCP transport session is slowed down due to one or more slow receivers, an intermediate DRO will eventually have to drop messages.
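The effect of missing end-to-end flow control can be illustrated with a toy model. This is a sketch under simplifying assumptions: the tick-based timing, the `buffer_limit` parameter, and the function name are hypothetical and do not correspond to DRO configuration options or internals.

```python
from collections import deque


def forward_with_bounded_buffer(n_messages, buffer_limit, drain_every):
    """Simulate a fast source feeding a slow receiver through a bounded buffer.

    The source produces one message per tick. The receiver side accepts one
    message every `drain_every` ticks. When the buffer is full, the newest
    message is dropped because the source cannot be slowed down.
    """
    buffer = deque()
    delivered, dropped = [], []
    for tick in range(n_messages):
        if len(buffer) < buffer_limit:
            buffer.append(tick)
        else:
            dropped.append(tick)
        if tick % drain_every == drain_every - 1 and buffer:
            delivered.append(buffer.popleft())
    delivered.extend(buffer)  # the receiver catches up after the source stops
    return delivered, dropped


delivered, dropped = forward_with_bounded_buffer(10, buffer_limit=3, drain_every=2)
print("delivered:", delivered)
print("dropped:  ", dropped)
```

With a receiver that keeps pace (`drain_every=1`) nothing is dropped; once the receiver is slower than the source, drops begin as soon as the buffer fills, regardless of how large the buffer is.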

- Warning

- A single source's "source string" will be different in different TRDs connected by DROs. See Source Strings in a Routed Network for details.

Routing <-

In multiple-DRO environments where more than one DRO can provide possible messaging pathways, the DROs are able to cooperatively determine and establish optimal routes. Also, the DRO network is able to detect link or other DRO outages and automatically reroute traffic as needed. See Routing Topologies for more information.

DRO Concepts <-

Basic DRO Operation <-

The DRO's routing algorithm is said to be "interest-based". That is, subscribers express interest in topic names and/or wildcard topic patterns. The DRO network maintains lists of topics and patterns for each TRD, and routes messages accordingly.

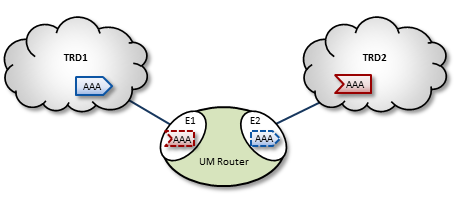

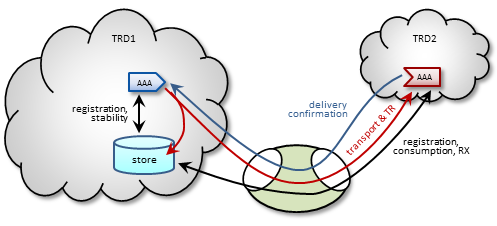

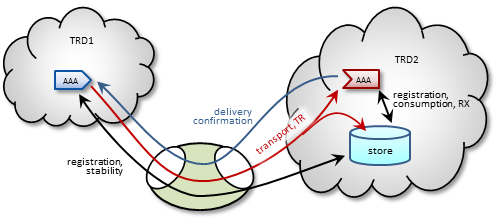

The diagram below shows a DRO bridging topic resolution domains TRD1 and TRD2, for topic AAA, in a direct link configuration. Endpoint E1 contains a proxy receiver for topic AAA and endpoint E2 has a proxy source for topic AAA.

To establish topic resolution in an already-running DRO, the following sequence typically occurs in an example like the above figure.

- A receiver in TRD2 issues a TQR (Topic Query Record) for topic AAA.

- Portal E2 receives the TQR and passes information about topic AAA to all other portals in the DRO. (In this case, E1 is the only other portal.)

- E1 immediately responds with three actions: a) create a proxy receiver for topic AAA, b) the new proxy receiver sends a TQR for AAA into TRD1, and c) E1 issues a Topic Interest message into TRD1 for the benefit of any other DROs that may be connected to that domain.

- A source for topic AAA in TRD1 sees the TQR and issues a TIR (Topic Information Record).

- E1's AAA proxy receiver sees the TIR and requests that E2 (and any other interested portals in the DRO, if there were any) create a proxy source for AAA.

- E2 creates proxy source AAA, which then issues a TIR to TRD2. The receiver in TRD2 joins the transport, thus completing topic resolution.
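The causal order of these events can be traced with a small model. This is an illustrative sketch only: the function name and the dictionary layout are hypothetical, and the model covers just the two-portal direct link case described here.

```python
def resolve_direct_link(topic, trd1_source_topics):
    """Trace topic resolution across a DRO with endpoint portals E1 and E2.

    Returns the ordered list of events and the proxies each portal holds.
    """
    events = [
        f"TRD2 receiver issues TQR for {topic}",
        f"E2 receives the TQR and informs E1 of interest in {topic}",
        f"E1 creates a proxy receiver for {topic}",
        f"E1 proxy receiver sends TQR for {topic} into TRD1",
        f"E1 issues Topic Interest for {topic} into TRD1",
    ]
    state = {"E1": {"proxy_receivers": {topic}},
             "E2": {"proxy_sources": set()}}

    # A proxy source appears only if a real source answers the query.
    if topic in trd1_source_topics:
        events += [
            f"TRD1 source issues TIR for {topic}",
            f"E1 proxy receiver sees the TIR and asks E2 for a proxy source for {topic}",
            f"E2 creates a proxy source for {topic} and issues TIR into TRD2",
            f"TRD2 receiver joins the transport for {topic}",
        ]
        state["E2"]["proxy_sources"].add(topic)
    return events, state


events, state = resolve_direct_link("AAA", {"AAA"})
for number, event in enumerate(events, start=1):
    print(number, event)
```

Note that the proxy receiver on E1 is created from receiver interest alone, while the proxy source on E2 exists only after a source in TRD1 has advertised the topic.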

Interest and Topic Resolution <-

As mentioned in Basic DRO Operation, the DRO's routing algorithm is "interest-based". The DRO uses UM's Topic Resolution (TR) protocol to discover and maintain the interest tables.

For TCP-based TR, the SRS informs DROs of receiver topics and wildcard receiver patterns.

For UDP-based TR, the application's TR queries are used to inform DROs of its receiver topics and wildcard receiver patterns.

- Attention

- If using UDP-based TR, do not disable querying, as that would prevent the DRO from discovering topic and pattern interest.

Interest and Use Queries <-

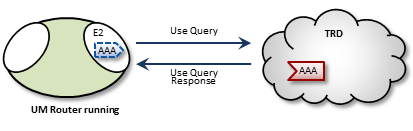

When a DRO starts, its endpoint portals issue a brief series of Topic Resolution Request messages to their respective topic resolution domains. This provokes quiescent receivers (and wildcard receivers) into sending Use Query Responses, indicating interest in various topics. Each portal then records this interest.

After a DRO has been running, endpoint portals issue periodic Topic Use Queries and Pattern Use Queries (collectively referred to as simply Use Queries). Use Query Responses from UM contexts confirm that the receivers for these topics indeed still exist, thus maintaining these topics on the interest list. Autonomous TQRs also refresh interest and have the effect of suppressing the generation of Use Queries.

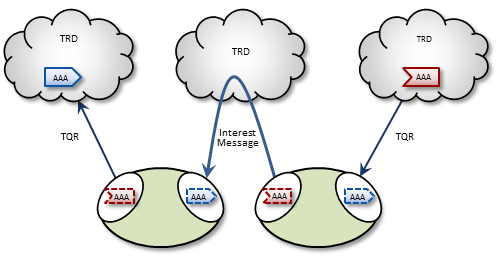

In the case of multi-hop DRO configurations, DROs cannot detect interest for remote contexts via Use Queries or TQRs. They do this instead via Interest Messages. An endpoint portal generates periodic interest messages, which are picked up by adjacent DROs (i.e., the next hop over), at which time interest is refreshed.

You can adjust intervals, limits, and durations for these topic resolution and interest mechanisms via DRO configuration options (see DRO Configuration Reference).
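As a rough mental model of how interest is kept alive, consider the sketch below. The class, its method names, and the two timing parameters are hypothetical stand-ins; they are not DRO configuration options, and the real portal logic is more involved.

```python
class InterestTable:
    """Tracks topic interest for one endpoint portal (illustrative model)."""

    def __init__(self, use_query_interval, interest_timeout):
        self.use_query_interval = use_query_interval
        self.interest_timeout = interest_timeout
        # topic -> time of the last TQR, Use Query Response, or Interest Message
        self.last_refresh = {}

    def refresh(self, topic, now):
        """Record any evidence that a receiver for the topic still exists."""
        self.last_refresh[topic] = now

    def topics_to_query(self, now):
        """Topics quiet long enough that a Use Query is due.

        A recent autonomous TQR keeps a topic off this list, which is how
        TQRs suppress Use Queries.
        """
        return sorted(topic for topic, seen in self.last_refresh.items()
                      if now - seen >= self.use_query_interval)

    def expire(self, now):
        """Remove and return topics whose interest was not confirmed in time."""
        stale = sorted(topic for topic, seen in self.last_refresh.items()
                       if now - seen > self.interest_timeout)
        for topic in stale:
            del self.last_refresh[topic]
        return stale


table = InterestTable(use_query_interval=5, interest_timeout=20)
table.refresh("AAA", now=0)
table.refresh("BBB", now=0)
table.refresh("AAA", now=4)        # autonomous TQR for AAA
print(table.topics_to_query(now=6))  # only BBB needs a Use Query
table.refresh("AAA", now=10)
print(table.expire(now=25))          # BBB was never confirmed
```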

DRO Keepalive <-

To maintain a reliable connection, peer portals exchange DRO Keepalive signals. Keepalive intervals and connection timeouts are configurable on a per-portal basis. You can also set the DRO to send keepalives only when traffic is idle, which is the default condition. When both traffic and keepalives go silent at a portal ingress, the portal considers the connection lost and disconnects the TCP link. After the disconnect, the portal tries to reconnect. See <gateway-keepalive>.
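The idle-only keepalive rule and the disconnect rule can be expressed in a few lines. This is a minimal sketch, assuming time is measured in seconds; the class, its methods, and the example values are hypothetical and are not the DRO's implementation or its defaults.

```python
class PeerLinkMonitor:
    """Models keepalive decisions for one peer portal (illustrative only)."""

    def __init__(self, keepalive_interval, timeout):
        self.keepalive_interval = keepalive_interval
        self.timeout = timeout
        self.last_sent = 0.0      # last time anything was sent to the companion
        self.last_received = 0.0  # last time data or a keepalive arrived

    def on_send(self, now):
        self.last_sent = now

    def on_receive(self, now):
        self.last_received = now

    def keepalive_due(self, now):
        """Idle-only mode: send a keepalive only if nothing else was sent."""
        return now - self.last_sent >= self.keepalive_interval

    def connection_lost(self, now):
        """Both traffic and keepalives have been silent for too long."""
        return now - self.last_received > self.timeout


monitor = PeerLinkMonitor(keepalive_interval=5, timeout=15)
monitor.on_send(now=3)
print(monitor.keepalive_due(now=6))     # False: user traffic was sent recently
print(monitor.keepalive_due(now=8))     # True: the link has been idle
monitor.on_receive(now=10)
print(monitor.connection_lost(now=26))  # True: disconnect, then reconnect
```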

Final Advertisements <-

DRO proxy sources on endpoint portals, when deleted, send out a series of final advertisements. A final advertisement tells any receivers, including proxy receivers on other DROs, that the particular source has gone away. This triggers EOS and clean-up activities on the receiver relative to that specific source, which causes the receiver to begin querying according to its topic resolution configuration for the sustaining phase of querying.

In short, final advertisements let receivers detect that a source has gone away sooner than they would by waiting for a transport timeout. This causes a faster transition to an alternative proxy source on a different DRO if there is a change in the routing path.

More About Proxy Sources and Receivers <-

The domain-id is used by Interest Messages and other internal and DRO-to-DRO traffic to ensure forwarding of all messages (payload and topic resolution) to the correct recipients. This also has the effect of not creating proxy sources/receivers where they are not needed. Thus, DROs create proxy sources and receivers based solely on receiver interest.

If more than one source sends on a given topic, the receiving portal's single proxy receiver for that topic receives all messages sent on that topic. The sending portal, however, creates a proxy source for every source sending on the topic. The DRO maintains a table of proxy sources, each keyed by an Originating Transport ID (OTID), enabling the proxy receiver to forward each message to the correct proxy source. An OTID uniquely identifies a source's transport session, and is included in topic advertisements.
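The OTID-keyed table can be pictured as a dictionary. The sketch below is illustrative only: the class name and the OTID strings are hypothetical, and a plain list stands in for each proxy source.

```python
class ProxySourceTable:
    """One topic's proxy sources on a sending portal, keyed by OTID."""

    def __init__(self, topic):
        self.topic = topic
        self.by_otid = {}  # OTID -> messages forwarded via that proxy source

    def on_advertisement(self, otid):
        """Create a proxy source the first time an OTID is advertised."""
        self.by_otid.setdefault(otid, [])

    def forward(self, otid, message):
        """Hand a message from the proxy receiver to the matching proxy source."""
        if otid not in self.by_otid:
            raise KeyError(f"no proxy source for OTID {otid!r}")
        self.by_otid[otid].append(message)


table = ProxySourceTable("AAA")
table.on_advertisement("otid-app1")  # first publisher of topic AAA
table.on_advertisement("otid-app2")  # second publisher of topic AAA
table.forward("otid-app1", "price=10")
table.forward("otid-app2", "price=11")
table.forward("otid-app1", "price=12")
print(table.by_otid)
```

Keeping one proxy source per OTID preserves each original source's message stream separately in the destination TRD, even though a single proxy receiver collected all of them.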

Protocol Conversion <-

When an application creates a source, it is configured to use one of the UM transport types. When a DRO is deployed, the proxy sources are also configured to use one of the UM transport types. Although users often use the same transport type for sources and proxy sources, this is not necessary. When different transport types are configured for source and proxy source, the DRO is performing a protocol conversion.

When this is done, it is very important to configure the transports to use the same maximum datagram size. If you don't, the DRO can drop messages which cannot be recovered through normal means. For example, a source in Topic Resolution Domain 1 might be configured for TCP, which has a default maximum datagram size of 65536. If a DRO's remote portal is configured to create LBT-RU proxy sources, that has a default maximum datagram size of 8192. If the source sends a user message of 10K, the TCP source will send it as a single fragment. The DRO will receive it and will attempt to forward it on an LBT-RU proxy source, but the 10K fragment is too large for LBT-RU's maximum datagram size, so the message will be dropped.

See Message Fragmentation and Reassembly.

The solution is to override the default maximum datagram sizes to be the same. Informatica generally does not recommend configuring UDP-based transports for datagram sizes above 8K, so it is advisable to set the maximum datagram sizes of all transport types to 8192, like this:

    context transport_tcp_datagram_max_size 8192
    context transport_lbtrm_datagram_max_size 8192
    context transport_lbtru_datagram_max_size 8192
    context transport_lbtipc_datagram_max_size 8192
    source transport_lbtsmx_datagram_max_size 8192

Note that users of a kernel bypass network driver (e.g. Solarflare's Onload) frequently want to avoid all IP fragmentation, and therefore want to set their datagram max sizes to an MTU. See Datagram Max Size and Network MTU and Dynamic Fragmentation Reduction.

Configuration options: transport_tcp_datagram_max_size (context), transport_lbtrm_datagram_max_size (context), transport_lbtru_datagram_max_size (context), transport_lbtipc_datagram_max_size (context), and transport_lbtsmx_datagram_max_size (source).

Final note: the resolver_datagram_max_size (context) option also needs to be made the same in all instances of UM, including DROs.
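The arithmetic behind the protocol conversion example can be worked through in code. This sketch ignores protocol header overhead, which is a simplifying assumption, and the function names are hypothetical; only the 65536 and 8192 defaults and the 10K message size come from the text above.

```python
def fragment(message_size, datagram_max_size):
    """Split a message into fragment sizes (header overhead ignored)."""
    sizes = []
    remaining = message_size
    while remaining > 0:
        piece = min(remaining, datagram_max_size)
        sizes.append(piece)
        remaining -= piece
    return sizes


def forward(fragments, proxy_datagram_max_size):
    """Return (forwarded, dropped) fragment sizes at a proxy source."""
    forwarded = [f for f in fragments if f <= proxy_datagram_max_size]
    dropped = [f for f in fragments if f > proxy_datagram_max_size]
    return forwarded, dropped


MESSAGE = 10 * 1024  # the 10K user message from the example

# TCP source at its default of 65536, LBT-RU proxy source at its default of 8192.
print(forward(fragment(MESSAGE, 65536), 8192))

# Both sides set to 8192: the source fragments the message before sending.
print(forward(fragment(MESSAGE, 8192), 8192))
```

In the mismatched case the single 10240-byte fragment exceeds the proxy source's limit and is dropped. In the matched case the originating source produces fragments of 8192 and 2048 bytes, both of which the proxy source can carry.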

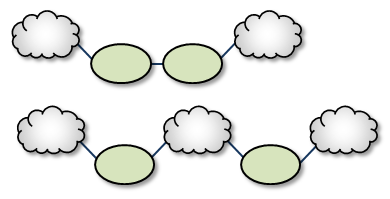

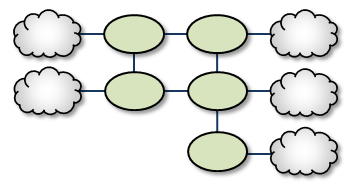

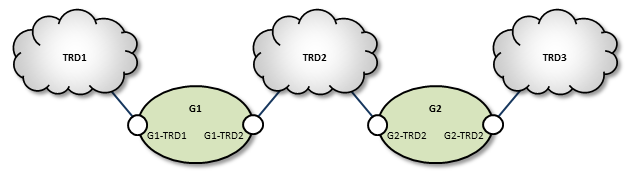

Multi-Hop Forwarding <-

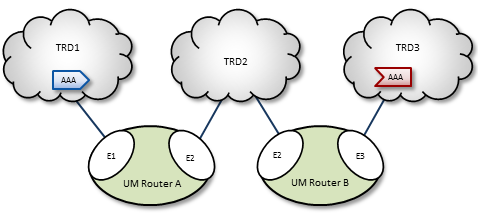

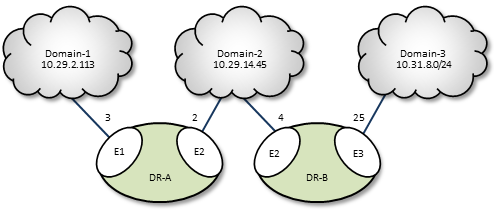

UM can resolve topics across a span of multiple DROs. Consider a simple example DRO deployment, as shown in the following figure.

In this diagram, DRO A has two endpoint portals connected to topic resolution domains TRD1 and TRD2. DRO B also has two endpoint portals, which bridge TRD2 and TRD3. Endpoint portal names reflect the topic resolution domain to which they connect. For example, DRO A endpoint E2 interfaces TRD2.

TRD1 has a source for topic AAA, and TRD3, an AAA receiver. The following sequence of events enables the forwarding of topic messages from source AAA to receiver AAA.

- Receiver AAA queries (issues a TQR).

- DRO B, endpoint E3 (B-E3) receives the TQR and passes information about topic AAA to all other portals in the DRO. In this case, B-E2 is the only other portal.

- In response, B-E2 creates a proxy receiver for AAA and sends a Topic Interest message for AAA into TRD2. The proxy receiver also issues a TQR, which in this case is ignored.

- DRO A, endpoint E2 (A-E2) receives this Topic Interest message and passes information about topic AAA to all other portals in the DRO. In this case, A-E1 is the only other portal.

- In response, A-E1 creates a proxy receiver for AAA and sends a Topic Interest message and TQR for AAA into TRD1.

- Source AAA responds to the TQR by sending a TIR for topic AAA. In this case, the Topic Interest message is ignored.

- The AAA proxy receiver created by A-E1 receives this TIR and requests that all DRO A portals with an interest in topic AAA create a proxy source for AAA.

- In response, A-E2 creates a proxy source, which sends a TIR for topic AAA via TRD2.

- The AAA proxy receiver at B-E2 receives this TIR and requests that all DRO B portals with an interest in topic AAA create a proxy source for AAA.

- In response, B-E3 creates a proxy source, which sends a TIR for topic AAA via TRD3. The receiver in TRD3 joins the transport.

- Topic AAA has now been resolved across both DROs, which forward all topic messages sent by source AAA to receiver AAA.
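The same pattern generalizes to any number of DROs connected in series. The sketch below is a simplified model, assuming a straight chain of TRDs with the source upstream of the receiver; the function name and the DRO lettering scheme are hypothetical.

```python
def resolve_across_chain(trds, source_trd, receiver_trd):
    """List the proxies created to resolve a topic across a chain of DROs.

    `trds` is ordered along the chain; DRO number i bridges trds[i] and
    trds[i + 1] and is lettered A, B, C, ... Each result is a tuple of
    (DRO, TRD its portal faces, role).
    """
    src = trds.index(source_trd)
    rcv = trds.index(receiver_trd)
    if src >= rcv:
        raise ValueError("this sketch expects the source upstream of the receiver")
    dro_names = [chr(ord("A") + i) for i in range(len(trds) - 1)]

    steps = []
    # Interest travels from the receiver's TRD back toward the source.
    for i in range(rcv - 1, src - 1, -1):
        steps.append((dro_names[i], trds[i], "proxy receiver"))
    # Advertisements (TIRs) then travel from the source toward the receiver.
    for i in range(src, rcv):
        steps.append((dro_names[i], trds[i + 1], "proxy source"))
    return steps


for step in resolve_across_chain(["TRD1", "TRD2", "TRD3"], "TRD1", "TRD3"):
    print(step)
```

For the three-TRD example, the output lists the proxy receivers at B-E2 and A-E1 followed by the proxy sources at A-E2 and B-E3, matching the sequence above.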

Routing Wildcard Receivers <-

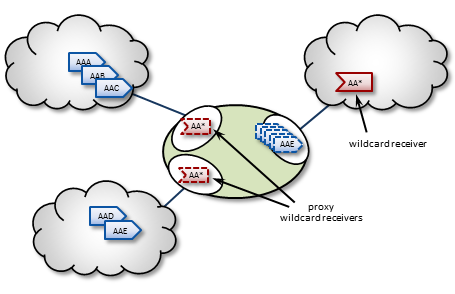

The DRO supports topic resolution for wildcard receivers in a manner very similar to non-wildcard receivers. Wildcard receivers in a TRD issuing a WC-TQR cause corresponding proxy wildcard receivers to be created in portals, as shown in the following figure. The DRO creates a single proxy source for pattern match.

Forwarding Costs <-

Forwarding a message through a DRO incurs a cost in terms of latency, network bandwidth, and CPU utilization on the DRO machine (which may in turn affect the latency of other forwarded messages). Transiting multiple DROs adds even more cumulative latency to a message. Other DRO-related factors such as portal buffering, network bandwidth, switches, etc., can also add latency.

Factors other than latency contribute to the cost of forwarding a message. Consider a message that can be sent from one domain to its destination domain over one of two paths. A three-hop path over 1Gbps links may be faster than a single-hop path over a 100Mbps link. Further, it may be the case that the 100Mbps link is more expensive or less reliable.

You assign forwarding cost values on a per-portal basis. When summed over a path, these values determine the cost of that entire path. A network of DROs uses forwarding cost as the criterion for determining the best path over which to resolve a topic.

DRO Routing <-

DROs have an awareness of other DROs in their network and how they are linked. Thus, they each maintain a topology map, which is periodically confirmed and updated. This map also includes forwarding cost information.

Using this information, the DROs can cooperate during topic resolution to determine the best (lowest cost) path over which to resolve a topic or to route control information. They do this by totaling the costs of all portals along each candidate route, then comparing the totals.

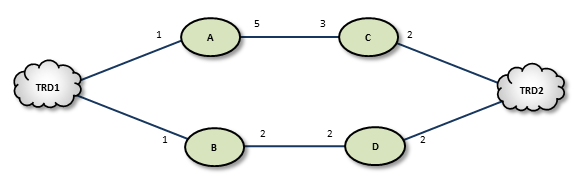

For example, the following figure shows two possible paths from TRD1 to TRD2: A-C (total route cost of 11) and B-D (total route cost of 7). In this case, the DROs select path B-D.

If a DRO or link along path B-D should fail, the DROs detect this and reroute over path A-C. Similarly, if an administrator revises cost values along path B-D so that its total exceeds the total for path A-C, the DROs reroute to A-C.

If the DROs find more than one path with the same lowest total cost value, i.e., a "tie", they select the path based on a node-ID selection algorithm. Since administrators do not have access to node IDs, this will appear to be a pseudo-random selection.
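Lowest-cost selection and rerouting can be sketched as follows. The per-portal cost values are hypothetical and were chosen only so that the totals match the example (11 for A-C, 7 for B-D). The alphabetical tie-break is a placeholder for the node-ID selection algorithm, whose details are not described here.

```python
# Hypothetical per-portal costs along each candidate path.
PATHS = {
    "A-C": [3, 3, 3, 2],  # total 11
    "B-D": [2, 2, 2, 1],  # total 7
}


def best_path(paths, failed=()):
    """Return (path name, total cost) of the lowest-cost usable path.

    Ties are broken alphabetically here, purely as a stand-in for the
    DRO's node-ID based selection.
    """
    totals = {name: sum(costs) for name, costs in paths.items()
              if name not in failed}
    if not totals:
        return None
    lowest = min(totals.values())
    tied = sorted(name for name, total in totals.items() if total == lowest)
    return tied[0], lowest


print(best_path(PATHS))                    # normal operation
print(best_path(PATHS, failed={"B-D"}))    # reroute after a failure on B-D

revised = dict(PATHS, **{"B-D": [4, 4, 3, 1]})  # administrator raises B-D to 12
print(best_path(revised))
```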

- Note

- You cannot configure parallel paths (such as for load balancing or Hot failover), as the DROs always select the lowest-cost path and only the lowest-cost path for all data between two points. However, you can devise an exception to this rule by configuring the destinations to be in different TRDs. For example, you can create an HFX Receiver bridging two receivers in different TRD contexts. The DROs route to both TRDs, and the HFX Receiver merges to a single stream for the application.

DRO Hotlinks <-

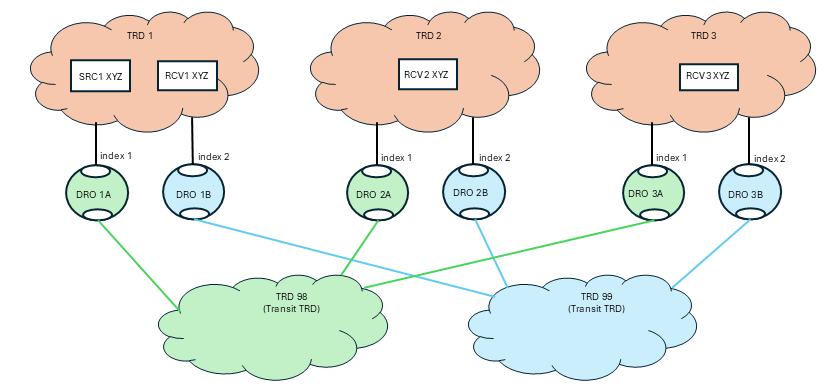

The DRO "hotlink" feature is intended for large UM deployments where multiple datacenters are interconnected by two independent global networks. The function of DRO Hotlinks is to implement a form of Hot Failover (HF) whereby two copies of each message are sent in parallel over the two global networks from a publishing datacenter to subscribing datacenters. The subscribing process will normally receive both copies of each message, but UM will deliver the first one it receives and discard the second.

The purpose of this is to provide high availability in the face of failure of the global network. It is unlikely that both global networks will fail at the same time, so if one does fail, the messages flowing over the other network will provide connectivity without the need to perform an explicit "fail over" operation (which can introduce packet loss and latency).

Hotlinks: Logical Interpretation <-

Hotlinks operate on a Topic Resolution Domain ("TRD") basis.

The primary job of the DRO is to connect TRDs together. In the above diagram, messages for topic "XYZ" published by SRC1 are received by RCV1, RCV2, and RCV3.

Let's consider RCV3. There are two possible paths to get from SRC1 to RCV3: transiting through TRD 98 and transiting through TRD 99. If this were a normal (not hotlinked) DRO deployment, UM would determine which path has the lowest cost and would route all messages through that path, not using the other path at all. With the hotlinks feature enabled, both DRO 1A and DRO 1B will create proxy receivers for topic XYZ and both will forward every message across the corresponding transit TRD to the destination. Once in the destination TRD 3, both copies of each message are received by the subscribing application RCV3, and UM will deliver the first one that arrives and discard the second.

To enable the hotlinks feature, you must:

- Define a hotlink index on the DRO portal used by the datacenter applications (not the redundant WAN links).

- Configure the source and receiver for "use_hotlink".

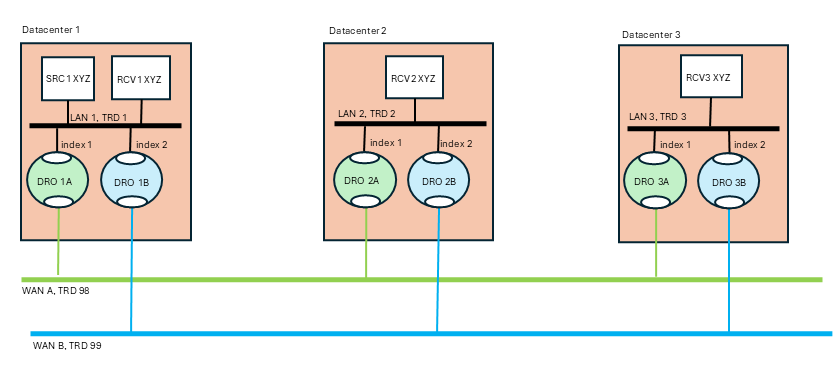

Hotlinks: Physical Interpretation <-

Normally there is no expectation of mapping between TRDs and the physical entities (networks, datacenters, hosts). The distribution of programs to TRDs is logical, not physical. However, the hotlinks feature deviates from that pattern with the expectation that TRDs map onto specific physical entities. Here is the previous logical TRD network shown in its physical embodiment:

The above diagram is a "dual hub with spokes" topology where the two WAN-based TRDs are the hubs and the data centers are the spokes. It is assumed that each data center's LAN uses LBT-RM (multicast) data transports, although this is not strictly necessary.

The publisher for topic "XYZ" (SRC1) is in datacenter 1. It sends a single message via multicast onto LAN 1. The subscriber "RCV1" will receive a copy of the message, as will the two DROs labeled "1A" and "1B". Those DROs will forward the message onto "WAN A" and "WAN B" respectively. Now you have two copies of the message. Let's follow the message into datacenter 2 via DROs "2A" and "2B". Each DRO receives its respective copy of each message and forwards it onto LAN 2. Note there are still two copies of the message on LAN 2. Finally receiver "RCV2" gets both copies of the message, and UM's "hot failover" logic delivers the first one to the application and discards the second one.

Note that the WAN TRDs can also use multicast, or can be configured for unicast-only operation. In fact, even the LANs can be used in unicast mode, although that will force the publisher "SRC1" to send the message three times, to RCV1, DRO 1A, and DRO 1B.

The benefit of hotlinks is that if WAN A fails, the receivers for XYZ will not detect any disruption or latency outliers - WAN B will continue carrying the messages. There is no "fail over" sequence. A downside of this design is that the receivers will experience twice the packet load. However, also note that the second copy of each message is discarded inside UM, so no application overhead is consumed.
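The "deliver the first copy, discard the second" behavior can be modeled with a set of already-seen sequence numbers. This is only a sketch of the idea: the tuple layout, the path labels, and the use of a simple per-source sequence number are assumptions, not a description of UM's internal mechanism.

```python
def deliver_first_copy(arrivals):
    """Deliver each message once, in arrival order of its first copy.

    `arrivals` is a list of (path, sequence_number, payload) tuples for a
    single source, in the order the receiver sees them.
    """
    seen = set()
    delivered = []
    for path, sequence_number, payload in arrivals:
        if sequence_number in seen:
            continue  # duplicate from the other WAN, discarded inside the receiver
        seen.add(sequence_number)
        delivered.append((sequence_number, payload))
    return delivered


arrivals = [
    ("WAN A", 1, "m1"),
    ("WAN B", 1, "m1"),
    ("WAN B", 2, "m2"),  # WAN B happens to be faster for this message
    ("WAN A", 2, "m2"),
    ("WAN B", 3, "m3"),  # WAN A has failed; WAN B alone carries the stream
]
print(deliver_first_copy(arrivals))
```

The application sees an uninterrupted stream of messages 1, 2, and 3 even though one path stopped delivering partway through.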

The hotlinks feature is intended to be used in deployments similar to the above diagram, with DROs interconnecting multiple datacenters. It is not designed to handle redundancy within a datacenter.

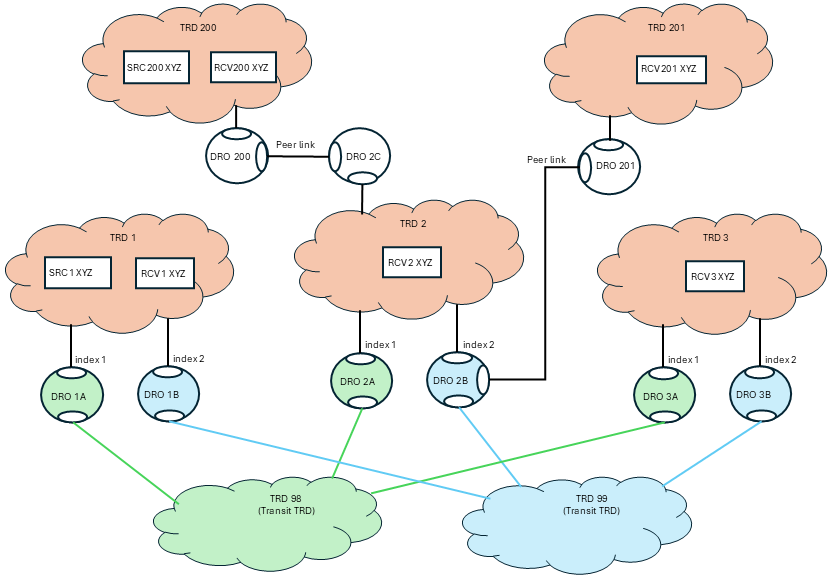

Mixing Regular and Hotlinked DROs <-

It is possible to extend the basic hub-and-spoke topology with spurs. For example, if there are two small offices near TRD 2 where the size does not justify full redundant global connectivity, TRD 2 could service those offices as TRDs 200 and 201 using non-hotlinked DROs.

There are two data flows of interest here:

- SRC200 is configured for "use_hotlink", even though TRD 200 is not hotlinked (i.e. does not have indices set). This includes the proper headers in the messages to allow hotlinked operation downstream. So as the messages flow into TRD 2, they are not redundant. But they will exit TRD 2 via DRO 2A and DRO 2B, which are configured for hotlinks. So receivers in TRD 1 and TRD 3 will have redundancy if one of the global WANs fails.

- SRC1 is configured for "use_hotlink", and RCV2 and RCV3 will get the full benefit of it. However, because of the way UM works, RCV200 and RCV201 will not benefit from the hotlinks. This is because DRO 200 and DRO 201 will not add the right information for RCV200 and RCV201 to join both data streams, so they will only join one. Maybe RCV200 joins the data stream sent via TRD 98 and maybe RCV201 joins the data stream sent via TRD 99 (it's not possible to predict which each will join). If the global network hosting TRD 98 fails, RCV200 will experience an outage until the DROs time out the flow, at which point RCV200 will join the other data stream. Note that RCV201 will not experience any interruption.

Note that you cannot add a redundant link between TRD2 and TRD200 to get hotlinks there also. See DRO Hotlink Restrictions item 5, "No hotlink chains".

Implementing DRO Hotlinks <-

Applications do not need special source code to make use of hotlinks. Contrast this with the Hot Failover (HF) feature, which requires the use of special hot failover APIs. Hotlinks use standard source APIs (but see DRO Hotlink Restrictions) and are enabled through configuration.

DRO Hotlink Restrictions <-

-

No Hot Failover - Hotlinks is not compatible with regular Hot Failover (HF). They are intended for different use cases and may not be used in the same UM network.

-

No Smart Sources - Hotlinks is not supported by Smart Sources.

-

No XSP - Hotlinks is not supported by Transport Services Provider (XSP). We plan to add this support in a future release.

-

Locate Stores in same TRD as source - Hotlinks supports UM's Persistence feature. However, whereas a non-hotlinked DRO network allows Stores to be placed anywhere in the network, the hotlink feature adds the restriction that the Stores must be in the same TRD as the source. Note that Informatica considers this restriction to generally be the best practice except in certain limited use cases.

-

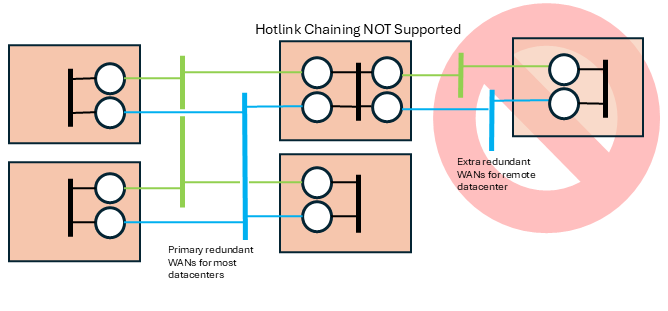

No hotlink chains - Informatica only supports a single central redundant pair of networks using the hotlinks feature. UM does not support multiple hotlinks hops (Contact UM Support for potential workarounds if this is necessary). For example, the this topology is not supported:

Routing Topologies <-

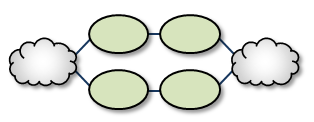

You can configure multiple DROs in a variety of topologies. Following are several examples.

Direct Link <-

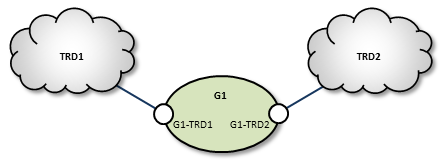

The Direct Link configuration uses a single DRO to directly connect two TRDs. For a configuration example, see Direct Link Configuration.

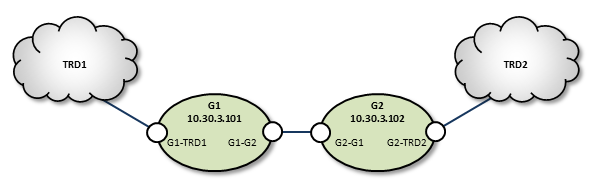

Single Link <-

A Single Link configuration connects two TRDs using a DRO on each end of an intermediate link. The intermediate link can be a "peer" link, or a transit TRD. For configuration examples, see Peer Link Configuration and Transit TRD Link Configuration.

Parallel Links <-

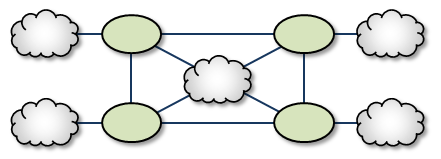

Parallel Links offer multiple complete paths between two TRDs. However, UM will not load-balance messages across both links. Rather, parallel links are used for failover purposes. You can set preference between the links by setting the primary path for the lowest cost and standby paths at higher costs. For a configuration example, see Parallel Links Configuration.

Loops <-

Loops let you route packets back to the originating DRO without reusing any paths. Also, if any peer-peer links are interrupted, the looped DROs are able to find an alternate route between any two TRDs.

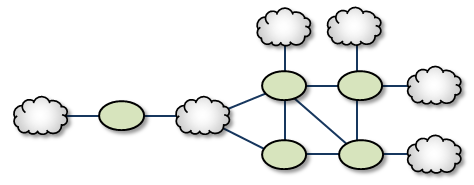

Loop and Spur <-

The Loop and Spur has one or more DROs tangential to the loop and accessible only through a single DRO participating in the loop. For a configuration example, see Loop and Spur Configuration.

Loop with Centralized TRD <-

Adding a TRD to the center of a loop enhances its rerouting capabilities.

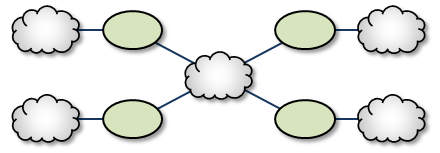

Star with Centralized TRD <-

A Star with a centralized TRD does not offer rerouting capabilities but does provide an economical way to join multiple disparate TRDs.

Star with Centralized DRO <-

The Star with a centralized DRO is the simplest way to bridge multiple TRDs. For a configuration example, see Star Configuration.

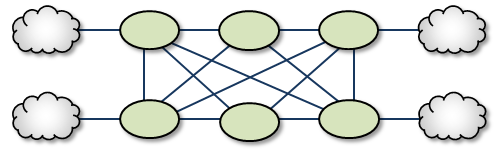

Mesh <-

The Mesh topology provides peer portal interconnects between many DROs, approaching an all-connected-to-all configuration. This provides multiple possible paths between any two TRDs in the mesh. Note that this diagram is illustrative of the ways the DROs may be interconnected, and not necessarily a practical or recommended application. For a configuration example, see Mesh Configuration.

Palm Tree <-

The Palm Tree has a set of series-connected TRDs fanning out to a more richly meshed set of TRDs. This topology tends to pass more concentrated traffic over common links for part of its transit while supporting a loop, star, or mesh near its terminus.

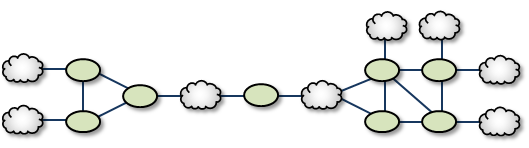

Dumbbell <-

Similar to the Palm Tree, the Dumbbell has a funneled route with a loop, star, or mesh topology on each end.

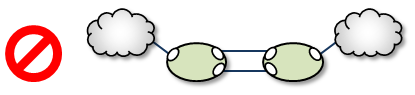

Unsupported Configurations <-

When designing DRO networks, do not use any of the following topology constructs.

Two peer-to-peer connections between the same two DROs:

Two endpoint connections from the same DRO to the same TRD:

Assigning two different Domain ID values (from different DROs) to the same TRD:

UM Feature Compatibility <-

You must install the UM Dynamic Routing Option with its companion Ultra Messaging UMS, UMP, or UMQ product, and versions must match. While most UM features are compatible with the DRO, some are not. Following is a table of features and their compatibilities with the DRO.

| UM Feature | DRO Compatible? | Notes |
|---|---|---|
| Connect and Disconnect Source Events | Yes, but see Source Connect and Disconnect Events | |
| Hot Failover (HF) | Yes | The DRO can pass messages sent by HF publishers to HF receivers, however the DRO itself cannot be configured to originate or terminate HF data streams. |
| Hot Failover Across Multiple Contexts (HFX) | Yes | |
| Late Join | Yes | |
| Message Batching | Yes | |
| Monitoring/Statistics | Yes | |
| Multicast Immediate Messaging (MIM) | Yes | |
| Off-Transport Recovery (OTR) | Yes | |
| Ordered Delivery | Yes | |
| Pre-Defined Messages (PDM) | Yes | |
| Request/Response | Yes | |
| Self Describing Messaging (SDM) | Yes | |
| Smart Sources | Partial | The DRO does not support proxy sources sending data via Smart Sources. The DRO does accept ingress traffic to proxy receivers sent by Smart Sources. |
| Source Side Filtering | Yes | The DRO supports transport source side filtering. You can activate this either at the originating TRD source, or at a downstream proxy source. |
| Source String | Yes, but see Source Strings in a Routed Network | |
| Transport Acceleration | Yes | |
| Transport LBT-IPC | Yes | |
| Transport LBT-RM | Yes | |
| Transport LBT-RU | Yes | |
| Transport LBT-SMX | Partial | The DRO does not support proxy sources sending data via LBT-SMX. Any proxy sources configured for LBT-SMX will be converted to TCP, with a log message warning of the transport change. The DRO does accept LBT-SMX ingress traffic to proxy receivers. |
| Transport TCP | Yes | |
| Transport Services Provider (XSP) | No | |
| JMS, via UMQ broker | No | |
| Spectrum | Yes | The DRO supports UM Spectrum traffic, but you cannot implement Spectrum channels in DRO proxy sources or receivers. |
| UMP Implicit and Explicit Acknowledgments | Yes | |
| UMP Persistent Store | Yes | |
| UMP Persistence Proxy Sources | Yes | |
| UMP Quorum/Consensus Store Failover | Yes | |
| UMP Managing RegIDs with Session IDs | Yes | |
| UMP RPP: Receiver-Paced Persistence (RPP) | Yes | |
| UMQ Brokered Queuing | No | |
| UMQ Ultra Load Balancing (ULB) | No | |
| Ultra Messaging Desktop Services (UMDS) | Not for client connectivity to the UMDS server | |
| Ultra Messaging Manager (UMM) | Yes | Not for DRO management |
| UM SNMP Agent | No | |
| UMCache | No | |
| UM Wildcard Receivers | Yes | |
| Zero Object Delivery (ZOD) | Yes | |

DRO Implementation <-

DRO Configuration Overview <-

When the DRO daemon launches, it uses configuration option settings to determine its behavior and expectations. You specify option values in an XML configuration file, and reference the file from a command line argument.

Typically, you have a separate XML configuration file for each DRO, which contains structured configuration elements that describe aspects of the DRO. Within this XML configuration file, each endpoint portal definition points to a UM configuration file, which allows the portal to properly connect to its TRD.

Creating Applications for DRO Compatibility <-

When developing messaging applications that use Ultra Messaging and, in particular, the DRO, please observe the following guidelines.

Naming and Identification <-

An important part of successfully implementing DROs is prudent and error-free naming of TRDs, DROs, portals, etc., as well as correct identification of IP addresses and ports. It is good practice to first design the DRO network by defining all connections and uniquely naming all DROs, portals, and TRDs. This works well as a diagram similar to some examples presented in this document. Include the following names and parameters in your design diagram:

- TRD names and IDs

- DRO names

- Portal names

- Portal costs

For example, a well-prepared DRO design could look like the following figure.

Portal Costs <-

A network of DROs uses forwarding cost as the criterion for determining the best (lowest cost) path over which to resolve a topic and route data. Forwarding cost is simply the sum of all portal costs along a multi-DRO path. Thus, total cost for the single path in the above example is 34. (Note that this is a non-real-world example, since costs are pointless without alternate routes to compare to.) You assign portal costs via the <cost> configuration option.

After the DRO network calculates its paths, if a new lower-cost source becomes available, receivers switch to that path.
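The cost arithmetic can be sketched in a few lines of Python. The DRO names, portal names, and cost values below are hypothetical and are not taken from the figure; the sketch only shows that a path's forwarding cost is the sum of its portal costs and that the lower total is preferred.

```python
# Hypothetical (DRO, portal, cost) entries along two candidate paths, in hop order.
path_a = [("G1", "G1-TRD1", 1), ("G1", "G1-G2", 3),
          ("G2", "G2-G1", 3), ("G2", "G2-TRD2", 1)]
path_b = [("G3", "G3-TRD1", 1), ("G3", "G3-G4", 1),
          ("G4", "G4-G3", 1), ("G4", "G4-TRD2", 1)]


def forwarding_cost(path):
    """Forwarding cost is the sum of every portal cost along the path."""
    return sum(cost for _dro, _portal, cost in path)


cost_a = forwarding_cost(path_a)
cost_b = forwarding_cost(path_b)

# If path_b becomes available after path_a is in use, its lower total
# makes it the preferred path.
preferred = min(("path_a", cost_a), ("path_b", cost_b), key=lambda item: item[1])[0]
print(cost_a, cost_b, preferred)
```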

Access Control Lists (ACL) <-

In the DRO, an Access Control List (ACL) is a method of blocking traffic from being forwarded from one TRD to another.

Typical applications for this feature are:

- Prevent unauthorized access to sensitive messages.

- Prevent overloading of bandwidth-limited WAN links, even in the face of accidental use of overly-permissive wildcard receivers.

- Limit the amount of Topic Resolution traffic for topics on TRDs that don't need those topics. However, the use of wildcard receivers can result in TR traffic even for topics which are blocked from being forwarded.

You can apply Access Control Lists to one or more of a DRO's portals to filter traffic by topic, transport, transport session, etc. You configure an ACL in a DRO's XML configuration's <acl> element, as a child of an <endpoint> or <peer> portal. As messages are processed by the DRO, the portals use the ACLs to decide whether to reject the messages or accept them.

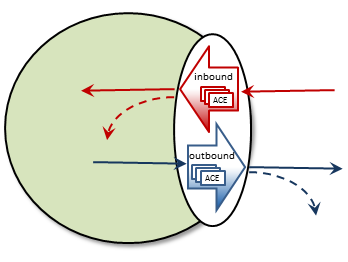

Inbound vs. Outbound

There are two types of ACLs: inbound and outbound.

An inbound ACL tests messages from a source TRD on their way into a DRO portal, and decides whether to reject or accept them. If accepted, the messages can be forwarded to the appropriate destination portal(s).

An outbound ACL tests messages on their way out of a DRO portal, and decides whether to reject them, or transmit them to the destination TRD.

This distinction becomes especially important when a DRO has more than two portals. Messages rejected inbound cannot be forwarded at all. Outbound rejection, by contrast, lets messages be forwarded out of some portals but not others.

An ACL contains one or more Access Control Entries (ACEs).

Access Control Entry (ACE)

An ACE specifies a set of message matching criteria, and an action to perform based on successful matches. The action is either accept (the message is made available for forwarding, based on interest) or reject (the message is dropped).

When more than one ACE is supplied in an ACL, messages are tested against each ACE in the order defined until a match is found, at which point the ACE specifies what to do (reject or accept).

An ACE contains one or more conditional elements.

Conditional Elements

Conditional elements do the work of testing various characteristics of messages to determine if they should be rejected or accepted (made available for forwarding).

When more than one conditional element is supplied in an ACE, received messages are tested against all of them to determine if the ACE should be applied.

There are two classes of conditional elements:

- Topic conditionals, which test the topic string of a message.

- Transport session conditionals, which test network transport session characteristics of a message.

Topic conditionals can be included on both inbound and outbound ACLs. The topic conditionals are:

- <topic> - tests for a specific topic name of messages,

- <pcre-pattern> - matches a group of topics according to a regular expression pattern,

- <regex-pattern> - deprecated, use <pcre-pattern> instead.

Transport session conditionals only apply to inbound ACLs (they are ignored for outbound). The transport session conditionals are:

- <transport> - tests the transport type of messages.

- <source-ip> - tests the IP address of the source or proxy source of messages.

- <multicast-group> - tests the destination multicast group of LBT-RM messages.

- <udp-destination-port> - tests the destination port of LBT-RM messages.

- <udp-source-port> - tests the source port of LBT-RM and LBT-RU messages.

- <tcp-source-port> - tests the source port of TCP messages.

- <xport-id> - tests the transport ID of LBT-IPC messages.

Conditional elements are children of the <ace> element. If you place multiple conditions within an ACE, the DRO performs an "and" operation with them. That is, all relevant conditions in the ACE must be true for the ACE to be applied to a message.

A portal silently ignores conditional elements that don't apply, for example, a transport conditional used on an outbound ACL, or a UDP-based conditional when a TCP message is received.
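The "and" rule and the ignoring of non-applicable conditionals can be modelled with a short Python sketch. This is an illustration of the logic only, not the DRO's implementation: the transport tokens ("tcp", "lbtrm", "lbtru", "lbtipc"), the port numbers, and the dictionary representation of a message are all assumptions made for the example. How the DRO treats an ACE in which every conditional is ignored is not described here, and the sketch does not try to settle it.

```python
# Topic conditionals apply to inbound and outbound ACLs.
TOPIC_CONDITIONALS = {"topic"}

# A subset of the transport session conditionals, mapped to the (assumed)
# transport tokens for which each one is relevant.
SESSION_CONDITIONALS = {
    "transport": {"tcp", "lbtrm", "lbtru", "lbtipc"},
    "multicast-group": {"lbtrm"},
    "udp-source-port": {"lbtrm", "lbtru"},
    "tcp-source-port": {"tcp"},
}


def relevant(name, msg, direction):
    """Decide whether a conditional is tested or silently ignored."""
    if name in TOPIC_CONDITIONALS:
        return True
    if direction == "outbound":
        return False  # session conditionals are ignored on outbound ACLs
    return msg["transport"] in SESSION_CONDITIONALS[name]


def ace_applies(conditions, msg, direction):
    """All relevant conditions must be true for the ACE to apply."""
    return all(msg.get(name) == value
               for name, value in conditions.items()
               if relevant(name, msg, direction))


ace = {"topic": "ABC", "transport": "tcp", "udp-source-port": 14400}
tcp_msg = {"topic": "ABC", "transport": "tcp", "tcp-source-port": 14371}
lbtru_msg = {"topic": "ABC", "transport": "lbtru", "udp-source-port": 14400}

print(ace_applies(ace, tcp_msg, "inbound"))     # UDP conditional ignored for TCP
print(ace_applies(ace, lbtru_msg, "inbound"))   # transport conditional fails
print(ace_applies(ace, lbtru_msg, "outbound"))  # only the topic is tested
```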

Reject by Default

An implicit "reject all" is at the end of every ACL, so the DRO rejects any topic not matched by any ACE. When an ACL is configured for a portal, rejection is the default behavior.

For example, to accept and forward only messages for topic ABC and reject all others:

No "reject" ACE is needed since rejection is the default.

In contrast, to accept all messages except for topic ABC:

The second ACE is used as a "match all", which effectively changes the default behavior to "accept".
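The two cases above can be modelled in Python to make the default explicit. This is a sketch of the decision logic only, not DRO configuration syntax; representing an ACE as an (action, predicate) pair is an assumption made for the example.

```python
def evaluate_acl(aces, topic):
    """Test ACEs in order; the first match decides. No match means reject."""
    for action, matches in aces:
        if matches(topic):
            return action
    return "reject"  # the implicit "reject all" at the end of every ACL


# Accept only topic ABC; everything else falls through to the implicit reject.
accept_only_abc = [
    ("accept", lambda t: t == "ABC"),
]

# Reject topic ABC, then use a "match all" ACE to accept everything else.
accept_all_but_abc = [
    ("reject", lambda t: t == "ABC"),
    ("accept", lambda t: True),
]

for name, acl in (("only ABC", accept_only_abc), ("all but ABC", accept_all_but_abc)):
    print(name, evaluate_acl(acl, "ABC"), evaluate_acl(acl, "XYZ"))
```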

ACE Ordering

Since the behavior for multiple ACEs is to test them in the order defined, ACEs should be ordered from specific to general.

In the example below, a user named "user1" is assigned to the LAN1 TRD. It is desired to forward all non-user-specific messages, but restrict user-specific messages to only that user.

By ordering the ACEs as shown, messages for USER.user1 will be forwarded by the first ACE, but messages for USER.user2, etc. will be rejected due to the second ACE. Messages for topics not starting with "USER." will be forwarded by the third ACE.

Note that the string in "<topic>USER.user1</topic>" is not a regular expression pattern, and therefore does not need any special escaping or meta characters. The "<pcre-pattern>^USER\..*</pcre-pattern>" is a regular expression, and therefore needs the "^" anchor and the "\." escape sequence.
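The effect of ACE ordering can be checked with a small Python model. It is a sketch under stated assumptions: Python's `re` module stands in for PCRE, the tuple representation of an ACE is invented for the example, and the pattern used for the third "match all" ACE (`.*`) is an assumption, since only the first two matching strings are quoted above.

```python
import re

# Each ACE is (action, kind, value). Kind "topic" is an exact string compare;
# kind "pcre" is a regular expression search.
ACES = [
    ("accept", "topic", "USER.user1"),
    ("reject", "pcre", r"^USER\..*"),
    ("accept", "pcre", r".*"),  # assumed catch-all pattern
]


def ace_matches(kind, value, topic):
    if kind == "topic":
        return topic == value
    return re.search(value, topic) is not None


def decide(aces, topic):
    """First matching ACE wins; no match means the implicit reject."""
    for action, kind, value in aces:
        if ace_matches(kind, value, topic):
            return action
    return "reject"


# Same ACEs with the general reject placed first: user1 is now blocked too.
MISORDERED = [ACES[1], ACES[0], ACES[2]]

for topic in ("USER.user1", "USER.user2", "PRICES.IBM"):
    print(topic, decide(ACES, topic), decide(MISORDERED, topic))
```

Placing the general "USER." reject ahead of the specific accept shows why ACEs should run from specific to general.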

Timers and Intervals <-

The DRO offers a wide choice of timer and interval options to fine tune its behavior and performance. There are interactions and dependencies between some of these, and if misconfigured, they may cause race or failure conditions.

This manual's description of configuration options (see DRO Configuration Reference) identifies such relationships. Please heed them.

Multicast Immediate Messaging Considerations <-

Multicast Immediate Messages (MIMs) may pass through the DRO. You cannot filter MIMs with Access Control Lists (ACLs); MIMs are forwarded to all TRDs. Informatica does not recommend using MIM for messaging traffic across the DRO. MIM is intended for short-lived topics and applications that cannot tolerate a delay between source creation and the sending of the first message. See also Multicast Immediate Messaging.

Persistence Over the DRO <-

The DRO supports UMP persistence by routing all necessary control and retransmission channels along with transport and topic resolution traffic. A typical implementation places the UMP persistent store in the same TRD as its registered source, as shown in the following figure.

The DRO also supports UMP implementations with the store located in a receiver's TRD, as shown in the following figure.

Note: For more reliable operation when using UMP with DROs, Informatica recommends enabling OTR.

Late Join and Off-Transport Recovery <-

The DRO supports sources and receivers configured for Late Join and/or Off-Transport Recovery (OTR). Retransmission requests and subsequent retransmissions are conducted across the entire path through the DRO network. A DRO's proxy sources do not have Late-Join/OTR retention buffers and hence, are not able to provide recovered messages.

Topic Resolution Reliability <-

Topic resolution can sometimes remain in a quiescent phase due to link interruption, preventing needed re-subscription topic resolution activity. Two ways you can address this are:

- For isolated incidents, call lbm_context_topic_resolution_request() (see example lbmtrreq.c). This restarts the sustaining phase.

- For more chronic problems, such as a DRO link (especially an endpoint link) over a WAN of questionable reliability, consider configuring topic resolution to stay in the sustaining phase (options resolver_advertisement_minimum_sustain_duration (source) and resolver_query_minimum_sustain_duration (receiver)).

BOS and EOS Behavior Over the DRO <-

Through a network of DROs, a topic traverses a separate session for each link along its path. Thus, the DRO reports BOS/EOSs based on the activity between the proxy source transport and its associated receiver. There is no end-to-end, application-to-application reporting of the data path state. Also, in the case of multiple topics being assigned to multiple sessions, topics may find themselves with different session mates from hop to hop. Of course, this all influences when, and for which transport session, a topic's BOSs and EOSs are issued.

DRO Reliable Loss <-

The DRO can create a situation where a "reliable" transport (TCP or LBT-IPC) can experience out-of-order message delivery.

The DRO can perform a "protocol conversion" function. That is, an originating source can use a UDP-based protocol (LBT-RM or LBT-RU), but the proxy source for a remote receiver can use a "reliable" protocol (TCP or LBT-IPC). With a UDP-based protocol, messages can arrive at the DRO network out of order, usually due to packet loss and recovery. However, when those out-of-order messages are forwarded across a "reliable" protocol (TCP or LBT-IPC), the receiver does not expect the sequence number gap, and immediately declares the out-of-order messages as unrecoverable loss, even though the missing message arrives shortly thereafter.

Starting in UM version 6.12, there are two new configuration options: transport_tcp_dro_loss_recovery_timeout (receiver) and transport_lbtipc_dro_loss_recovery_timeout (receiver), which modify the receiver's behavior. Instead of declaring a gap immediately unrecoverable, a delay is introduced which is similar to what a UDP-based receiver uses to wait for lost and retransmitted datagrams. If the missing message arrives within the delay time, the messages are delivered to the application without loss.

Be aware that this functionality is only used with "reliable" protocols published by a DRO's proxy source. If this delay feature is enabled, it will not apply to a "reliable" protocol that is received directly from the originating source.

Note however that you can get genuine gaps in the "reliable" data stream without recovery. For example, an overloaded DRO can drop messages. Or a DRO's proxy receiver can experience unrecoverable loss. In that case, the delay will have to expire before the missing messages are declared unrecoverable and subsequent data is delivered.

- Attention

- The delay times default to 0, which retains the pre-6.12 behavior of immediately declaring sequence number gaps unrecoverable. If you want this new behavior, you must configure the appropriate option.
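The difference between a zero and a non-zero recovery delay can be illustrated with a toy receiver model in Python. This is a sketch of the behavior described above, not the UM receiver: the function name, the event tuples, and the abstract time units are assumptions, and time only advances when a message arrives.

```python
def simulate(arrivals, recovery_timeout):
    """Replay (time, sequence_number) arrivals and return the receiver's events.

    recovery_timeout models the delay before a sequence gap is declared
    unrecoverable; 0 reproduces the immediate-loss behavior.
    """
    events = []      # ("deliver", seq) or ("loss", seq), in application order
    expected = 0     # next sequence number owed to the application
    held = set()     # out-of-order messages waiting behind a gap
    deadline = None  # time at which the current gap is given up

    def drain():
        nonlocal expected
        while expected in held:
            held.remove(expected)
            events.append(("deliver", expected))
            expected += 1

    def expire(now):
        nonlocal expected, deadline
        while held and now >= deadline:
            first = min(held)
            events.extend(("loss", seq) for seq in range(expected, first))
            expected = first
            drain()
            deadline = now + recovery_timeout
        if not held:
            deadline = None

    for now, seq in arrivals:
        expire(now)
        if seq < expected:
            continue  # already delivered or declared lost: discard
        held.add(seq)
        drain()
        if held and deadline is None:
            deadline = now + recovery_timeout
        expire(now)
    expire(float("inf"))  # end of input: give up on any remaining gap
    return events


reordered = [(0, 0), (1, 2), (2, 1), (3, 3)]    # message 1 arrives late
immediate = simulate(reordered, recovery_timeout=0)
delayed = simulate(reordered, recovery_timeout=5)
genuine_gap = simulate([(0, 0), (1, 2), (9, 3)], recovery_timeout=5)
print(immediate, delayed, genuine_gap, sep="\n")
```

With a delay of 0 the late message is reported as loss and then discarded when it arrives; with a non-zero delay it is delivered in order. In the genuine-gap case, message 2 is held until the delay expires, which is the cost noted above.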

Topology Configuration Examples <-

Following are example configurations for a variety of DRO topologies. These are the topology examples presented in Routing Topologies.

In a real-world situation, you would have DRO XML configuration files with their portal interfaces referencing complete UM configuration files. However, for these examples, the referred domain configuration files are simplified to contain only information relevant to the applicable DRO. As part of this simplification, domain configuration files show interfaces for only one or two transport types.

Also, IP addresses are provided in some cases and omitted in other cases. This is because initiator peer portals need to know the IP addresses (and port numbers) of their corresponding acceptor portals to establish connections, whereas endpoint portals communicate via topic resolution and thus, do not need to know IP addresses.

- Note

- Before designing any DRO implementations based on configurations or examples other than the types presented in this document, please contact UM Support.

Direct Link Configuration <-

This example uses a DRO to connect two topic resolution domain LANs.

TRD1 Configuration

This UM configuration file, trd1.cfg, describes TRD1 and is referenced in the DRO configuration file.

G1 Configuration

This DRO configuration file defines two endpoint portals. In the daemon section, we have turned on monitoring for all endpoint portals in the DRO. The configuration specifies that all statistics be collected every 5 seconds and uses the lbm transport module to send statistics to your monitoring application, which runs in TRD1. See also UM Concepts, Monitoring UMS. The Web Monitor has also been turned on (port 15304) to monitor the performance of the DRO.

TRD2 Configuration

The configuration file trd2.cfg could look something like this.

Peer Link Configuration <-

In cases where the DRO connection between two TRDs must tunnel through a WAN or TCP/IP network, you can implement a DRO at each end, as shown in the example below.

TRD1 Configuration

G1 Configuration

Following is an example of two companion peer portals (on different DROs) configured via DRO XML configuration file for a TCP-only setup. Note that one must be an initiator and the other, an acceptor.

G2 Configuration

TRD2 Configuration

Transit TRD Link Configuration <-

This example, like the previous one, configures two localized DROs tunneling a connection between two TRDs; however, the DROs in this example tunnel through an intermediate TRD. This has the added effect of connecting three TRDs.

TRD1 Configuration

G1 Configuration

Following is an example of two companion peer portals (on different DROs) configured via DRO XML configuration file for a TCP-only setup. Note that one must be an initiator and the other, an acceptor.

TRD2 Configuration

G2 Configuration

TRD3 Configuration

Parallel Links Configuration <-

This example is similar in purpose to the single link, peer-to-peer example, except that a second pair of DROs is added as a backup route. You can set one of these as a secondary route by assigning a higher cost to portals along the path. In this case we set G3 and G4's portal costs to 5, forcing the lower route to be selected only if the upper (G1, G2) route fails.

Also note that we have configured the peer portals for the leftmost or odd-numbered DROs as initiators, and the rightmost or even-numbered DRO peers as acceptors.

TRD1 Configuration

G1 Configuration

G2 Configuration

G3 Configuration

G4 Configuration

TRD2 Configuration

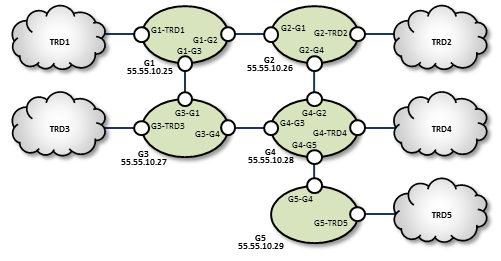

Loop and Spur Configuration <-

TRD1 Configuration

G1 Configuration

G2 Configuration

TRD2 Configuration

TRD3 Configuration

G3 Configuration

G4 Configuration

TRD4 Configuration

G5 Configuration

TRD5 Configuration

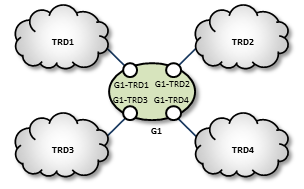

Star Configuration <-

This network consists of four TRDs. Within each TRD, full multicast connectivity exists. However, no multicast connectivity exists between the four TRDs.

G1 Configuration

The configuration for this DRO also has transport statistics monitoring and the WebMonitor turned on.

TRD1 Configuration

TRD2 Configuration

TRD3 Configuration

TRD4 Configuration

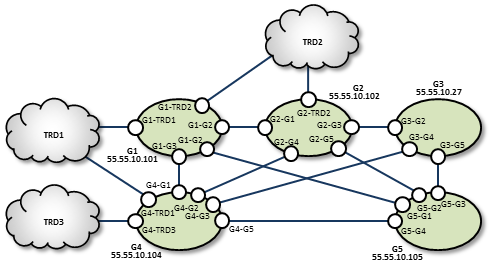

Mesh Configuration <-

The mesh topology utilizes many connections between many nodes, to provide a variety of alternate routes. However, meshes are not the best solution in many cases, as unneeded complexity can increase the chance for configuration errors or make it more difficult to trace problems.

TRD1 Configuration

G1 Configuration

G2 Configuration

G3 Configuration

TRD2 Configuration

TRD3 Configuration

G4 Configuration

G5 Configuration

Using UM Configuration Files with the DRO <-

Within the DRO configuration file, the endpoint portal's <lbm-config> element lets you import configurations from either a plain text or XML UM configuration file. However, using the XML type of UM configuration files provides the following advantages over plain text UM configuration files:

- You can apply UM attributes per topic and/or per context.

- You can apply attributes to all portals on a particular DRO using a UM XML template (instead of individual portal settings).

- Using UM XML templates to set options for individual portals lets the DRO process these settings in the <daemon> element instead of within each portal's configuration.

Setting Individual Endpoint Options <-

When setting endpoint options, first name the context of each endpoint in the DRO's XML configuration file.

Then assign configuration templates to those contexts in the UM XML configuration file.

You specify the unique options for each of this DRO's two endpoints in the UM XML configuration <templates> section used for G1-E1-options and G1-E2-options.

DRO and UM XML Configuration Use Cases <-

One advantage of using UM XML configuration files with the DRO is the ability to assign unique UM attributes to the topics and contexts used for the proxy sources and receivers (which plain text UM configuration files cannot do). The following example shows how to assign a different LBTRM multicast address to a source based on its topic.

Create a new UM XML configuration template for the desired topic name.

Then include this template in the <application> element associated with the DRO.

It is also possible to assign UM attributes directly in the <application> tag. For example, the following specifies that a particular topic should use an LBT-RU transport.

Sample Configuration <-

The following sample configuration incorporates many of the examples mentioned above. The DRO applies options to all UM objects created. The UM XML configuration file overwrites these options for two specific topics. The first topic, LBTRM_TOPIC, uses a different template to change its transport from TCP to LBTRM, and to set an additional property. The second topic, LBTRU_TOPIC, also changes its transport from TCP to a new value. However, its new attributes are applied directly in its associated topic tag, instead of referencing a template. In addition, this sample configuration assigns the rm-source template to all sources and receivers associated with the context endpt_1.

XML UM Configuration File <-

XML DRO Configuration File <-

This DRO uses the above XML UM configuration file, sample-config.xml, to set its UM options. It has three endpoints, one of which has the context endpt_1.

Running the DRO Daemon <-

To run the DRO, ensure the following:

- Library environment variable paths are set correctly (LD_LIBRARY_PATH)

- The license environment variable LBM_LICENSE_FILENAME points to a valid DRO license file.

- The configuration file is error free.
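A pre-launch check along these lines can be scripted. The Python sketch below is an assumption-laden illustration, not a supported tool: it only verifies that the two environment variables are set and that the configuration text is well-formed XML, which is a much weaker test than "error free" (it does not validate DRO elements or the license contents). The paths and the placeholder XML are hypothetical.

```python
import xml.etree.ElementTree as ET

REQUIRED_ENV = ("LD_LIBRARY_PATH", "LBM_LICENSE_FILENAME")


def preflight(env, config_text):
    """Return a list of problems found before launching the daemon."""
    problems = [f"{name} is not set" for name in REQUIRED_ENV if not env.get(name)]
    try:
        ET.fromstring(config_text)  # well-formedness only, not DRO validation
    except ET.ParseError as exc:
        problems.append(f"configuration is not well-formed XML: {exc}")
    return problems


# Hypothetical environment and placeholder configuration text.
good_env = {"LD_LIBRARY_PATH": "/opt/um/lib",
            "LBM_LICENSE_FILENAME": "/opt/um/license.txt"}
ok = preflight(good_env, "<placeholder><daemon/></placeholder>")
bad = preflight({}, "<placeholder><daemon></placeholder>")
print(ok)
print(bad)
```

In practice the function would be called with `os.environ` and the contents of the DRO's XML configuration file.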

Typically, you run the DRO with one configuration file argument, for example:

(FYI: "tnwgd" stands for "Twenty Nine West Gateway Daemon", a historical name for the DRO.)

The DRO logs version information on startup. The following is an example of this information:

DRO NAT Transit <-

Some networks make use of a NAT router to map one IP address space onto another. If your network architecture includes LANs that are bridged with a NAT device, UM receivers will usually not be able to connect directly to UM sources across the NAT. Sources send Topic Resolution advertisements containing their local IP addresses and ports, but receivers on the other side of the NAT cannot access those sources using those local addresses/ports. They must use alternate addresses/ports, which the NAT forwards according to the NAT's configuration.

The recommended method of transiting a NAT is using two DROs connected via a peer link. The UM components in one network are organized as one TRD and the components in the other network are organized as a separate TRD.

There is an alternative method of transiting a NAT with UM using an lbmrd. This method reduces latency but has significant restrictions.

DRO NAT: TCP Peer <-

The simplest way to transit a NAT is to configure the DROs with Router Element "<single-tcp>". One DRO is configured to be the initiator and the other is the acceptor.

Many NAT designs restrict which network can initiate TCP connections across the NAT. The initiator DRO should be placed on the side that supports outgoing connections and configured for the IP address and port that the NAT router recognizes and forwards to the acceptor DRO. This may or may not be the IP address and port of the actual host running the acceptor DRO; contact your network support group for details.

DRO NAT: UDP Peer <-

DROs are sometimes configured to add UDP-based data transport. The TCP link is still used for command and control, so all the points made above still apply. In addition, the NAT must allow forwarding of bi-directional UDP traffic without modifying the destination port numbers.

Here's the sequence of events UM uses to establish communication:

- The initiator DRO uses the configured address:port to initiate its TCP connection. Once established, the initiator sends the acceptor DRO the UDP port it's accepting data on.

- The acceptor DRO accepts the TCP connection, saves the UDP port of the initiator, and sends back its own UDP port. The acceptor also examines the established TCP connection to determine the IP address of the initiator, and saves that. But note that due to the NAT, the IP address it determines will typically not be the initiator's host IP. Rather, it's an IP address of the NAT device, and the NAT will forward packets to the actual host running the initiator.

- The initiator receives the acceptor's UDP port and saves it.

- Now, when the initiator wants to send UDP data to the acceptor, it uses the configured IP and the saved UDP port as the UDP packet's destination IP/port.

- When the acceptor wants to send UDP data to the initiator, it uses the saved IP and UDP port.

Thus, for both the initiator and the acceptor, the configured UDP port is used both to bind the UDP socket locally and as the UDP destination port by the remote DRO. Consequently, the NAT must not modify the destination port of UDP packets.

Some NAT designs do not expect this UDP behavior. Rather they perform a similar form of dynamic port allocation where a packet's source port is modified, and the networking software is expected to examine the source port and use it for outgoing packets. UM does not do that.

The NAT should be configured with static port mappings for all DRO peer links expected. If this is not practical, then UDP peer links may not be usable. Contact UM Support.
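The port exchange described in this section can be modelled in Python to show which destination each side ends up using. This is a sketch of the bookkeeping only, with no real sockets; the class and function names are invented for the example, and the IP addresses (documentation ranges) and port numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PeerPortal:
    udp_port: int             # configured UDP port, bound locally
    remote_ip: str = ""       # destination IP for outgoing UDP data
    remote_udp_port: int = 0  # destination port learned over the TCP link


def establish(initiator, acceptor, configured_ip, observed_source_ip):
    """Exchange UDP ports over the TCP link, following the sequence above."""
    # The initiator connects to the configured address and sends its UDP port.
    acceptor.remote_udp_port = initiator.udp_port
    # The acceptor saves the source IP it sees on the TCP connection. Behind a
    # NAT this is usually an address of the NAT device, not the initiator host.
    acceptor.remote_ip = observed_source_ip
    # The acceptor replies with its own UDP port; the initiator saves it and
    # keeps using the configured IP as the destination.
    initiator.remote_udp_port = acceptor.udp_port
    initiator.remote_ip = configured_ip


initiator = PeerPortal(udp_port=26000)
acceptor = PeerPortal(udp_port=27000)
establish(initiator, acceptor,
          configured_ip="198.51.100.10",     # address the initiator is configured with
          observed_source_ip="203.0.113.5")  # NAT address seen by the acceptor

initiator_dest = (initiator.remote_ip, initiator.remote_udp_port)
acceptor_dest = (acceptor.remote_ip, acceptor.remote_udp_port)
print(initiator_dest, acceptor_dest)
```

Each destination port equals the port the other side bound locally, so a NAT that rewrites destination ports would deliver the datagrams to a port nobody is listening on.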

DRO Monitoring <-

See Monitoring for an overview of monitoring an Ultra Messaging network.

It is important to the health and stability of a UM network to monitor the operation of DROs (if any). This monitoring should include real-time automated detection of problems that will produce a timely alert to operations staff.

Three types of data should be monitored:

- Log file.

- UM library statistics (context, source, receiver, wildcard receiver, event queue).

- Daemon statistics (similar data to the DRO Web Monitor).

For UM library stats and daemon stats, the monitoring messages contain an "application ID". For UM applications, this is a user-specified name intended to identify the individual component/instance, and is supplied by the option monitor_appid (context).

However, in the DRO, the application ID is NOT controlled by the "monitor-appid" option, and is instead used to identify not only the specific DRO, but also the portal within the DRO that is supplying the stats.

In the case of the DRO's daemon stats, the application ID is set to the Router Element "<name>" located within the Router Element "<daemon>". For example, a DRO configured with:

The daemon stats will have the application ID "dro1".

In the case of UM library stats (context, transport, event queue), the application ID is constructed as follows:

Gateway_Portal_portalname_portalcontext

Where portalname is set to the Router Element "<name>" located within the Router Element "<endpoint>", and portalcontext is set to either "rcv_ctx" or "src_ctx". For example, a DRO configured with:

The UM library stats will have the application ID "Gateway_Portal_TRD1_rcv_ctx" and "Gateway_Portal_TRD1_src_ctx".
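A monitoring collector typically needs to map these application IDs back to a portal. The Python sketch below builds and parses the library-stats IDs using the format given above; the helper names are hypothetical, and matching on the known context suffixes is a design choice made so that portal names containing underscores still parse.

```python
PREFIX = "Gateway_Portal_"
CONTEXTS = ("rcv_ctx", "src_ctx")


def library_app_ids(portal_name):
    """Application IDs used for the UM library stats of one endpoint portal."""
    return [f"{PREFIX}{portal_name}_{ctx}" for ctx in CONTEXTS]


def parse_library_app_id(app_id):
    """Return (portal_name, context), or None if this is not a portal ID."""
    if not app_id.startswith(PREFIX):
        return None
    rest = app_id[len(PREFIX):]
    for ctx in CONTEXTS:
        suffix = "_" + ctx
        if rest.endswith(suffix):
            return rest[:-len(suffix)], ctx
    return None


ids = library_app_ids("TRD1")
print(ids)
print(parse_library_app_id(ids[0]))
print(parse_library_app_id("dro1"))  # a daemon-stats ID is not a portal ID
```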

DRO Monitoring: Logs <-

Ideally, log file monitoring would support the following:

- Archive all log messages for all DROs for at least a week, preferably a month.

- Provide rapid access to operations staff to view the latest log messages from a DRO.

- Periodically scan the log file to detect errors and raise alerts to operations staff.

Regarding log file scanning, messages in the DRO's log file contain a severity indicator in square brackets. For example:

[2022-11-01 13:28:51.720796] [information] Gwd-9574-01: RecalcTrigger:LINK CAME UP:Version = 1:NodeId = 1

Informatica recommends alerting operations staff for messages of severity [WARNING], [ERROR], [CRITICAL], [ALERT], and [EMERGENCY].

It would also be useful to have a set of exceptions for specific messages you wish to ignore.
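A periodic scan with an exception list could look like the following Python sketch. It assumes the line layout of the example above (timestamp in brackets, severity in brackets, then a message ID followed by a colon) and compares severities case-insensitively, since the example line shows a lower-case severity. The second and third log lines and their message IDs are hypothetical.

```python
import re

ALERT_SEVERITIES = {"WARNING", "ERROR", "CRITICAL", "ALERT", "EMERGENCY"}

# Timestamp in brackets, severity in brackets, then the message text.
LINE_RE = re.compile(r"^\[(?P<ts>[^\]]+)\] \[(?P<sev>[^\]]+)\] (?P<msg>.*)$")


def scan(lines, ignore_ids=()):
    """Return (severity, message_id, text) for lines that should raise an alert."""
    found = []
    for line in lines:
        m = LINE_RE.match(line)
        if not m:
            continue
        severity = m["sev"].upper()
        if severity not in ALERT_SEVERITIES:
            continue
        msg_id = m["msg"].split(":", 1)[0]
        if msg_id in ignore_ids:
            continue  # a message the operator chose to ignore
        found.append((severity, msg_id, m["msg"]))
    return found


LOG = [
    "[2022-11-01 13:28:51.720796] [information] Gwd-9574-01: RecalcTrigger:LINK CAME UP:Version = 1:NodeId = 1",
    "[2022-11-01 13:29:10.000001] [warning] Gwd-0000-01: hypothetical warning text",
    "[2022-11-01 13:29:11.000002] [error] Gwd-0000-02: hypothetical error text",
]

all_alerts = scan(LOG)
filtered = scan(LOG, ignore_ids={"Gwd-0000-02"})
print(all_alerts)
print(filtered)
```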

There are many third party real-time log file analysis tools available. A discussion of possible tools is beyond the scope of UM documentation.

DRO Monitoring: UM Library Stats <-

The DRO communicates with applications using Ultra Messaging protocols, and therefore makes use of the UM library. It is just as important to monitor the UM library statistics for the DRO as it is for applications.

There are two data formats for UM library stats:

- Protobufs - recommended.

- CSV - deprecated. Informatica recommends migrating to protobufs.

For example, here is an excerpt from a sample DRO configuration file that shows how automatic monitoring is enabled:

Here is an excerpt from a sample "um.xml":

Notes:

- The Router Element "<format-module>" value "pb" selects the protobuf format and is available for the DRO in UM version 6.14 and beyond. Selecting this format implicitly enables the inclusion of the DRO's daemon stats (see below).

- For a list of possible protobuf messages for the DRO, see the "dro_mon.proto" file at Example dro_mon.proto.

- The Router Element "<monitor>" enables automatic monitoring and defines the statistics sampling period. In the above example, 600 seconds (10 minutes) is chosen somewhat arbitrarily. Shorter times produce more data, but not much additional benefit. However, UM networks with many thousands of applications may need a longer interval (perhaps 30 or 60 minutes) to maintain a reasonable load on the network and monitoring data storage.

- When automatic monitoring is enabled, it creates a context named "29west_statistics_context". It is configured with the "mon_ctx" template, which sets options for the monitoring data TRD. (Alternatively, you can configure the monitoring context using monitor_transport_opts (context).) When possible, Informatica recommends directing monitoring data to an administrative network, separate from the application data network. This prevents monitoring data from interfering with application data latency or throughput. In this example, the monitoring context is configured to use an interface matching 10.29.3.0/24.

- In this example, the monitoring data TRD uses Unicast UDP Topic Resolution. The lbmrd daemon is running on host 10.29.3.101, port 12001.

- The monitoring data is sent out via UM using the TCP transport.

- These settings were chosen to conform to the recommendations in Automatic Monitoring.
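The trade-off between sampling interval and monitoring load noted above can be estimated with simple arithmetic. The following is a rough sketch, assuming every monitored process reports once per sampling interval; the function name and the process count in the usage note are hypothetical, and the actual number of messages per report depends on what each process has to report.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def reports_per_day(interval_seconds, num_processes=1):
    """Sampling periods per day, summed over all monitored processes.

    Assumes each process reports once per interval; this counts
    sampling periods, not individual monitoring messages.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return num_processes * SECONDS_PER_DAY / interval_seconds
```

With a 600-second interval, a single process reports 144 times per day. For a hypothetical deployment of 5000 processes, moving from 600 seconds to 3600 seconds reduces the daily total from 720000 sampling periods to 120000.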

For a full demonstration of monitoring, see: https://github.com/UltraMessaging/mcs_demo

DRO Monitoring: Daemon Stats <-

The daemon statistics for the DRO represent a superset of the information presented on the DRO Web Monitor.

There are two data formats for the DRO to send its daemon stats:

- Protobufs - recommended.

- Binary - deprecated. Informatica recommends migrating to protobufs. For information on the deprecated binary formatted daemon stats, see DRO Binary Daemon Statistics.

The recommended way to enable DRO daemon stats is to enable UM library stats using the DRO's <monitor> element with <format-module module="pb">. For example, here is an excerpt from a DRO configuration file (file um.xml at https://github.com/UltraMessaging/mcs_demo):

    <monitor interval="600">
      <transport-module module="lbm"/>
      <format-module module="pb"/>
    </monitor>
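When many DRO configuration files are maintained, it can help to check mechanically that each one selects the protobuf format. The sketch below parses only the <monitor> fragment shown above using Python's standard XML parser. The helper names are hypothetical, and a real check would first need to locate the <monitor> element inside the full configuration file.

```python
import xml.etree.ElementTree as ET

# The <monitor> fragment from the excerpt above.
MONITOR_FRAGMENT = """
<monitor interval="600">
  <transport-module module="lbm"/>
  <format-module module="pb"/>
</monitor>
"""


def describe_monitor(xml_text):
    """Extract the interval and module names from a <monitor> fragment."""
    monitor = ET.fromstring(xml_text)
    if monitor.tag != "monitor":
        raise ValueError("expected a <monitor> element")
    interval = monitor.get("interval")
    transport = monitor.find("transport-module")
    fmt = monitor.find("format-module")
    return {
        "interval": int(interval) if interval is not None else None,
        "transport": transport.get("module") if transport is not None else None,
        "format": fmt.get("module") if fmt is not None else None,
    }


def uses_protobuf(xml_text):
    """True if the fragment selects the "pb" format module."""
    return describe_monitor(xml_text)["format"] == "pb"
```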

The protobufs format is accepted by the Monitoring Collector Service (MCS) and the "lbmmon" example applications: Example lbmmon.c and Example lbmmon.java.

For a list of possible protobuf messages for the DRO, see the "dro_mon.proto" file at Example dro_mon.proto.

For a full demonstration of monitoring, including DRO daemon stats, see: https://github.com/UltraMessaging/mcs_demo

See also DRO Monitoring: UM Library Stats.

DRO Web Monitor <-

- Note

- The DRO web monitor functionality is deprecated in favor of MCS. We do not plan to remove existing web monitor functionality, and will continue to support it in its current state. But we do not plan to enhance the web monitor in the future.

The built-in web monitor (configured in the tnwgd XML configuration file; see DRO Configuration Reference) provides statistics about the DRO and its portals, separated into receive statistics and send statistics. The Web Monitor provides a page for each endpoint and peer portal.

- Warning

- The DRO's web monitor is not designed to be a highly secure feature. Anybody with access to the network can access the web monitor pages.

Users are expected to prevent unauthorized access to the web monitor through normal firewalling methods. Users who are unable to limit access to a level consistent with their overall security needs should disable the DRO web monitor (using <web-monitor>). See Webmon Security for more information.

Main Page <-

This page displays general information about the DRO, and also provides the following links to more detailed statistical and configuration information.

- UM Router Configuration

- Displays the DRO XML configuration file used by this DRO.

- Portals

- Displays portal statistics and information, one portal per page. The Portals page allows you to link to any of the Peer or Endpoint portals configured for the DRO.

- Topology Info

- This links to a page that displays DRO network connectivity information from the perspective of this DRO.

- Path Info

- This lets you query and display a hop path that messages will take between any two TRDs.

On some platforms, the Main page may include a link (GNU malloc info) to a memory allocation display page that displays the following:

- arena

- Non-mmapped space allocated (bytes)

- ordblks

- Number of free chunks

- hblks

- Number of mmapped regions

- hblkhd

- Space allocated in mmapped regions (bytes)

- uordblks

- Total allocated space (bytes)

- fordblks

- Total free space (bytes)
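The fields above can be combined into a couple of derived figures that are easier to watch over time. This is only a sketch: the class and method names are hypothetical, the field meanings are taken from the list above, and treating "arena plus hblkhd" as the total obtained from the operating system is an assumption about how the allocator reports these values.

```python
from dataclasses import dataclass


@dataclass
class MallocInfo:
    arena: int     # non-mmapped space allocated (bytes)
    ordblks: int   # number of free chunks
    hblks: int     # number of mmapped regions
    hblkhd: int    # space allocated in mmapped regions (bytes)
    uordblks: int  # total allocated space (bytes)
    fordblks: int  # total free space (bytes)

    def total_from_system(self):
        """Assumed total: non-mmapped space plus mmapped space, in bytes."""
        return self.arena + self.hblkhd

    def free_fraction(self):
        """Share of allocated-plus-free space that is currently free."""
        managed = self.uordblks + self.fordblks
        return self.fordblks / managed if managed else 0.0
```

A free fraction that keeps growing while the total from the system does not shrink may indicate fragmentation, although interpreting these numbers depends on the platform's allocator.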

Endpoint Portal Page <-

The Endpoint Portal Page displays Receive and Send statistics for the selected endpoint portal. Receive statistics pertain to messages entering the portal from its connected TRD. Send statistics pertain to messages sent out to the TRD.

Click on any of the links at the top of the page to review configuration option values for the portal's UM topic resolution domain. The two columns provide different units of measure for a given statistic type, where the first column is typically in fragments or messages (depending on the statistic type), and the second column is in bytes.

Endpoint Portal name

- Domain ID

- The ID for the Topic Resolution Domain (TRD) to which this portal is connected.

- Portal Cost

- The cost value assigned to this portal.

- Local Interest

- Totals (listed below) for topics and patterns in this portal's interest list that originated from receivers in the immediately adjacent TRD.

- Topics

- Of the local interest total, the number of topics.

- PCRE patterns

- Of the local interest total, the number of wildcard patterns, using PCRE pattern matching.

- REGEX patterns

- Of the local interest total, the number of wildcard patterns, using REGEX pattern matching.

- Remote Interest

- Totals (listed below) for topics and patterns in this portal's interest list that originated from receivers beyond and downstream from the immediately adjacent TRD.

- Topics

- Of the remote interest total, the number of topics.

- PCRE patterns

- Of the remote interest total, the number of wildcard patterns, using PCRE pattern matching.

- REGEX patterns

- Of the remote interest total, the number of wildcard patterns, using REGEX pattern matching.

- Proxy Receivers

- The number of proxy receivers active in this portal.

- Receiver Topics

- The number of topics in which the other portals in the DRO have detected current interest and summarily propagated to this portal.

- Receiver PCRE patterns

- The number of wildcard patterns, using PCRE pattern matching, in which the other portals in the DRO have detected current interest and summarily propagated to this portal.

- Receiver REGEX patterns

- The number of wildcard patterns, using REGEX pattern matching, in which the other portals in the DRO have detected current interest and summarily propagated to this portal.

- Proxy Sources

- The number of proxy sources active in this portal.

Endpoint Receive Statistics

- Transport topic fragments/bytes received

- The total transport-based topic-related traffic of messages containing user data received by this portal from a TRD. The first column counts the number of fragments (or whole messages for messages that were not fragmented).

- Transport topic request fragments/bytes received

- Topic messages received that are request messages, i.e., messages sent via lbm_send_request*() rather than lbm_src_send*().

- Transport topic control msgs/bytes received

- The total transport-based topic-related traffic received by this portal from a TRD. These are supervisory messages, which include TSNIs, SRIs, etc. The first column counts the number of messages.

- Immediate topic fragments/bytes received

- The total number of Multicast Immediate Messaging (MIM) messages or message fragments, and bytes (second column), that have a topic, received at this portal.

- Immediate topic request fragments/bytes received

- Of the MIM topic messages received, this is the amount of those that are requests.

- Immediate topicless fragments/bytes received

- The total number of MIM messages or message fragments, and bytes (second column), with null topics, received by this portal.

- Immediate topicless request fragments/bytes received

- Of the MIM topicless messages received, this is the amount of those that are requests.

- Unicast data messages/bytes received

- The total number of Unicast Immediate Messaging (UIM) messages (and bytes, second column) containing user data, received by this portal.

- Duplicate unicast data messages/bytes dropped

- UIM data messages discarded because they were duplicates of messages already received.

- Unicast data messages/bytes received with no stream info

- UIM data messages discarded because they were from an earlier, incompatible version of UM. This counter should stay at 0; otherwise, contact UM Support.

- Unicast data messages/bytes received with no route to destination

- UIM data messages that are on a wrong path, possibly due to a route recalculation. This counter should stay at 0, though it may increment by a few messages at the time of a topology change.

- Unicast control messages/bytes received

- The total number of Unicast Immediate Messaging (UIM) supervisory (non-data) messages (and bytes, second column) received by this portal.

- Duplicate unicast control messages/bytes dropped

- Supervisory UIMs dropped because they were duplicates of messages already received.

- Unicast control messages/bytes received with no stream info

- Supervisory UIMs dropped because they were from an earlier, incompatible version of UM. This counter should stay at 0; otherwise, contact UM Support.

- Unicast control messages/bytes received with no route to destination

- Supervisory UIM messages that are on a wrong path, possibly due to a route recalculation. This counter should stay at 0, though it may increment by a few messages at the time of a topology change.
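Several of the receive statistics above are described as counters that should stay at 0. The following sketch shows one way to check them, assuming the statistics have already been collected into a dictionary that maps each counter name to a (messages, bytes) pair, mirroring the two columns on the page. How the dictionary is populated is outside the scope of this sketch, and the function names are hypothetical.

```python
# Counter names copied from the receive statistics list above. Each value
# in a stats dict is a (messages, bytes) pair, one per web monitor column.
EXPECT_ZERO = (
    "Unicast data messages/bytes received with no stream info",
    "Unicast data messages/bytes received with no route to destination",
    "Unicast control messages/bytes received with no stream info",
    "Unicast control messages/bytes received with no route to destination",
)


def nonzero_counters(stats):
    """Return the expect-zero counters whose message count is above 0."""
    return {
        name: stats[name]
        for name in EXPECT_ZERO
        if stats.get(name, (0, 0))[0] > 0
    }


def counter_growth(previous, current):
    """Return how much each expect-zero counter grew between two snapshots."""
    growth = {}
    for name in EXPECT_ZERO:
        before = previous.get(name, (0, 0))[0]
        after = current.get(name, (0, 0))[0]
        if after > before:
            growth[name] = after - before
    return growth
```

Comparing successive snapshots with counter_growth() makes it possible to tolerate the small increments that may occur at a topology change while still flagging a counter that keeps climbing.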

Endpoint Send Statistics

- Transport topic fragments/bytes forwarded

- The total transport-based topic-related traffic forwarded to this portal from other portals in this DRO. This could include user messages, TSNIs, SRIs, etc. The first column counts the number of fragments (or whole messages for messages that were not fragmented).

- Transport topic fragments/bytes sent

- Of the transport topic traffic forwarded, this is the amount of traffic sent out to the TRD.

- Transport topic request fragments/bytes sent

- Of the messages sent, this is the amount of those that are requests.

- Duplicate transport topic fragments/bytes dropped

- Of the messages forwarded to this portal, this is the total of those that were discarded because they were duplicates of messages already received.

- Transport topic fragments/bytes dropped due to blocking

- Of the messages forwarded to this portal, this is the amount of those that were discarded because they were blocked from sending, and were unable to be buffered. Message rates on other portals probably exceeded the rate controller limit on this portal.

- Transport topic fragments/bytes dropped due to error

- Of the messages forwarded to this portal, this is the total of those that were discarded due to an application or network connection failure.

- Transport topic fragments/bytes dropped due to fragment size error

- Of the messages forwarded to this portal, this is the total of those that were discarded, possibly because of a configuration error. If this count is not at or near 0, verify that the maximum datagram size for all transports is the same throughout the network.

- Immediate topic fragments/bytes forwarded

- The total number of Multicast Immediate Messaging (MIM) messages or message fragments, and bytes (second column), forwarded to this portal from other portals in this DRO.

- Immediate topic fragments/bytes sent

- Of the MIM topic messages forwarded to this portal, this is the amount of traffic sent out to the TRD.

- Immediate topic request fragments sent

- Of the MIM topic messages sent, this is the amount of those that are requests.

- Immediate topic fragments/bytes dropped due to blocking

- Of the MIM topic messages forwarded to this portal, this is the amount of those that were discarded because they were blocked from sending, and were unable to be buffered. Message rates on other portals probably exceeded the rate controller limit on this portal.

- Immediate topic fragments/bytes dropped due to error

- Of the MIM topic messages forwarded to this portal, those that were discarded due to an application or network connection failure.

- Immediate topic fragments/bytes dropped due to fragment size error

- Of the MIM topic messages forwarded to this portal, those that were dropped, possibly because of a configuration error. If this count is not at or near 0, verify that the maximum datagram size for all transports is the same throughout the network.

- Immediate topicless fragments/bytes forwarded

- The total number of Multicast Immediate Messaging (MIM) messages or message fragments, and bytes (second column), with null topics, forwarded to this portal from other portals in this DRO.

- Immediate topicless fragments/bytes sent

- Of the MIM topicless messages forwarded to this portal, this is the amount of traffic sent out to the TRD.

- Immediate topicless request fragments sent

- Of the MIM topicless messages sent, this is the amount of those that are requests.

- Immediate topicless fragments/bytes dropped due to blocking