Ultra Messaging® Concepts

Copyright © 2004 - 2014 Informatica Corporation

March 2014

Informatica Ultra Messaging

Version 5.3


Copyright (c) 1998-2014 Informatica Corporation. All rights reserved.

This software and documentation contain proprietary information of Informatica Corporation and are provided under a license agreement containing restrictions on use and disclosure and are also protected by copyright law. Reverse engineering of the software is prohibited. No part of this document may be reproduced or transmitted in any form, by any means (electronic, photocopying, recording or otherwise) without prior consent of Informatica Corporation. This Software may be protected by U.S. and/or international Patents and other Patents Pending.

Use, duplication, or disclosure of the Software by the U.S. Government is subject to the restrictions set forth in the applicable software license agreement and as provided in DFARS 227.7202-1(a) and 227.7702-3(a) (1995), DFARS 252.227-7013(c)(1)(ii) (OCT 1988), FAR 12.212(a) (1995), FAR 52.227-19, or FAR 52.227-14 (ALT III), as applicable.

The information in this product or documentation is subject to change without notice. If you find any problems in this product or documentation, please report them to us in writing.

Informatica, Informatica Platform, Informatica Data Services, PowerCenter, PowerCenterRT, PowerCenter Connect, PowerCenter Data Analyzer, PowerExchange, PowerMart, Metadata Manager, Informatica Data Quality, Informatica Data Explorer, Informatica B2B Data Transformation, Informatica B2B Data Exchange Informatica On Demand, Informatica Identity Resolution, Informatica Application Information Lifecycle Management, Informatica Complex Event Processing, Ultra Messaging and Informatica Master Data Management are trademarks or registered trademarks of Informatica Corporation in the United States and in jurisdictions throughout the world. All other company and product names may be trade names or trademarks of their respective owners.

Portions of this software and/or documentation are subject to copyright held by third parties, including without limitation: Copyright DataDirect Technologies. All rights reserved. Copyright (c) Sun Microsystems. All rights reserved. Copyright (c) RSA Security Inc. All Rights Reserved. Copyright (c) Ordinal Technology Corp. All rights reserved.Copyright (c) Aandacht c.v. All rights reserved. Copyright Genivia, Inc. All rights reserved. Copyright Isomorphic Software. All rights reserved. Copyright (c) Meta Integration Technology, Inc. All rights reserved. Copyright (c) Intalio. All rights reserved. Copyright (c) Oracle. All rights reserved. Copyright (c) Adobe Systems Incorporated. All rights reserved. Copyright (c) DataArt, Inc. All rights reserved. Copyright (c) ComponentSource. All rights reserved. Copyright (c) Microsoft Corporation. All rights reserved. Copyright (c) Rogue Wave Software, Inc. All rights reserved. Copyright (c) Teradata Corporation. All rights reserved. Copyright (c) Yahoo! Inc. All rights reserved. Copyright (c) Glyph & Cog, LLC. All rights reserved. Copyright (c) Thinkmap, Inc. All rights reserved. Copyright (c) Clearpace Software Limited. All rights reserved. Copyright (c) Information Builders, Inc. All rights reserved. Copyright (c) OSS Nokalva, Inc. All rights reserved. Copyright Edifecs, Inc. All rights reserved. Copyright Cleo Communications, Inc. All rights reserved. Copyright (c) International Organization for Standardization 1986. All rights reserved. Copyright (c) ej-technologies GmbH. All rights reserved. Copyright (c) Jaspersoft Corporation. All rights reserved. Copyright (c) is International Business Machines Corporation. All rights reserved. Copyright (c) yWorks GmbH. All rights reserved. Copyright (c) Lucent Technologies. All rights reserved. Copyright (c) University of Toronto. All rights reserved. Copyright (c) Daniel Veillard. All rights reserved. Copyright (c) Unicode, Inc. Copyright IBM Corp. All rights reserved. 
Copyright (c) MicroQuill Software Publishing, Inc. All rights reserved. Copyright (c) PassMark Software Pty Ltd. All rights reserved. Copyright (c) LogiXML, Inc. All rights reserved. Copyright (c) 2003-2010 Lorenzi Davide, All rights reserved. Copyright (c) Red Hat, Inc. All rights reserved. Copyright (c) The Board of Trustees of the Leland Stanford Junior University. All rights reserved. Copyright (c) EMC Corporation. All rights reserved. Copyright (c) Flexera Software. All rights reserved. Copyright (c) Jinfonet Software. All rights reserved. Copyright (c) Apple Inc. All rights reserved. Copyright (c) Telerik Inc. All rights reserved.

This product includes software developed by the Apache Software Foundation (http://www.apache.org/), and/or other software which is licensed under various versions of the Apache License (the "License"). You may obtain a copy of these Licenses at http://www.apache.org/licenses/. Unless required by applicable law or agreed to in writing, software distributed under these Licenses is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the Licenses for the specific language governing permissions and limitations under the Licenses.

This product includes software which was developed by Mozilla (http://www.mozilla.org/), software copyright The JBoss Group, LLC, all rights reserved; software copyright (c) 1999-2006 by Bruno Lowagie and Paulo Soares and other software which is licensed under various versions of the GNU Lesser General Public License Agreement, which may be found at http://www.gnu.org/licenses/lgpl.html. The materials are provided free of charge by Informatica, "as-is", without warranty of any kind, either express or implied, including but not limited to the implied warranties of merchantability and fitness for a particular purpose.

The product includes ACE(TM) and TAO(TM) software copyrighted by Douglas C. Schmidt and his research group at Washington University, University of California, Irvine, and Vanderbilt University, Copyright (c) 1993-2006, all rights reserved.

This product includes software developed by the OpenSSL Project for use in the OpenSSL Toolkit (copyright The OpenSSL Project. All Rights Reserved) and redistribution of this software is subject to terms available at http://www.openssl.org and http://www.openssl.org/source/license.html.

This product includes Curl software which is Copyright 1996-2007, Daniel Stenberg, <daniel@haxx.se>. All Rights Reserved. Permissions and limitations regarding this software are subject to terms available at http://curl.haxx.se/docs/copyright.html. Permission to use, copy, modify, and distribute this software for any purpose with or without fee is hereby granted, provided that the above copyright notice and this permission notice appear in all copies.

The product includes software copyright 2001-2005 (c) MetaStuff, Ltd. All Rights Reserved. Permissions and limitations regarding this software are subject to terms available at http://www.dom4j.org/license.html.

The product includes software copyright (c) 2004-2007, The Dojo Foundation. All Rights Reserved. Permissions and limitations regarding this software are subject to terms available at http://dojotoolkit.org/license.

This product includes ICU software which is copyright International Business Machines Corporation and others. All rights reserved. Permissions and limitations regarding this software are subject to terms available at http://source.icu-project.org/repos/icu/icu/trunk/license.html.

This product includes software copyright (c) 1996-2006 Per Bothner. All rights reserved. Your right to use such materials is set forth in the license which may be found at http://www.gnu.org/software/kawa/Software-License.html.

This product includes OSSP UUID software which is Copyright (c) 2002 Ralf S. Engelschall, Copyright (c) 2002 The OSSP Project Copyright (c) 2002 Cable & Wireless Deutschland. Permissions and limitations regarding this software are subject to terms available at http://www.opensource.org/licenses/mit-license.php.

This product includes software developed by Boost (http://www.boost.org/) or under the Boost software license. Permissions and limitations regarding this software are subject to terms available at http://www.boost.org/LICENSE_1_0.txt.

This product includes software copyright (c) 1997-2007 University of Cambridge. Permissions and limitations regarding this software are subject to terms available at http://www.pcre.org/license.txt.

This product includes software copyright (c) 2007 The Eclipse Foundation. All Rights Reserved. Permissions and limitations regarding this software are subject to terms available at http://www.eclipse.org/org/documents/epl-v10.php.

This product includes software licensed under the terms at http://www.tcl.tk/software/tcltk/license.html, http://www.bosrup.com/web/overlib/?License, http://www.stlport.org/doc/ license.html, http:// asm.ow2.org/license.html, http://www.cryptix.org/LICENSE.TXT, http://hsqldb.org/web/hsqlLicense.html, http://httpunit.sourceforge.net/doc/ license.html, http://jung.sourceforge.net/license.txt , http://www.gzip.org/zlib/zlib_license.html, http://www.openldap.org/software/release/license.html, http://www.libssh2.org, http://slf4j.org/license.html, http://www.sente.ch/software/OpenSourceLicense.html, http://fusesource.com/downloads/license-agreements/fuse-message-broker-v-5-3- license-agreement; http://antlr.org/license.html; http://aopalliance.sourceforge.net/; http://www.bouncycastle.org/licence.html; http://www.jgraph.com/jgraphdownload.html; http://www.jcraft.com/jsch/LICENSE.txt; http://jotm.objectweb.org/bsd_license.html; . http://www.w3.org/Consortium/Legal/2002/copyright-software-20021231; http://www.slf4j.org/license.html; http://nanoxml.sourceforge.net/orig/copyright.html; http://www.json.org/license.html; http://forge.ow2.org/projects/javaservice/, http://www.postgresql.org/about/licence.html, http://www.sqlite.org/copyright.html, http://www.tcl.tk/software/tcltk/license.html, http://www.jaxen.org/faq.html, http://www.jdom.org/docs/faq.html, http://www.slf4j.org/license.html; http://www.iodbc.org/dataspace/iodbc/wiki/iODBC/License; http://www.keplerproject.org/md5/license.html; http://www.toedter.com/en/jcalendar/license.html; http://www.edankert.com/bounce/index.html; http://www.net-snmp.org/about/license.html; http://www.openmdx.org/#FAQ; http://www.php.net/license/3_01.txt; http://srp.stanford.edu/license.txt; http://www.schneier.com/blowfish.html; http://www.jmock.org/license.html; http://xsom.java.net; and http://benalman.com/about/license/; https://github.com/CreateJS/EaselJS/blob/master/src/easeljs/display/Bitmap.js; 
http://www.h2database.com/html/license.html#summary; and http://jsoncpp.sourceforge.net/LICENSE.

This product includes software licensed under the Academic Free License (http://www.opensource.org/licenses/afl-3.0.php), the Common Development and Distribution License (http://www.opensource.org/licenses/cddl1.php), the Common Public License (http://www.opensource.org/licenses/cpl1.0.php), the Sun Binary Code License Agreement Supplemental License Terms, the BSD License (http://www.opensource.org/licenses/bsd-license.php), the MIT License (http://www.opensource.org/licenses/mit-license.php) and the Artistic License (http://www.opensource.org/licenses/artistic-license-1.0).

This product includes software copyright (c) 2003-2006 Joe Walnes, 2006-2007 XStream Committers. All rights reserved. Permissions and limitations regarding this software are subject to terms available at http://xstream.codehaus.org/license.html. This product includes software developed by the Indiana University Extreme! Lab. For further information please visit http://www.extreme.indiana.edu/.

This Software is protected by U.S. Patent Numbers 5,794,246; 6,014,670; 6,016,501; 6,029,178; 6,032,158; 6,035,307; 6,044,374; 6,092,086; 6,208,990; 6,339,775; 6,640,226; 6,789,096; 6,820,077; 6,823,373; 6,850,947; 6,895,471; 7,117,215; 7,162,643; 7,243,110, 7,254,590; 7,281,001; 7,421,458; 7,496,588; 7,523,121; 7,584,422; 7676516; 7,720,842; 7,721,270; and 7,774,791, international Patents and other Patents Pending.

DISCLAIMER: Informatica Corporation provides this documentation "as is" without warranty of any kind, either express or implied, including, but not limited to, the implied warranties of noninfringement, merchantability, or use for a particular purpose. Informatica Corporation does not warrant that this software or documentation is error free. The information provided in this software or documentation may include technical inaccuracies or typographical errors. The information in this software and documentation is subject to change at any time without notice.

NOTICES

This Informatica product (the "Software") includes certain drivers (the "DataDirect Drivers") from DataDirect Technologies, an operating company of Progress Software Corporation ("DataDirect") which are subject to the following terms and conditions:

1. THE DATADIRECT DRIVERS ARE PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NON-INFRINGEMENT.

2. IN NO EVENT WILL DATADIRECT OR ITS THIRD PARTY SUPPLIERS BE LIABLE TO THE END-USER CUSTOMER FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, CONSEQUENTIAL OR OTHER DAMAGES ARISING OUT OF THE USE OF THE ODBC DRIVERS, WHETHER OR NOT INFORMED OF THE POSSIBILITIES OF DAMAGES IN ADVANCE. THESE LIMITATIONS APPLY TO ALL CAUSES OF ACTION, INCLUDING, WITHOUT LIMITATION, BREACH OF CONTRACT, BREACH OF WARRANTY, NEGLIGENCE, STRICT LIABILITY, MISREPRESENTATION AND OTHER TORTS.

- Table of Contents

- 1. Introduction

- 2. Fundamental Concepts

- 3. UM Objects

- 4. Architecture

- 5. UMS Features

- 6. Monitoring UMS

- 7. Manpage for lbmrd

This document introduces important fundamental design concepts behind Ultra Messaging® high performance message streaming. Understanding these concepts is important to software developers designing and writing application code that uses the Ultra Messaging Application Programming Interface (API). For information about Parallel Persistence® and queuing, see The Ultra Messaging Guide for Persistence and Queuing.

1. Introduction

Ultra Messaging comprises a software layer, supplied in the form of a dynamic library (shared object), plus a daemon that implements persistence and queuing capabilities. These components provide applications with message delivery functionality that adds considerable value to the basic networking services contained in the host operating system. Applications access Ultra Messaging features through the Ultra Messaging Application Programming Interface (API).

There are four APIs: the UM C API, the UM Java API, the UM .NET API and the JMS API. These APIs are very similar, and for the most part this document concentrates on the C API. The translation from C functions to Java or .NET methods should be reasonably straightforward; see the UM Quick Start Guide for sample applications in Java and .NET.

The three most important design goals of Ultra Messaging are to minimize message latency (the time that a given message spends "in transit"), maximize throughput, and ensure delivery of all messages under a wide variety of operational and failure scenarios. Ultra Messaging achieves these goals by avoiding, wherever possible, the duplication of services that the underlying network already provides. Instead of implementing special messaging servers and daemons to receive and re-transmit messages, Ultra Messaging routes messages primarily with the network infrastructure at wire speed. Placing little or nothing in between the sender and receiver is an important and unique design principle of Ultra Messaging.

2. Fundamental Concepts

A UM application can function either as a source or a receiver. A source application sends messages, and a receiver application receives them. (It is also common for an application to function as both source and receiver; we separate the concepts for organizational purposes.)

This section discusses the following concepts.

2.1. Topic Structure and Management

UM offers the Publish/Subscribe model for messaging ("Pub/Sub"), whereby one or more receiver programs express interest in a topic, and one or more source programs send to that topic. So, a topic can be thought of as a data stream that can have multiple producers and multiple consumers. One of the functions of the messaging layer is to make sure that all messages sent to a given topic are distributed to all receivers listening to that topic. UM does this through an automatic process known as topic resolution.

A topic is just an arbitrary string. For example:

Deals

Market/US/DJIA/Sym1

It is not unusual for an application system to have many thousands of topics, perhaps even more than a million, with each one carrying a very specific range of information (e.g. quotes for a single stock symbol).

It is also possible to configure receiving programs to match multiple topics using wildcards. UM uses powerful regular expression pattern matching to allow applications to match topics in a very flexible way. At the present time, messages cannot be sent to wildcarded topic names. See Wildcard Receiver.
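The idea of matching topic names against a pattern can be sketched in a few lines of C. The sketch below is an illustration only: it assumes POSIX extended regular expressions from regex.h, which may differ in syntax from the pattern engine UM uses, and the helper name topic_matches() is hypothetical and not part of the UM API.

```c
#include <assert.h>
#include <regex.h>
#include <stddef.h>

/* Hypothetical helper (not a UM function): returns 1 if `topic` matches
 * the POSIX extended regular expression `pattern`, 0 if it does not,
 * and -1 if the pattern cannot be compiled. */
int topic_matches(const char *pattern, const char *topic)
{
    regex_t re;
    int rc;

    assert(pattern != NULL && topic != NULL);

    /* REG_NOSUB: only a yes/no answer is needed, not match offsets. */
    if (regcomp(&re, pattern, REG_EXTENDED | REG_NOSUB) != 0) {
        return -1;
    }
    rc = regexec(&re, topic, 0, NULL, 0);
    regfree(&re);
    return rc == 0 ? 1 : 0;
}
```

With this helper, the pattern ^Market/US/.*$ would match the topic Market/US/DJIA/Sym1 but not the topic Deals.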

2.2. Persistence

UMP - which contains the Ultra Messaging Streaming Edition (UMS) functionality - includes a component known as the persistent store, which provides stable storage (disk or memory) of message streams. UMP delivers a persisted message stream to receiving applications with no additional latency in the vast majority of cases. This offers the functionality of durable subscriptions and confirmed message delivery. Ultra Messaging streaming applications build and run with the UMP persistence feature without modification. See The Ultra Messaging Guide for Persistence and Queuing for more information.

2.3. Queuing

UMQ - which contains both the Ultra Messaging Streaming Edition (UMS) functionality and the Ultra Messaging Persistence Edition (UMP) functionality - includes message queuing capabilities that allow sources to submit messages asynchronously to a queue and permit receivers to retrieve messages from a queue in an entirely different asynchronous manner. UMQ also supports Once and Only Once (OAOO) delivery and Application Sets that allow you to load balance processing or support multiple processing purposes for single topics. See The Ultra Messaging Guide for Persistence and Queuing for more information.

2.4. Late Join

In many applications, a new receiver may be interested in messages that were sent before it existed. UM provides a late join feature that allows a new receiver to join a group of others already listening to a source. Without the late join feature, the joining receiver would only receive messages sent after the time it joined. With late join, the source stores sent messages according to its Late Join configuration options so a joining receiver can receive any of these messages that were sent before it joined the group. See Using Late Join.
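As a rough mental model of source-side retention, the following sketch keeps the most recent messages in a fixed-size ring so that a joining receiver can ask for messages by sequence number. All names here (retention_buf_t, retention_store() and so on) and the limits RETAIN_SLOTS and MSG_MAX are hypothetical. UM's actual retention behavior is governed by its Late Join configuration options and is not described by this code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define RETAIN_SLOTS 4   /* illustrative retention limit (assumption) */
#define MSG_MAX 32       /* illustrative maximum payload size (assumption) */

typedef struct {
    char data[RETAIN_SLOTS][MSG_MAX];
    unsigned long next_seq;   /* sequence number of the next message sent */
} retention_buf_t;

void retention_init(retention_buf_t *rb)
{
    assert(rb != NULL);
    memset(rb, 0, sizeof(*rb));
}

/* Record a sent message, overwriting the oldest one when the ring is
 * full. Returns the sequence number assigned to the message. */
unsigned long retention_store(retention_buf_t *rb, const char *msg)
{
    unsigned long seq;

    assert(rb != NULL && msg != NULL);
    seq = rb->next_seq++;
    strncpy(rb->data[seq % RETAIN_SLOTS], msg, MSG_MAX - 1);
    rb->data[seq % RETAIN_SLOTS][MSG_MAX - 1] = '\0';
    return seq;
}

/* Oldest sequence number that is still retained. */
unsigned long retention_oldest(const retention_buf_t *rb)
{
    assert(rb != NULL);
    return rb->next_seq > RETAIN_SLOTS ? rb->next_seq - RETAIN_SLOTS : 0;
}

/* Look up a retained message. Returns NULL if the message was never
 * sent or has already been overwritten. */
const char *retention_get(const retention_buf_t *rb, unsigned long seq)
{
    assert(rb != NULL);
    if (seq >= rb->next_seq || seq < retention_oldest(rb)) {
        return NULL;
    }
    return rb->data[seq % RETAIN_SLOTS];
}
```

A late-joining receiver in this model would request every sequence number from retention_oldest() up to the current one. Anything older is simply unavailable, which mirrors the point that only messages retained by the source can be recovered.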

2.5. Request/Response

UM also offers a Request/Response messaging model. A sending application (the requester) sends a message to a topic. Every receiving application listening to that topic gets a copy of the request. One or more of those receiving applications (the responders) can then send one or more responses back to the original requester. UM sends the request message via the normal pub/sub method, whereas UM delivers the response message directly to the requester.

An important aspect of UM's Request/Response model is that it allows the application to keep track of which request corresponds to a given response. Due to the asynchronous nature of UM requests, any number of requests can be outstanding, and as the responses come in, they can be matched to their corresponding requests.
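The bookkeeping behind matching responses to outstanding requests can be pictured as a small table keyed by a request identifier. The sketch below is a simplified illustration under stated assumptions: the types and functions (req_table_t, req_register(), req_on_response(), req_complete()) are hypothetical, and the UM API performs this correlation for the application rather than requiring code like this.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_OUTSTANDING 8   /* illustrative limit (assumption) */

typedef struct {
    unsigned int id;    /* request identifier; 0 marks a free slot */
    void *clientd;      /* application state attached to the request */
    int responses;      /* number of responses seen so far */
} pending_req_t;

typedef struct {
    pending_req_t slots[MAX_OUTSTANDING];
    unsigned int next_id;
} req_table_t;

void req_table_init(req_table_t *t)
{
    assert(t != NULL);
    memset(t, 0, sizeof(*t));
    t->next_id = 1;
}

/* Track a new outstanding request. Returns its identifier, or 0 if the
 * table is full. Identifier reuse after wrap-around is not handled. */
unsigned int req_register(req_table_t *t, void *clientd)
{
    size_t i;

    assert(t != NULL);
    for (i = 0; i < MAX_OUTSTANDING; i++) {
        if (t->slots[i].id == 0) {
            if (t->next_id == 0) {
                t->next_id = 1;   /* never hand out the "free" marker */
            }
            t->slots[i].id = t->next_id++;
            t->slots[i].clientd = clientd;
            t->slots[i].responses = 0;
            return t->slots[i].id;
        }
    }
    return 0;
}

/* Match an incoming response to its request and count it. Returns the
 * matching entry, or NULL if the identifier is not outstanding. */
pending_req_t *req_on_response(req_table_t *t, unsigned int id)
{
    size_t i;

    assert(t != NULL);
    for (i = 0; id != 0 && i < MAX_OUTSTANDING; i++) {
        if (t->slots[i].id == id) {
            t->slots[i].responses++;
            return &t->slots[i];
        }
    }
    return NULL;
}

/* Stop tracking a request. Returns the number of responses it
 * received, or -1 if the identifier is not outstanding. */
int req_complete(req_table_t *t, unsigned int id)
{
    size_t i;

    assert(t != NULL);
    for (i = 0; id != 0 && i < MAX_OUTSTANDING; i++) {
        if (t->slots[i].id == id) {
            t->slots[i].id = 0;
            return t->slots[i].responses;
        }
    }
    return -1;
}
```

Because each entry is independent, any number of requests (up to the table size in this sketch) can be outstanding at once, and responses can arrive in any order.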

Request/Response can be used in many ways and is often used during the initialization of UM receiver objects. When an application starts a receiver, it can issue a request on the topic the receiver is interested in. Source objects for the topic can respond and begin publishing data. This method prevents the UM source objects from publishing to a topic without subscribers.

Be careful not to be confused by the sending/receiving terminology. Any application can send a request, including one that creates and manages UM receiver objects. And any application can receive and respond to a request, including one that creates and manages UM source objects.

2.6. Transports

A source application uses a UM transport to send messages to a receiver application. A UM transport is built on top of a standard IP protocol. The different UM transport types have different tradeoffs in terms of latency, scalability, throughput, bandwidth sharing, and flexibility. The sending application chooses the transport type that is most appropriate for the data being sent, at the topic level. A programmer might choose different transport types for different topics within the same application.

A UM sending application can make use of very many topics (over a million). UM maps those topics onto a much smaller number of transport sessions. A transport session can be thought of as a specific instance of a transport type. A given transport session might carry a single topic, or might carry hundreds of thousands of topics. A receiving application may express interest in a small set of those topics, in which case UM will join the transport session, receiving messages for all topics carried on that transport session. UM will then discard any messages for topics that the application is not interested in. This user-space filtering does consume system resources (primarily CPU and bandwidth), and can be minimized by carefully mapping topics onto transport sessions according to receiving application interest.
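The cost of user-space filtering is easier to see with a toy model. In the sketch below, every message arriving on a transport session is compared against the topics the application subscribed to, and non-matching messages are counted and dropped. The names demo_filter_t and filter_on_message() are hypothetical, and the linear string comparison is an assumption chosen for brevity, not a description of UM's internal lookup.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct {
    const char *const *interest;   /* topics the application subscribed to */
    size_t n_interest;
    unsigned long delivered;       /* messages passed to the application */
    unsigned long discarded;       /* messages received but not wanted */
} demo_filter_t;

/* Called for every message arriving on the transport session.
 * Returns 1 if the message is delivered, 0 if it is discarded. */
int filter_on_message(demo_filter_t *f, const char *topic)
{
    size_t i;

    assert(f != NULL && topic != NULL);
    for (i = 0; i < f->n_interest; i++) {
        if (strcmp(f->interest[i], topic) == 0) {
            f->delivered++;
            return 1;
        }
    }
    /* The message still consumed bandwidth and CPU before being dropped. */
    f->discarded++;
    return 0;
}
```

A high discarded count relative to delivered in a model like this suggests that the topics sharing the transport session do not match the receiver's interest well.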

Note: Non-multicast UM transport types can also use source-side filtering to decrease user-space filtering on the receiving side by doing the filtering on the sending side. However, while this might sound attractive at first glance, be aware that system resources consumed on the source side affect all receivers, and that the filtering for multiple receivers must be done serially, whereas letting the receivers do the filtering allows that filtering to be done in parallel, only affecting those receivers that need the filtering.

See Transport Objects.

2.6.1. Multi-Transport Threads

Part of UM's design is a single-threaded model for message data delivery, which reduces latency in the receiving CPU. However, UM can also distribute data delivery across multiple CPUs by using a receiving thread pool. Receivers created with the configuration option use_transport_thread set to 1 use a thread from the thread pool instead of the context thread. The option receive_thread_pool_size controls the pool size.

As receivers discover new sources through Topic Resolution, UM assigns the network sockets created for the receivers to receive data to either the context thread (default) or to a thread from the pool if use_transport_thread is set for the receiver. It is important to understand that thread assignment occurs at the socket level - not the transport level. Transports aggregated on to the same network socket use the same thread.

UM distributes data from different sockets to different threads allowing better process distribution and higher aggregate throughput. Distributing transports across threads also ensures that activity on each transport has no impact on transports assigned to other threads leading to lower latencies in some traffic patterns, e.g. heavy loss conditions.

The following lists restrictions to using multi-transport threads.

Only LBT-RM, LBT-RU, TCP and TCP-LB transport types may be distributed to threads.

Multi-Transport threads are not supported under sequential mode.

UM processes sources using the same transport socket, e.g. multicast address and port, on the same thread (regardless of the use_transport_thread setting). To leverage threading of different sources, assign each source to a different transport destination, e.g. multicast address/port.

Hot failover sources using LBT-RM on the same topic must not be distributed across threads because they must share the same multicast address and port.

Hot failover sources using other transport types may not be distributed across threads and must use the context thread.

Each transport thread has its own Unicast Listener (request) port. Ultra Messaging recommends that you expand the range request_tcp_port_low - request_tcp_port_high to a larger range when using transport threads. When late join is occurring, UM creates a TCP connection from the transport thread to the source.

Multi-transport threads are not recommended for use over the UM Gateway.

Multi-Transport Threads do not support persistent stores (UMP) or persistent receivers.

Multi-Transport Threads do not support queues (UMQ) or queuing receivers.

Multi-Transport Threads are not compatible with UMDS Server or UMCache.

2.7. Event Delivery

There are many different events that UM may want to deliver to the application. Many events carry data with them (e.g. received messages); some do not (e.g. end-of-stream events). Some examples of UM events:

A received message on a topic that the application has expressed interest in.

A timer expiring. Applications can schedule timers to expire in a desired number of milliseconds (although the OS may not deliver them with millisecond precision).

An application-managed file descriptor event. The application can register its own file descriptors with UM to be monitored for state changes (readable, writable, error, etc).

New source notification. UM can inform the application when sources are discovered by topic resolution.

Receiver loss. UM can inform the application when a data gap is detected that could not be recovered through the normal retransmission mechanism.

End of Stream. UM can inform a receiving application when a data stream (transport session) has terminated.

UM delivers events to the application by callbacks. The application explicitly gives UM a pointer to one of its functions to be the handler for a particular event, and UM calls that function to deliver the event, passing it the parameters that the application requires to process the event. In particular, the last parameter of each callback type is a client data pointer (clientdp). This pointer can be used at the application's discretion for any purpose. Its value is specified by the application when the callback function is identified to UM (typically when UM objects are created), and that same value is passed back to the application when the callback function is called.
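The callback-plus-client-data pattern is a common C idiom and can be shown without any UM headers. In the sketch below, every type and function (demo_event_t, demo_cb_proc, demo_register(), demo_deliver(), on_message()) is hypothetical and stands in for the corresponding UM machinery. Only the shape of the pattern, a function pointer registered together with an opaque pointer that is handed back unchanged, reflects the description above.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical event and callback types, for illustration only. */
typedef struct {
    const char *topic;
    const char *data;
    size_t len;
} demo_event_t;

/* The last parameter is the client data pointer supplied at registration. */
typedef int (*demo_cb_proc)(const demo_event_t *ev, void *clientd);

typedef struct {
    demo_cb_proc proc;
    void *clientd;
} demo_registration_t;

/* Library side: remember the handler and the pointer to pass back. */
void demo_register(demo_registration_t *reg, demo_cb_proc proc, void *clientd)
{
    assert(reg != NULL && proc != NULL);
    reg->proc = proc;
    reg->clientd = clientd;
}

/* Library side: deliver an event by calling the registered handler with
 * the same clientd value that was given at registration. */
int demo_deliver(const demo_registration_t *reg, const demo_event_t *ev)
{
    assert(reg != NULL && ev != NULL);
    return reg->proc(ev, reg->clientd);
}

/* Application side: per-receiver state reached through clientd, so no
 * global variables are needed. */
typedef struct {
    unsigned long msgs;
    unsigned long bytes;
} app_stats_t;

int on_message(const demo_event_t *ev, void *clientd)
{
    app_stats_t *stats = clientd;

    stats->msgs++;
    stats->bytes += ev->len;
    return 0;
}
```

Registering on_message() with the address of an app_stats_t lets the same handler serve several receivers, each with its own statistics.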

There are two methods that UM can use to call the application callbacks: through context thread callback, or event queue dispatch.

In the context thread callback method (sometimes called direct callback), the UM context thread calls the application function directly. This offers the lowest latency, but imposes significant restrictions on the application function. See Event Queue Object.

The event queue dispatch of application callback introduces a dynamic buffer into which the UM context thread writes events. The application then uses a thread of its own to dispatch the buffered events. Thus, the application callback functions are called from the application thread, not directly from the context thread.

With event queue dispatching, the use of the application thread to make the callback allows the application function to make full, unrestricted use of the UM API. It also allows parallel execution of UM processing and application processing, which can significantly improve throughput on multi-processor hardware. The dynamic buffering provides resilience between the rate of event generation and the rate of event consumption (e.g. message arrival rate vs. message processing rate).

In addition, a UM event queue allows the application to be warned when the queue exceeds a threshold of event count or event latency. This allows the application to take corrective action if it is running too slowly, such as throwing away all events older than a threshold, or all events that are below a given priority.
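The separation between enqueuing events and dispatching them can be sketched as a small queue with a size warning. The code below is a single-threaded illustration under several assumptions: the names (demo_evq_t, evq_enqueue(), evq_dispatch(), sum_events()) are hypothetical, the buffer has a fixed capacity rather than growing dynamically, events are plain integers, and the locking that a real producer thread and consumer thread would need is omitted.

```c
#include <assert.h>
#include <stddef.h>

#define EVQ_CAPACITY 16   /* fixed size for brevity (assumption) */

typedef void (*evq_cb_proc)(int event, void *clientd);

typedef struct {
    int events[EVQ_CAPACITY];
    size_t head;             /* index of the oldest queued event */
    size_t count;            /* number of queued events */
    size_t warn_threshold;   /* warn when count exceeds this value */
} demo_evq_t;

void evq_init(demo_evq_t *q, size_t warn_threshold)
{
    assert(q != NULL);
    q->head = 0;
    q->count = 0;
    q->warn_threshold = warn_threshold;
}

/* Producer side (the context thread in the text above).
 * Returns 0 if queued, 1 if queued and the size threshold is now
 * exceeded, -1 if the queue is full and the event was not queued. */
int evq_enqueue(demo_evq_t *q, int event)
{
    assert(q != NULL);
    if (q->count == EVQ_CAPACITY) {
        return -1;
    }
    q->events[(q->head + q->count) % EVQ_CAPACITY] = event;
    q->count++;
    return q->count > q->warn_threshold ? 1 : 0;
}

/* Consumer side (an application thread). Dispatches at most max_events
 * events in arrival order and returns how many were dispatched. */
size_t evq_dispatch(demo_evq_t *q, size_t max_events,
                    evq_cb_proc proc, void *clientd)
{
    size_t n = 0;

    assert(q != NULL && proc != NULL);
    while (q->count > 0 && n < max_events) {
        int event = q->events[q->head];

        q->head = (q->head + 1) % EVQ_CAPACITY;
        q->count--;
        proc(event, clientd);
        n++;
    }
    return n;
}

/* Example application callback: accumulates event values in a long. */
void sum_events(int event, void *clientd)
{
    *(long *)clientd += event;
}
```

The return value 1 from evq_enqueue() plays the role of the threshold warning: it tells the application that the consumer is falling behind so that it can decide whether to drop or prioritize events.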

2.8. Rate Controls

For UDP-based transports (LBT-RU and LBT-RM), UM network stability is ensured through the use of rate controls. Without rate controls, sources can send UDP data so fast that the network can be flooded. Using rate controls, the source's bandwidth usage is limited. If the source attempts to exceed its bandwidth allocation, it is slowed down.
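One common way to limit a sender's bandwidth is a token bucket, sketched below. This is an assumption made for illustration: the text above does not say which algorithm UM uses, and the names rate_limiter_t, rate_init() and rate_try_send() are hypothetical. Time is passed in explicitly, in seconds, so the logic stays deterministic.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    double rate_bps;     /* sustained limit in bits per second */
    double burst_bits;   /* maximum number of bits that can accumulate */
    double tokens;       /* bits currently available for sending */
    double last_time;    /* time of the last refill, in seconds */
} rate_limiter_t;

void rate_init(rate_limiter_t *rl, double rate_bps, double burst_bits,
               double now)
{
    assert(rl != NULL && rate_bps > 0.0 && burst_bits > 0.0);
    rl->rate_bps = rate_bps;
    rl->burst_bits = burst_bits;
    rl->tokens = burst_bits;   /* start with a full bucket */
    rl->last_time = now;
}

/* Returns 1 if a message of len_bytes may be sent at time `now` (and
 * charges it against the allocation), or 0 if the sender must wait. */
int rate_try_send(rate_limiter_t *rl, size_t len_bytes, double now)
{
    double elapsed;
    double need;

    assert(rl != NULL);
    elapsed = now - rl->last_time;
    need = (double)len_bytes * 8.0;

    /* Refill in proportion to elapsed time, capped at the burst size. */
    if (elapsed > 0.0) {
        rl->tokens += elapsed * rl->rate_bps;
        if (rl->tokens > rl->burst_bits) {
            rl->tokens = rl->burst_bits;
        }
        rl->last_time = now;
    }
    if (rl->tokens < need) {
        return 0;
    }
    rl->tokens -= need;
    return 1;
}
```

A source that keeps receiving 0 from rate_try_send() is effectively slowed down to the configured rate, which is the behavior the section describes.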

2.9. Operational Statistics

UM maintains a variety of transport-level statistics, which give a real-time snapshot of UM's internal handling. For example, they give counts for transport messages transferred, bytes transferred, retransmissions requested, unrecoverable loss, etc.

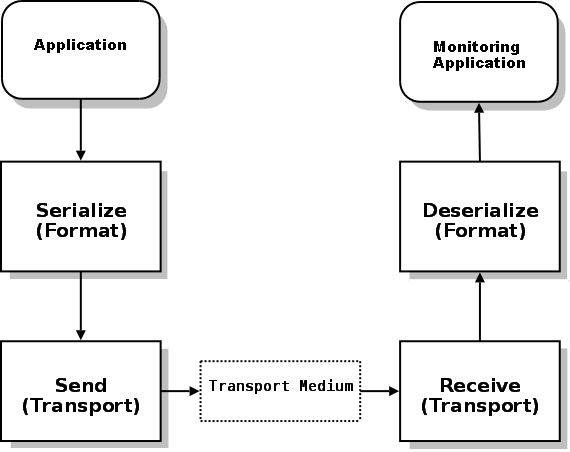

The UM monitoring API provides a framework to allow the convenient gathering and transmission of UM statistics to a central monitoring point. See Monitoring UMS.

3. UM Objects

Many UM documents use the term object. Be aware that with the C API, the term does not refer to formal objects as supported by C++ (i.e. class instances). The term is used here in an informal sense to denote an entity that can be created, used, and (usually) deleted, has functionality and data associated with it, and is managed through the API. The handle that is used to refer to an object is usually implemented as a pointer to a data structure (defined in lbm.h), but the internal structure of an object is said to be opaque, meaning that application code should not read or write the structure directly.

However, the UM Java JNI and C# .NET APIs are object oriented, with formal Java/C# objects. See the Java documentation and .NET documentation for more information.

This section discusses the following objects.

3.1. Context Object

A UM context object conceptually is an environment in which UM runs. An application creates a context, typically during initialization, and uses it for most other UM operations. In the process of creating the context, UM normally starts an independent thread (the context thread) to do the necessary background processing such as the following.

Topic resolution

Enforce rate controls for sending messages

Manage timers

Manage state

Implement UM protocols

Manage transport sessions

You create a context with lbm_context_create(). Your application can give a context a name with lbm_context_set_name(). Context names are optional but should be unique. UM does not enforce uniqueness, but issues a log warning if it encounters duplicate context names.

Each context maintains a cache of other contexts it learns about through context advertisements, which UM sends according to resolver_context_advertisement_interval. A context advertisement contains the context's name (if assigned), IP address, request port (request_tcp_port) and a Context Instance ID - an internal value assigned by UM. If a context needs to know about a context that is not in its cache, it sends a context query, which the "unknown" context replies to with a context advertisement. This mechanism for naming and advertising UM contexts facilitates UM Gateway operation, especially for UMP.

One of the more important functions of a context is to hold configuration information that is of context scope. See the UM Configuration Guide for options that are of context scope.

Most UM applications create a single context. However, there are some specialized circumstances where an application would create multiple contexts. For example, with appropriate configuration options, two contexts can provide separate topic name spaces. Also, multiple contexts can be used to portion available bandwidth across topic sub-spaces (in effect allocating more bandwidth to high-priority topics).

Warning: Regardless of the number of contexts created by your application, a good practice is to keep them open throughout the life of your application. Do not close them until you close the application.

3.2. Topic Object

A UM topic object is conceptually very simple; it is little more than a string (the topic name). However, UM uses the topic object to hold a variety of state information used by UM for internal processing. It is conceptually contained within a context. Topic objects must be bound to source or receiver objects.

A data source creates a topic by calling lbm_src_topic_alloc(). A data receiver doesn't explicitly create topic objects; UM does that as topics are discovered and cached. Instead, the receiving application calls lbm_rcv_topic_lookup() to find the topic object.

Unlike other objects, the topic object is not created or deleted by the application. UM creates, manages and deletes them internally as needed. However, the application does use them, so the API has functions that give the application access to them when needed (lbm_src_topic_alloc() and lbm_rcv_topic_lookup()).

3.3. Source Object

A UM source object is used to send messages to the topic that it is bound to. It is conceptually contained within a context.

You create a source object by calling lbm_src_create(). One of its parameters is a topic object that must have been previously allocated. A source object can be bound to only one topic. (A topic object, however, can be bound to many sources provided the sources exist in separate contexts.)

3.3.1. Message Properties Object

The message properties object allows your application to insert named, typed metadata in topic messages, and to implement functionality that depends on the message properties. UM allows eight property types: boolean, byte, short, int, long, float, double, and string.

To use message properties, create a message properties object with lbm_msg_properties_create(). Then send topic messages with lbm_src_send_ex() (or LBMSource.send() in the Java API or .NET API), passing the message properties object through an lbm_src_send_ex_info_t object. Set the LBM_SRC_SEND_EX_FLAG_PROPERTIES flag on the lbm_src_send_ex_info_t object to indicate that it includes properties.

Upon receipt of a message with properties, your application can access the properties directly through the message's properties field, which is null if no properties are present. You can retrieve individual property values directly by name, or you can iterate over the collection of properties to determine which properties are present at runtime.

The UM message property object supports the standard JMS message properties specification.

Note: The Message Properties Object does not support receivers using the arrival order without reassembly setting (option value = 0) of ordered_delivery.

3.3.1.1. Message Properties Performance Considerations

UM sends property names on the wire with every message. To reduce bandwidth requirements, minimize the length and number of properties.

When coding sources, consider the following sequence of guidelines:

Allocate a data structure to store message properties objects. This can be a thread-local structure if you use a relatively small number of threads, or a thread-safe pool of objects.

Before sending, retrieve a message properties object from the pool. If an object is not available, create a new object.

Set properties for the message.

Send the message using the appropriate API call, passing in the properties object.

After the send completes, clear the message properties object and return it to the pool.

When coding receivers in Java or .NET, call Dispose() on messages before returning from the application callback. This allows UM to internally recycle objects, and limits object allocation.
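The source-side pooling guidelines above can be sketched independently of the UM API. The following is a minimal model, not UM code: a plain dict stands in for a message properties object, and the names PropertiesPool and send_with_properties, as well as the send callable, are hypothetical and introduced only for illustration.

```python
import queue


class PropertiesPool:
    """Thread-safe pool of reusable property containers.

    A plain dict stands in for a UM message properties object (assumption).
    """

    def __init__(self):
        self._free = queue.SimpleQueue()
        self.created = 0  # number of objects allocated so far

    def acquire(self):
        # Reuse a pooled object if one is available, otherwise create one.
        try:
            return self._free.get_nowait()
        except queue.Empty:
            self.created += 1
            return {}

    def release(self, props):
        # Clear the object before returning it to the pool.
        props.clear()
        self._free.put(props)


def send_with_properties(pool, send, payload, **props):
    """Retrieve, fill, send, then clear and return a properties object."""
    obj = pool.acquire()
    try:
        obj.update(props)
        send(payload, obj)  # 'send' is a hypothetical stand-in for the send call
    finally:
        pool.release(obj)
```

Under this sketch, two consecutive sends from one thread allocate only a single properties object, which is the allocation saving the guidelines aim for.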

3.3.2. Source Configuration and Transport Sessions

As with contexts, a source holds configuration information that is of source scope. This includes network options, operational options and reliability options for LBT-RU and LBT-RM. For example, each source can use a different transport and would therefore configure a different network address to which to send topic messages. See the UM Configuration Guide for source configuration options.

As stated in Transports, many topics (and therefore sources) can be mapped to a single transport. Many of the configuration options for sources actually control or influence transport session activity. If many sources are sending topic messages over a single transport session (TCP, LBT-RU or LBT-RM), UM only uses the configuration options for the first source assigned to the transport.

For example, if the first source to use an LBT-RM transport session sets the transport_lbtrm_transmission_window_size to 24 MB and the second source sets the same option to 2 MB, UMS assigns 24 MB to the transport session's transport_lbtrm_transmission_window_size.

The UM Configuration Guide identifies the source configuration options that may be ignored when UM assigns the source to an existing transport session. Log file warnings also appear when UM ignores source configuration options.
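The "first source wins" rule described above can be modeled in a few lines. This is an illustrative sketch only: the TransportSession class and its attach method are hypothetical, and the ignored list merely stands in for the log warnings that UM writes.

```python
class TransportSession:
    """Toy model: a session keeps the options of the first source assigned to it."""

    def __init__(self):
        self.options = None   # set by the first source that joins
        self.ignored = []     # (source name, option, requested value)

    def attach(self, source_name, options):
        if self.options is None:
            # First source: its settings become the session's settings.
            self.options = dict(options)
            return
        # Later sources: differing values are ignored and recorded.
        for name, value in options.items():
            if self.options.get(name) != value:
                self.ignored.append((source_name, name, value))


session = TransportSession()
# Values are in MB, mirroring the 24 MB and 2 MB example in the text.
session.attach("first", {"transport_lbtrm_transmission_window_size": 24})
session.attach("second", {"transport_lbtrm_transmission_window_size": 2})
```

After both calls, the session still reports 24 for the window size and the second source's request appears in the ignored list.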

3.3.3. Zero Object Delivery (Source)

The Zero Object Delivery (ZOD) feature for Java and .NET lets sources deliver events to an application with no per-event object creation. (ZOD can also be utilized with context source events.) See Zero Object Delivery (ZOD) for information on how to employ ZOD.

3.4. Receiver Object

A UM receiver object is used to receive messages from the topic that it is bound to. It is conceptually contained within a context. Messages are delivered to the application by an application callback function, specified when the receiver object is created.

You create a receiver object by calling lbm_rcv_create(). One of its parameters is a topic object that must have been previously looked up. A receiver object can be bound to only one topic. Multiple receiver objects can be created for the same topic.

3.4.1. Receiver Configuration and Transport Sessions

A receiver holds configuration information that is of receiver scope. This includes network options, operational options and reliability options for LBT-RU and LBT-RM. See the UM Configuration Guide for receiver configuration options.

As stated above in Source Configuration and Transport Sessions, many topics (and therefore receivers) can be mapped to a single transport. As with source configuration options, many receiver configuration options control or influence transport session activity. If many receivers are receiving topic messages over a single transport session (TCP, LBT-RU or LBT-RM), UM only uses the configuration options for the first receiver assigned to the transport.

For example, if the first receiver to use an LBT-RM transport session sets the transport_lbtrm_nak_generation_interval to 10 seconds and the second receiver sets the same option to 2 seconds, UMS assigns 10 seconds to the transport session's transport_lbtrm_nak_generation_interval.

The UM Configuration Guide identifies the receiver configuration options that may be ignored when UM assigns the receiver to an existing transport session. Log file warnings also appear when UM ignores receiver configuration options.

3.4.2. Wildcard Receiver

A wildcard receiver object is created by calling lbm_wildcard_rcv_create(). Instead of a topic object, the caller supplies a pattern which is used by UM to match multiple topics. Since the application doesn't explicitly look up the topics, the topic attribute is passed into lbm_wildcard_rcv_create() so that options can be set. Also, wildcarding has its own set of options (e.g. pattern type).

The pattern supplied for wildcard matching is normally a general regular expression. There are two types of supported regular expressions that differ somewhat in the syntax of the patterns (see the wildcard_receiver pattern_type option in the UM Configuration Guide). Those types are:

PCRE - (recommended) the same form of regular expressions recognized by Perl; see http://perldoc.perl.org/perlrequick.html for details, or

regex - POSIX extended regular expressions; see http://www.freebsd.org/cgi/man.cgi?query=re_format&section=7 for details. Note that regex is not supported on all platforms.

A third type of wildcarding is appcb, in which the application defines its own algorithm to select topic names. When appcb is configured, the pattern parameter of lbm_wildcard_rcv_create() is ignored. Instead, an application callback function is configured (see the wildcard_receiver pattern_callback option in the UM Configuration Guide). UM then calls that application function with a topic name and the function can use whatever method is appropriate to decide if the topic should be included with the receiver.
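The difference between pattern-based selection and appcb-style selection can be illustrated with a small model. This is a sketch under stated assumptions: Python's re module stands in for PCRE (the two dialects are similar but not identical), matching is treated as an unanchored search, and the match_topics function and the topic names are hypothetical.

```python
import re


def match_topics(topic_names, pattern=None, callback=None):
    """Select topics either by regular expression or by application callback.

    When a callback is supplied the pattern is ignored, mirroring the
    appcb behavior described above.
    """
    if callback is not None:
        return [t for t in topic_names if callback(t)]
    compiled = re.compile(pattern)
    return [t for t in topic_names if compiled.search(t)]


topics = ["quotes.IBM", "quotes.msft", "trades.IBM"]

# Pattern-based selection: upper-case symbols under "quotes." only.
by_pattern = match_topics(topics, pattern=r"^quotes\.[A-Z]+$")

# Callback-based selection: any application-defined rule.
by_callback = match_topics(topics, callback=lambda t: t.endswith(".IBM"))
```

Here by_pattern contains only quotes.IBM, while by_callback contains quotes.IBM and trades.IBM.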

Be aware that some platforms may not support all of the regular expression wildcard types. For example, UM does not support the use of Unicode PCRE characters in wildcard receiver patterns on any system that communicates with an HP-UX or AIX system. See the UM Knowledgebase article, Platform-Specific Dependencies, for details.

Also note that if UM topic resolution is configured to turn off source advertisements, then wildcard receivers must be configured for PCRE. The other wildcard types do not support receiver queries for topic resolution.

For an example of wildcard usage, see lbmwrcv.c

Users of TIBCO® SmartSockets™ will want to look at the UM Knowledgebase article, Wildcard Topic Regular Expressions.

3.4.3. Zero Object Delivery (ZOD)

The Zero Object Delivery (ZOD) feature for Java and .NET lets receivers (and sources) deliver messages and events to an application with no per-message or per-event object creation. This facilitates source/receiver applications that would require little to no garbage collection at runtime, producing lower and more consistent message latencies.

To take advantage of this feature, you must call dispose() on a message to mark it as available for reuse. To access data from the message when using ZOD, you use a specific pair of LBMMessage-class methods (see below) to extract message data directly from the message, rather than the standard data() method. Using the latter method creates a byte array, and consequently, an object. It is the subsequent garbage collecting to recycle those objects that can affect performance.

For using ZOD, the LBMMessage class methods are:

Java: dispose(), dataBuffer(), and dataLength()

.NET: dispose(), dataPointer(), and length()

On the other hand, you may need to keep the message as an object for further use after the callback returns. In this case, ZOD is not appropriate: you must call promote() on the message, and you can then use data() to extract message data.

For more details see the Java API Overview or the .NET LBMMessage Class description. This feature does not apply to the C API.

3.5. Event Queue Object

A UM event queue object is conceptually a managed data and control buffer. UM delivers events (including received messages) to your application by means of application callback functions. Without event queues, these callback functions are called from the UM context thread, which places the following restrictions on the application function being called:

The application function is not allowed to make certain API calls (mostly having to do with creating or deleting UM objects).

The application function must execute very quickly without blocking.

The application does not have control over when the callback executes. It can't prevent callbacks during critical sections of application code.

Some circumstances require the use of UM event queues. As mentioned above, an event queue is required if the receive callback needs to use UM functions that create or delete objects, or if the receive callback performs operations that potentially block. You may also want to use an event queue if the receive callback is CPU intensive and can make good use of multiple CPU hardware. Not using an event queue provides the lowest latency. However, high message rates or extensive message processing can negate the low latency benefit if the context thread continually blocks.

Of course, your application can create its own queues, which can be bounded, blocking queues or unbounded, non-blocking queues. For transports that are flow-controlled, a bounded, blocking application queue preserves flow control in your messaging layer because the effect of a filled or blocked queue extends through the message path all the way to the source. The speed of the application queue becomes the speed of the source.
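The contrast between the two kinds of application queue can be seen with Python's standard queue module. This is a minimal sketch and not UM code; the offer helper and the short timeout are illustrative choices that make a stalled producer observable instead of blocking it forever.

```python
import queue


def offer(q, item, timeout=0.01):
    """Return True if the item was queued, False if the producer would stall."""
    try:
        q.put(item, timeout=timeout)
        return True
    except queue.Full:
        return False


bounded = queue.Queue(maxsize=2)   # bounded, blocking: a full queue pushes back
unbounded = queue.Queue()          # maxsize 0 means no size limit

bounded_results = [offer(bounded, n) for n in range(3)]
unbounded_results = [offer(unbounded, n) for n in range(3)]
```

With nothing draining the queues, the third put on the bounded queue fails to complete, which is the back pressure that propagates toward the source on a flow-controlled transport. The unbounded queue accepts every item and simply grows.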

UM event queues are unbounded, non-blocking queues and provide the following unique features.

Your application can set a queue size threshold with queue_size_warning and be warned when the queue contains too many messages.

Your application can set a delay threshold with queue_delay_warning and be warned when events have been in the queue for too long.

The application callback function has no UM API restrictions.

Your application can control exactly when UM delivers queued events with lbm_event_dispatch(). And you can have control return to your application either when specifically asked to do so (by calling lbm_event_dispatch_unblock()), or optionally when there are no events left to deliver.

Your application can take advantage of parallel processing on multiple processor hardware since UM processes asynchronously on a separate thread from your application's processing of received messages. By using multiple application threads to dispatch an event queue, or by using multiple event queues, each with its own dispatch thread, your application can further increase parallelism.

You create a UM event queue in the C API by calling lbm_event_queue_create(). In the Java API and the .NET API, use the LBMEventQueue class. An event queue object also holds configuration information that is of event queue scope. See Event Queue Options.

3.6. Transport Objects

This section discusses the following topics.

3.6.1. Transport TCP

The TCP UMS transport uses normal TCP connections to send messages from sources to receivers. This is the default transport when it's not explicitly set. TCP is a good choice when:

Flow control is desired. I.e. when one or more receivers cannot keep up, it is desired to slow down the source. This is a "better late than never" philosophy.

Equal bandwidth sharing with other TCP traffic is desired. I.e. when it is desired that the source slow down when general network traffic becomes heavy.

There are few receivers listening to each topic. Multiple receivers for a topic require multiple transmissions of each message, which places a scaling burden on the source machine and the network.

The application is not sensitive to latency. Use of TCP as a messaging transport can result in unbounded latency.

The messages must pass through a restrictive firewall which does not pass multicast traffic.

Note: TCP transports may be distributed to receiving threads. See Multi-Transport Threads for more information.

3.6.2. Transport TCP-LB

The TCP-LB UMS transport is a variation on the TCP transport which adds latency-bounded behavior. The source is not flow-controlled as a result of network congestion or slow receivers. So, for applications that require a "better never than late" philosophy, TCP-LB can be a better choice.

However, latency cannot be controlled as tightly as with UDP-based transports (see below). In particular, latency can still be introduced because TCP-LB shares bandwidth equally with other TCP traffic. It also has the same scaling issues as TCP when multiple receivers are present for each topic.

Note: TCP-LB transports may be distributed to receiving threads. See Multi-Transport Threads for more information.

3.6.3. Transport LBT-RU

The LBT-RU UMS transport adds reliable delivery to unicast UDP to send messages from sources to receivers. This provides greater flexibility in the control of latency. For example, the application can further limit latency by allowing the use of arrival order delivery. See the UM Knowledgebase FAQ, Why can't I have low-latency delivery and in-order delivery?. Also, LBT-RU is less sensitive to overall network load; it uses source rate controls to limit its maximum send rate.

Since it is based on unicast addressing, LBT-RU can pass through most firewalls. However, it has the same scaling issues as TCP when multiple receivers are present for each topic.

Note: LBT-RU can use Datagram Bypass Layer (DBL) acceleration in conjunction with DBL-enabled Myricom® 10-Gigabit Ethernet NICs for Linux and Microsoft® Windows®. DBL is a kernel-bypass technology that accelerates sending and receiving UDP traffic. See Transport Acceleration Options for more information.

Note: LBT-RU transports may be distributed to receiving threads. See Multi-Transport Threads for more information.

3.6.4. Transport LBT-RM

The LBT-RM UMS transport adds reliable multicast to UDP to send messages. This provides the maximum flexibility in the control of latency. In addition, LBT-RM can scale effectively to large numbers of receivers per topic using network hardware to duplicate messages only when necessary at wire speed. One limitation is that multicast is often blocked by firewalls.

LBT-RM is a UDP-based, reliable multicast protocol designed with the use of UM and its target applications specifically in mind. The protocol is very similar to PGM, but with changes to aid low latency messaging applications.

Topic Mapping - Several topics may map onto the same LBT-RM session. Thus a multiplexing mechanism to LBT-RM is used to distinguish topic level concerns from LBT-RM session level concerns (such as retransmissions, etc.). Each message to a topic is given a sequence number in addition to the sequence number used at the LBT-RM session level for packet retransmission.

Negative Acknowledgments (NAKs) - LBT-RM uses NAKs as PGM does. NAKs are unicast to the sender. For simplicity, LBT-RM uses a similar NAK state management approach as PGM specifies.

Time Bounded Recovery - LBT-RM allows receivers to specify a maximum time to wait for a lost piece of data to be retransmitted. This allows a recovery time bound to be placed on data that has a definite lifetime of usefulness. If this time limit is exceeded and no retransmission has been seen, then the piece of data is marked as an unrecoverable loss and the application is informed. The data stream may continue and the unrecoverable loss will be ordered as a discrete event in the data stream just as a normal piece of data.

Flexible Delivery Ordering - LBT-RM receivers have the option to have the data for an individual topic delivered "in order" or "arrival order". Messages delivered "in order" will arrive in sequence number order to the application. Thus loss may delay messages from being delivered until the loss is recovered or unrecoverable loss is determined. With "arrival-order" delivery, messages will arrive at the application as they are received by the LBT-RM session. Duplicates are ignored and lost messages will have the same recovery methods applied, but the ordering may not be preserved. Delivery order is a topic level concern. Thus loss of messages in one topic will not interfere or delay delivery of messages in another topic.

Session State Advertisements - In PGM, SPM packets are used to advertise session state and to perform PGM router assist in the routers. For LBT-RM, these advertisements are only used when data is not flowing. Once data stops on a session, advertisements are sent with an exponential back-off (to a configurable maximum interval) so that the bandwidth taken up by the session is minimal.

Sender Rate Control - LBT-RM can control a sender's rate of injection of data into the network by use of a rate limiter. This rate is configurable and will back pressure the sender, not allowing the application to exceed the rate limit it has specified. In addition, LBT-RM senders have control over the rate of retransmissions separately from new data. This allows the sending application to guarantee a minimum transmission rate even in the face of massive loss at some or all receivers.

Low Latency Retransmissions - LBT-RM senders do not mandate the use of NCF packets as PGM does. Because low latency retransmissions are such an important feature, LBT-RM senders by default send retransmissions immediately upon receiving a NAK. After sending a retransmission, the sender ignores additional NAKs for the same data and does not repeatedly send NCFs. The oldest data being requested in NAKs has priority over newer data so that if retransmissions are rate controlled, then LBT-RM sends the most important retransmissions as fast as possible.
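The interaction of Flexible Delivery Ordering and Time Bounded Recovery described above can be modeled as follows. This is a simplified sketch, not the LBT-RM implementation: it assumes topic-level sequence numbers start at 0, and it represents the expiry of the recovery time bound as a ("lost", n) event in the input rather than modeling timers. The deliver function is hypothetical.

```python
def deliver(events, in_order):
    """Return the events in the order the application would see them.

    events: list of (kind, sequence_number), kind is "data" or "lost".
    A "lost" event stands for an unrecoverable loss being declared.
    """
    delivered = []
    seen = set()
    pending = {}      # out-of-order events held back for in-order delivery
    next_sqn = 0      # next sequence number the application expects
    for kind, sqn in events:
        if sqn in seen:
            continue  # duplicates are ignored in both modes
        seen.add(sqn)
        if not in_order:
            delivered.append((kind, sqn))  # arrival order: deliver immediately
            continue
        pending[sqn] = kind
        while next_sqn in pending:         # release any contiguous run
            delivered.append((pending.pop(next_sqn), next_sqn))
            next_sqn += 1
    return delivered


events = [("data", 0), ("data", 2), ("data", 3), ("data", 1),
          ("data", 2), ("data", 5), ("lost", 4)]
arrival = deliver(events, in_order=False)
ordered = deliver(events, in_order=True)
```

In arrival-order mode, message 5 reaches the application before the loss of message 4 is reported. In in-order mode, message 5 is held until message 4 is declared unrecoverable, and the loss appears in sequence as a discrete event.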

Note: LBT-RM can use Datagram Bypass Layer (DBL) acceleration in conjunction with DBL-enabled Myricom 10-Gigabit Ethernet NICs for Linux and Microsoft Windows. DBL is a kernel-bypass technology that accelerates sending and receiving UDP traffic. See Transport Acceleration Options for more information.

Note: LBT-RM transports may be distributed to receiving threads. See Multi-Transport Threads for more information.

3.6.5. Transport LBT-IPC

The LBT-IPC transport is an Interprocess Communication (IPC) UM transport that allows sources to publish topic messages to a shared memory area managed as a static ring buffer from which receivers can read topic messages. Message exchange takes place at memory access speed which can greatly improve throughput when sources and receivers can reside on the same host. LBT-IPC can be either source-paced or receiver-paced.

The LBT-IPC transport uses a "lock free" design that eliminates calls to the Operating System and allows receivers quicker access to messages. An internal validation method enacted by receivers while reading messages from the Shared Memory Area ensures message data integrity. The validation method compares IPC header information at different times to ensure consistent, and therefore, valid message data. Sources can send individual messages or a batch of messages, each of which possesses an IPC header.

Note: Transport LBT-IPC is not supported on the HP NonStop® platform.

3.6.5.1. LBT-IPC Shared Memory Area

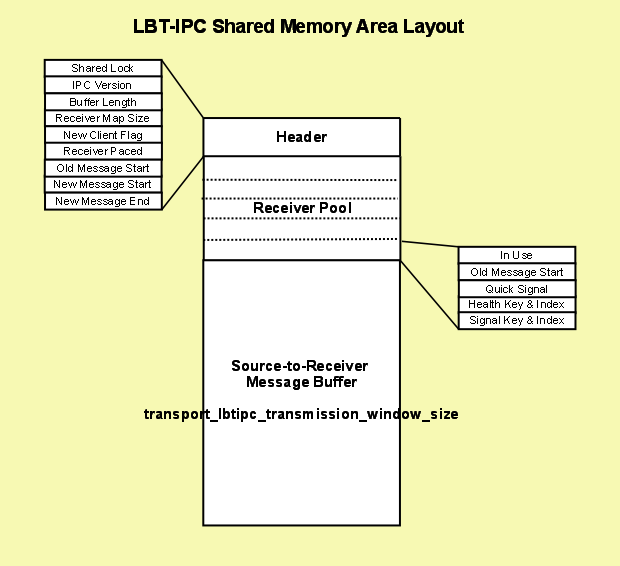

The following diagram illustrates the Shared Memory Area used for LBT-IPC.

3.6.5.1.1. Header

The Header contains information about the shared memory area resource.

Shared Lock - shared receiver pool semaphore (mutex on Microsoft Windows) to ensure mutually exclusive access to the receiver pool.

Version - LBT-IPC version number which is independent of any UM product version number.

Buffer Length - size of shared memory area.

Receiver Map Size - Number of entries available in the Receiver Pool which you configure with the source option, transport_lbtipc_maximum_receivers_per_transport.

New Client Flag - set by the receiver after setting its Receiver Pool entry and before releasing the Shared Lock. Indicates to the source that a new receiver has joined the transport.

Receiver Paced - Indicates if you've configured the transport for receiver-pacing.

Old Message Start - pointer indicating messages that may be reclaimed.

New Message Start - pointer indicating messages that may be read.

New Message End - pointer indicating the end of messages that may be read, which may not be the same as the Old Message Start pointer.

3.6.5.1.2. Receiver Pool

The receiver pool is a collection of receiver connections maintained in the Shared Memory Area. The source reads this information if you've configured receiver-pacing to determine if a message can be reclaimed or to monitor a receiver. Each receiver is responsible for finding a free entry in the pool and marking it as used.

In Use flag - set by receiver while holding the Shared Lock, which effectively indicates the receiver has joined the transport session. Using the Shared Lock ensures mutually exclusive access to the receiver connection pool.

Oldest Message Start - set by receiver after reading a message. If you enable receiver-pacing the source reads it to determine if message memory can be reclaimed.

Monitor Shared Lock - checked by the source to monitor a receiver. (semaphore on Linux, event on Microsoft Windows) See Receiver Monitoring.

Signal Shared Lock - Set by source to notify receiver that new data has been written. (semaphore on Linux, mutex on Microsoft Windows) If you set transport_lbtipc_receiver_thread_behavior to busy_wait, the receiver sets this semaphore to zero and the source does not notify.

3.6.5.1.3. Source-to-Receiver Message Buffer

This area contains message data. You specify the size of the shared memory area with a source option, transport_lbtipc_transmission_window_size. The size of the shared memory area cannot exceed your platform's shared memory area maximum size. UM stores the memory size in the shared memory area's header. The Old Message Start and New Message Start point to positions in this buffer.

3.6.5.2. Sources and LBT-IPC

When you create a source with lbm_src_create() and you've set the transport option to IPC, UM creates a shared memory area object. UM assigns one of the transport IDs to this area specified with the UM context configuration options, transport_lbtipc_id_high and transport_lbtipc_id_low. You can also specify a shared memory location outside of this range with a source configuration option, transport_lbtipc_id, to prioritize certain topics, if needed.

UM names the shared memory area object according to the format, LBTIPC_%x_%d where %x is the hexadecimal Session ID and %d is the decimal Transport ID. Example names are LBTIPC_42792ac_20000 or LBTIPC_66e7c8f6_20001. Receivers access a shared memory area with this object name to receive (read) topic messages.
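The naming format can be reproduced with ordinary printf-style formatting. The helper name ipc_object_name is hypothetical; the format string and the two example values are taken from the text above.

```python
def ipc_object_name(session_id, transport_id):
    """Build a shared memory object name in the LBTIPC_%x_%d format."""
    return "LBTIPC_%x_%d" % (session_id, transport_id)


first = ipc_object_name(0x42792AC, 20000)
second = ipc_object_name(0x66E7C8F6, 20001)
```

This yields LBTIPC_42792ac_20000 and LBTIPC_66e7c8f6_20001, matching the example names. Note that %x produces lower-case hexadecimal without leading zeros.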

Using the configuration option, transport_lbtipc_behavior, you can choose source-paced or receiver-paced message transport. See Transport LBT-IPC Operation Options.

3.6.5.2.1. Sending over LBT-IPC

To send on a topic (write to the shared memory area) the source writes to the Shared Memory Area starting at the Oldest Message Start position. It then increments each receiver's Signal Lock if the receiver has not set this to zero.

3.6.5.3. Receivers and LBT-IPC

Receivers operate identically to receivers for all other UM transports. A receiver can actually receive topic messages from a source sending on its topic over TCP, LBT-RU or LBT-RM and from a second source sending on LBT-IPC without any special configuration. The receiver learns what it needs to join the LBT-IPC session through the topic advertisement.

3.6.5.3.1. Topic Resolution and LBT-IPC

Topic resolution operates with LBT-IPC exactly as it does with other UM transports, albeit with a new advertisement type, LBMIPC. Advertisements for LBT-IPC contain the Transport ID, Session ID and Host ID. Receivers obtain LBT-IPC advertisements in the normal manner (resolver cache, advertisements received on the multicast resolver address:port, and responses to queries). Advertisements for topics from LBT-IPC sources can reach receivers on different machines if they use the same topic resolution configuration. However, those receivers silently ignore those advertisements since they cannot join the IPC transport. See Sending to Both Local and Remote Receivers.

3.6.5.3.2. Receiver Pacing

Although receiver pacing is a source behavior option, some different things must happen on the receiving side to ensure that a source does not reclaim (overwrite) a message until all receivers have read it. When you use the default transport_lbtipc_behavior (source-paced), each receiver's Oldest Message Start position in the Shared Memory Area is private to each receiver. The source writes to the Shared Memory Area independently of receivers' reading. For receiver-pacing, however, all receivers share their Oldest Message Start position with the source. The source will not reclaim a message until all receivers have successfully read that message.
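The difference between the two pacing modes can be illustrated with a toy ring buffer. This is a conceptual sketch only and does not reflect the actual shared memory layout: the IpcRing class is hypothetical, messages are counted in whole slots rather than bytes, and a receiver-paced send returns False where a real source would have to wait.

```python
class IpcRing:
    """Toy ring buffer showing source-paced versus receiver-paced reclaiming."""

    def __init__(self, capacity, receiver_paced):
        self.capacity = capacity
        self.receiver_paced = receiver_paced
        self.slots = [None] * capacity
        self.written = 0      # total number of messages written so far
        self.read_pos = {}    # receiver -> index of its oldest unread message

    def join(self, receiver):
        self.read_pos[receiver] = self.written

    def send(self, msg):
        if self.receiver_paced and self.read_pos:
            oldest_unread = min(self.read_pos.values())
            if self.written - oldest_unread >= self.capacity:
                return False  # some receiver has not read the slot to reclaim
        self.slots[self.written % self.capacity] = msg
        self.written += 1
        return True

    def receive(self, receiver):
        """Return (messages read, number of messages lost to overwriting)."""
        pos = self.read_pos[receiver]
        lost = max(0, self.written - self.capacity - pos)
        pos += lost           # skip messages that were already overwritten
        msgs = [self.slots[i % self.capacity] for i in range(pos, self.written)]
        self.read_pos[receiver] = self.written
        return msgs, lost
```

With a capacity of two and a receiver that has not yet read anything, a source-paced ring accepts a third message and the receiver loses the first one, whereas a receiver-paced ring refuses the third message until the receiver catches up.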

3.6.5.3.3. Receiver Monitoring

To ensure that a source does not wait on a receiver that is not running, the source monitors a receiver via the Monitor Shared Lock allocated to each receiving context. (This lock is in addition to the semaphore already allocated for signaling new data.) A new receiver takes and holds the Monitor Shared Lock and releases the resource when it dies. If the source is able to obtain the resource, it knows the receiver has died. The source then clears the receiver's In Use flag in its Receiver Pool Connection.
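The monitoring handshake can be sketched with an in-process lock. This is only an analogy: a threading.Lock stands in for the cross-process semaphore or event, the die method stands in for the operating system releasing the resource when the receiving process exits, and the names ReceiverEntry and source_check are hypothetical.

```python
import threading


class ReceiverEntry:
    """Toy model of one Receiver Pool entry."""

    def __init__(self):
        self.in_use = True
        self.monitor = threading.Lock()
        self.monitor.acquire()   # a new receiver takes and holds the lock

    def die(self):
        self.monitor.release()   # the lock is released when the receiver dies


def source_check(entry):
    """Return True if the receiver still appears to be running."""
    if entry.monitor.acquire(blocking=False):
        # The source obtained the lock, so nobody is holding it any more.
        entry.monitor.release()
        entry.in_use = False     # clear the In Use flag for the dead receiver
        return False
    return True
```

While the receiver holds the lock, source_check returns True and leaves the entry alone. Once the lock has been released, the next check obtains it, concludes that the receiver has died, and clears the In Use flag.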

3.6.5.4. Similarities with Other UM Transports

Although no actual network transport occurs, UM functions in much the same way as if you send packets across the network as with other UM transports.

If you use a range of LBT-IPC transport IDs, UM assigns multiple topics sent by multiple sources to all the transport sessions in a round robin manner just like other UM transports.

Transport sessions assume the configuration option values of the first source assigned to the transport session.

Sources are subject to message batching.

3.6.5.5. Differences from Other UM Transports

Unlike LBT-RM which uses a transmission window to specify a buffer size to retain messages in case they must be retransmitted, LBT-IPC uses the transmission window option to establish the size of the shared memory.

LBT-IPC does not retransmit messages. Since LBT-IPC transport is essentially a memory write/read operation, messages should not be lost in transit. However, if the shared memory area fills up, new messages overwrite old messages and the loss is absolute. No retransmission of old messages that have been overwritten occurs.

Receivers also do not send NAKs when using LBT-IPC.

LBT-IPC does not support Ordered Delivery options. However, if you set ordered_delivery 1 or -1, LBT-IPC reassembles any large messages.

LBT-IPC does not support Rate Control.

LBT-IPC creates a separate receiver thread in the receiving context.

3.6.5.6. Sending to Both Local and Remote Receivers

A source application that wants to support both local and remote receivers should create two UM Contexts with different topic resolution configurations, one for IPC sends and one for sends to remote receivers. Separate contexts allow you to use the same topic for both IPC and network sources. If you simply created two source objects (one IPC, one say LBT-RM) in the same UM Context, you would have to use separate topics and suffer possible higher latency because the sending thread would be blocked for the duration of two send calls.

A UM source will never automatically use IPC when the receivers are local and a network transport for remote receivers because the discovery of a remote receiver would hurt the performance of local receivers. An application that wants transparent switching can implement it in a simple wrapper.

3.6.5.7. LBT-IPC Object Diagram

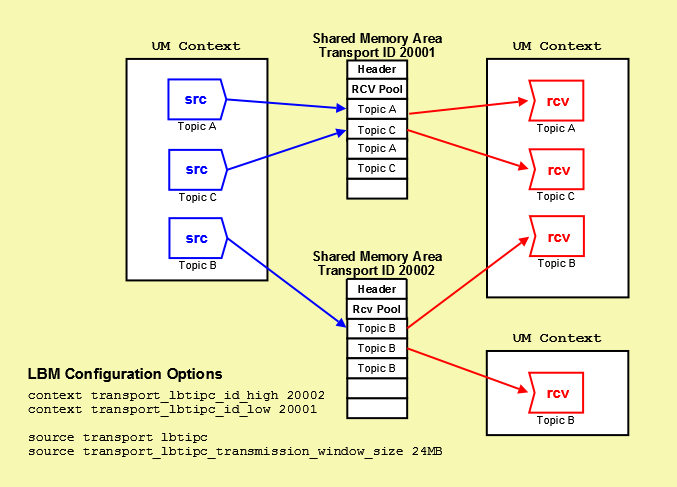

The following diagram illustrates how sources and receivers interact with the shared memory area used in the LBT-IPC transport.

In the diagram above, 3 sources send (write) to two Shared Memory Areas while four receivers in two different contexts receive (read) from the areas. The diagram also shows the UM configuration options that set up this scenario. The assignment of sources to Shared Memory Areas demonstrate UM's round robin method. UM assigns the source sending on Topic A to Transport 20001, the source sending on Topic B to Transport 20002 and the source sending on Topic C back to the top of the transport ID range, 20001. The memory area size, although the default value, appears for illustration.
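The round robin assignment in this scenario can be reproduced with a short model. This is an illustration of the assignment order only; the assign_transports function is hypothetical, and its two range arguments correspond to the transport_lbtipc_id_low and transport_lbtipc_id_high options.

```python
from itertools import cycle


def assign_transports(topics, id_low, id_high):
    """Assign topics to transport IDs in round robin order over the ID range."""
    ids = cycle(range(id_low, id_high + 1))
    return {topic: next(ids) for topic in topics}


assignment = assign_transports(["Topic A", "Topic B", "Topic C"], 20001, 20002)
```

With a range of 20001 to 20002, Topic A and Topic C share transport 20001 and Topic B uses 20002, as in the scenario described above.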

3.6.5.8. Required Authorities

LBT-IPC requires no special operating system authorities, except on Microsoft Windows Vista® and Microsoft Windows Server 2008, which require Administrator privileges. In addition, on Microsoft Windows XP, applications must be started by the same user. However, the user is not required to have administrator privileges. In order for applications to communicate with a service, the service must use a user account that has Administrator privileges.

3.6.5.9. Host Resource Usage and Limits

LBT-IPC contexts and sources consume host resources as follows.

Per Source - 1 shared memory segment, 1 shared lock (semaphore on Linux, mutex on Microsoft Windows)

Per Receiving Context - 2 shared locks (semaphores on Linux, one event and one mutex on Microsoft Windows)

Across most operating system platforms, these resources have the following limits.

4096 shared memory segments, though some platforms use different limits

32,000 shared semaphores (128 shared semaphore sets * 250 semaphores per set)

Consult your operating system documentation for specific limits per type of resource. Resources may be displayed and reclaimed using the LBT-IPC Resource Manager. See also Managing LBT-IPC Host Resources.
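
As a rough planning aid, the per-source and per-context costs above can be checked against the typical limits with simple arithmetic. The sketch below is an assumption-laden model: the function names are hypothetical, the limits are the typical values quoted above rather than values read from a real host, and all shared locks are counted against the semaphore limit, which reflects the Linux case only.

```python
# Typical limits quoted in the text; real hosts may differ.
SHM_SEGMENT_LIMIT = 4096
SEMAPHORE_SETS = 128
SEMAPHORES_PER_SET = 250
SEMAPHORE_LIMIT = SEMAPHORE_SETS * SEMAPHORES_PER_SET  # 32,000


def ipc_resource_usage(num_sources, num_receiving_contexts):
    """Return (shared_memory_segments, shared_locks) for a deployment.

    Each source uses 1 segment and 1 lock; each receiving context
    uses 2 locks. Hypothetical helper, not part of UM.
    """
    segments = num_sources
    locks = num_sources + 2 * num_receiving_contexts
    return segments, locks


def fits_typical_limits(num_sources, num_receiving_contexts):
    """Check the estimated usage against the typical limits above."""
    segments, locks = ipc_resource_usage(num_sources, num_receiving_contexts)
    return segments <= SHM_SEGMENT_LIMIT and locks <= SEMAPHORE_LIMIT


segments, locks = ipc_resource_usage(num_sources=100, num_receiving_contexts=40)
print("segments:", segments, "locks:", locks)
print("fits:", fits_typical_limits(100, 40))
```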

3.6.5.10. LBT-IPC Resource Manager

Deleting an IPC source with lbm_src_delete() or deleting an IPC receiver with lbm_rcv_delete() reclaims the shared memory area and locks allocated by the IPC source or receiver. However, if a less than graceful exit from a process occurs, global resources remain allocated but unused. To address this possibility, the LBT-IPC Resource Manager maintains a resource allocation database with a record for each global resource (memory or semaphore) allocated or freed. You can use the LBT-IPC Resource Manager to discover and reclaim resources. See the three example outputs below.

3.6.5.10.1. Displaying Resources

    $> lbtipc_resource_manager
    Displaying Resources (to reclaim you must type '-reclaim' exactly)
    --Memory Resources--
    Memory resource: Process ID: 24441 SessionID: ab569cec XportID: 20001
    --Semaphore Resources--
    Semaphore key: 0x68871d75
    Semaphore resource Index 0: reserved
    Semaphore resource: Process ID: 24441 Sem Index: 1
    Semaphore resource: Process ID: 24436 Sem Index: 2

3.6.5.10.2. Reclaiming Unused Resources

    $> lbtipc_resource_manager -reclaim
    Reclaiming Resources
    Process 24441 not found: reclaiming Memory resource (SessionID: ab569cec XPortID: 20001)
    Process 24441 not found: reclaiming Semaphore resource: Key: 0x68871d75 Sem Index: 1
    Process 24436 not found: reclaiming Semaphore resource: Key: 0x68871d75 Sem Index: 2

3.6.5.10.3. Discovering Resources In Use

    $> lbtipc_resource_manager -reclaim
    Reclaiming Resources
    Process 24441 still active! Memory resource not reclaimed (SessionID: ab569cec XPortID: 20001)
    Process 24441 still active! Semaphore resource not reclaimed (Key: 0x68871d75 Sem Index: 1)
    Process 24436 still active! Semaphore resource not reclaimed (Key: 0x68871d75 Sem Index: 2)
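
An operations script might summarize the display output by owning process before deciding whether to run a reclaim. The following is a minimal sketch that parses the sample text from Displaying Resources. It assumes the tool prints one record per line in exactly the wording of that sample; the regular expressions and the `resources_by_process` helper are hypothetical and may need adjusting for other versions.

```python
import re

# Sample copied from the Displaying Resources example, one record per line.
SAMPLE_OUTPUT = """\
Displaying Resources (to reclaim you must type '-reclaim' exactly)
--Memory Resources--
Memory resource: Process ID: 24441 SessionID: ab569cec XportID: 20001
--Semaphore Resources--
Semaphore key: 0x68871d75
Semaphore resource Index 0: reserved
Semaphore resource: Process ID: 24441 Sem Index: 1
Semaphore resource: Process ID: 24436 Sem Index: 2
"""

MEMORY_RE = re.compile(
    r"Memory resource: Process ID: (\d+) SessionID: (\w+) XportID: (\d+)"
)
SEMAPHORE_RE = re.compile(r"Semaphore resource: Process ID: (\d+) Sem Index: (\d+)")


def resources_by_process(output):
    """Map each owning process ID to the resources recorded for it."""
    by_pid = {}
    for line in output.splitlines():
        match = MEMORY_RE.search(line)
        if match:
            pid, session, xport = match.groups()
            by_pid.setdefault(int(pid), []).append(
                f"memory session={session} xport={xport}"
            )
            continue
        match = SEMAPHORE_RE.search(line)
        if match:
            pid, index = match.groups()
            by_pid.setdefault(int(pid), []).append(f"semaphore index={index}")
    return by_pid


owners = resources_by_process(SAMPLE_OUTPUT)
for pid, resources in owners.items():
    print(pid, resources)
```

The reserved semaphore at index 0 has no owning process in the sample, so the sketch skips it.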

3.6.6. Transport LBT-RDMA

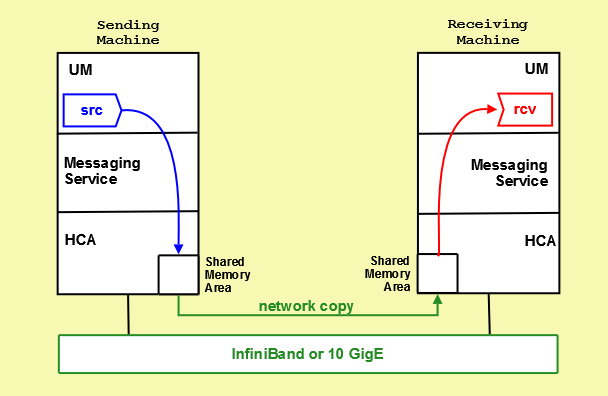

The LBT-RDMA transport is a Remote Direct Memory Access (RDMA) UM transport that allows sources to publish topic messages to a shared memory area from which receivers can read topic messages. LBT-RDMA runs across InfiniBand and 10 Gigabit Ethernet hardware.

Note: Use of the LBT-RDMA transport requires the purchase and installation of the Ultra Messaging RDMA Transport Module. See your Ultra Messaging representative for licensing specifics.

Note: Transport LBT-RDMA is not supported on the HP NonStop platform.

When you create a source with lbm_src_create() and you've set the transport option to RDMA, UM creates a shared memory area object on the sending machine's Host Channel Adapter (HCA) card. UM assigns this area one of the RDMA transport ports from the range specified by the UM context configuration options transport_lbtrdma_port_low and transport_lbtrdma_port_high. You can also specify a shared memory location outside of this range with a source configuration option, transport_lbtrdma_port, to prioritize certain topics, if needed.

When you create a receiver with lbm_rcv_create() for a topic being sent over LBT-RDMA, UM creates a shared memory area on the receiving machine's HCA card. The network hardware immediately copies any new data from the sending HCA to the receiving HCA. UM receivers monitor the receiving shared memory area for new topic messages. You configure receiver monitoring with transport_lbtrdma_receiver_thread_behavior.

3.6.6.1. LBT-RDMA Object Diagram

The following diagram illustrates how sources and receivers interact with the shared memory area used in the LBT-RDMA transport.

3.6.6.2. Similarities with Other UMS Transports

With LBT-RDMA, UM functions in much the same way as it does with other UM transports that send packets across a traditional Ethernet network.

If you use a range of ports, UM assigns multiple topics that have been sent by multiple sources in a round-robin manner to all the transport sessions configured by the port range.

Transport sessions assume the configuration option values of the first source assigned to the transport session.

Sources are subject to message batching.

Topic resolution operates with LBT-RDMA exactly as it does with other UM transports, albeit with a new advertisement type, LBMRDMA.

3.6.6.3. Differences from Other UMS Transports

Unlike LBT-RM, which uses a transmission window to specify a buffer size to retain messages in case they must be retransmitted, LBT-RDMA uses the transmission window option to establish the size of the shared memory.

LBT-RDMA does not retransmit messages. Since LBT-RDMA transport is essentially a memory write/read operation, messages should not be lost in transit. However, if the shared memory area fills up, new messages overwrite old messages and the loss is absolute. No retransmission of old messages that have been overwritten occurs.

Receivers also do not send NAKs when using LBT-RDMA.

LBT-RDMA does not support Ordered Delivery. However, if you set ordered_delivery 1 or -1, LBT-RDMA reassembles any large messages.

LBT-RDMA does not support Rate Control.

LBT-RDMA creates a separate receiver thread in the receiving context.
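
The overwrite behavior noted above (new messages replace old ones once the shared memory area is full, with no retransmission) can be illustrated with a bounded buffer. This is a toy model under stated assumptions: the `SharedArea` class is hypothetical, capacity is counted in message slots rather than bytes, and sequence numbers are added only so the reader can detect the gap.

```python
from collections import deque


class SharedArea:
    """Toy fixed-capacity area: the writer never blocks, and the oldest
    entries are overwritten when the area is full. Not UM code."""

    def __init__(self, capacity):
        self._slots = deque(maxlen=capacity)  # drops the oldest on overflow
        self._next_seq = 0

    def write(self, payload):
        self._slots.append((self._next_seq, payload))
        self._next_seq += 1

    def read_from(self, next_expected):
        """Return (messages, lost_count) for a reader that expects
        sequence number next_expected next."""
        available = [(seq, p) for seq, p in self._slots if seq >= next_expected]
        if not available:
            return [], 0
        # Anything between next_expected and the oldest surviving entry
        # was overwritten and cannot be recovered.
        lost = available[0][0] - next_expected
        return available, lost


area = SharedArea(capacity=4)
for i in range(6):  # write 6 messages into 4 slots before the reader runs
    area.write(f"msg-{i}")

messages, lost = area.read_from(0)
print("lost:", lost)
print("readable:", [seq for seq, _ in messages])
```

A reader that falls behind by more than the capacity of the area sees a gap it cannot fill, which is why the size of the area matters for slow receivers.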

4. Architecture

UM is designed to be a flexible architecture. Unlike many messaging systems, UM does not require an intermediate daemon to handle routing issues or protocol processing. This increases the performance of UM and returns to applications the valuable computation time and memory that messaging daemons would normally consume.

This section discusses the following topics.

4.1. Embedded Mode

When you create a context (lbm_context_create()) with the operational_mode set to embedded (the default), UM creates an independent thread, called the context thread, which handles timer and socket events, and does protocol-level processing, like retransmission of dropped packets.

4.2. Sequential Mode

When you create a context (lbm_context_create()) with the operational_mode set to sequential, the context thread is NOT created. It becomes the application's responsibility to donate a thread to UM by calling lbm_context_process_events() regularly, typically in a tight loop. Use Sequential mode for circumstances where your application wants control over the attributes of the context thread. For example, some applications raise the priority of the context thread so as to obtain more consistent latencies.

In sequential mode, no separate thread is spawned when a context is created.

You enable Sequential mode with the following configuration option.

context operational_mode sequential
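
The difference between the two modes comes down to which thread runs the event processing. The sketch below is a toy model only and does not use the UM API: `ToyContext`, `post()`, `process_events()`, and `close()` are hypothetical names chosen for illustration. In the embedded case the object starts its own worker thread; in the sequential case nothing runs until the application calls `process_events()` itself.

```python
import queue
import threading


class ToyContext:
    """Hypothetical stand-in for a messaging context; not the UM API."""

    def __init__(self, operational_mode="embedded"):
        if operational_mode not in ("embedded", "sequential"):
            raise ValueError("unknown operational_mode")
        self.operational_mode = operational_mode
        self.handled = []  # (thread name, event) pairs, for inspection
        self._events = queue.Queue()
        self._stop = threading.Event()
        self._thread = None
        if operational_mode == "embedded":
            # Embedded: the context owns a thread that processes events.
            self._thread = threading.Thread(target=self._run, daemon=True)
            self._thread.start()

    def post(self, event):
        self._events.put(event)

    def process_events(self, timeout_s):
        """Wait up to timeout_s for an event, then handle everything
        that is queued. Returns the number of events handled."""
        try:
            event = self._events.get(timeout=timeout_s)
        except queue.Empty:
            return 0
        count = 0
        while True:
            self.handled.append((threading.current_thread().name, event))
            count += 1
            try:
                event = self._events.get_nowait()
            except queue.Empty:
                return count

    def _run(self):
        while not self._stop.is_set():
            self.process_events(0.05)

    def close(self):
        self._stop.set()
        if self._thread is not None:
            self._thread.join()


# Sequential: the application donates its own thread.
ctx = ToyContext("sequential")
ctx.post("timer")
ctx.post("socket readable")
count = ctx.process_events(0.1)  # runs on the calling thread
print("handled", count, "events on", ctx.handled[0][0])
ctx.close()
```

Because the application owns the calling thread in the sequential case, it can set that thread's priority or affinity before entering its processing loop, which is the motivation given above.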

4.3. Topic Resolution

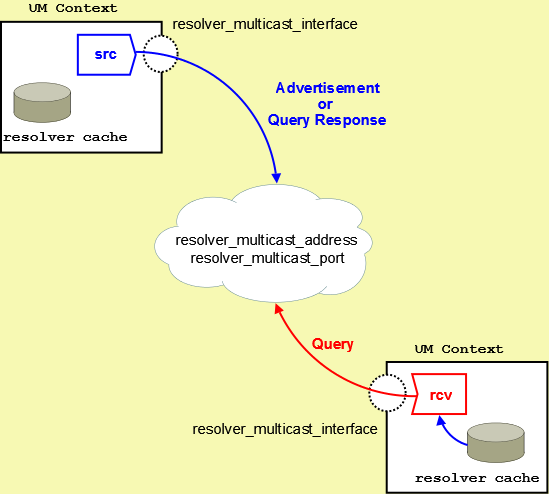

Topic resolution is the discovery of a topic's transport session information by a receiver to enable the receipt of topic messages. By default, UM relies on multicast requests and responses to resolve topics to transport sessions. (You can also use unicast requests and responses, if needed.) UM receivers multicast their topic requests, or queries, to an IP multicast address and UDP port (resolver_multicast_address and resolver_multicast_port). UM sources also multicast their advertisements and responses to receiver queries to the same multicast address and UDP port.

Topic Resolution offers three distinct phases that can be implemented.

Initial Phase - Period that allows you to resolve a topic aggressively. Can be used to resolve all known topics before message sending begins. This phase can be configured to run differently from the defaults or completely disabled.

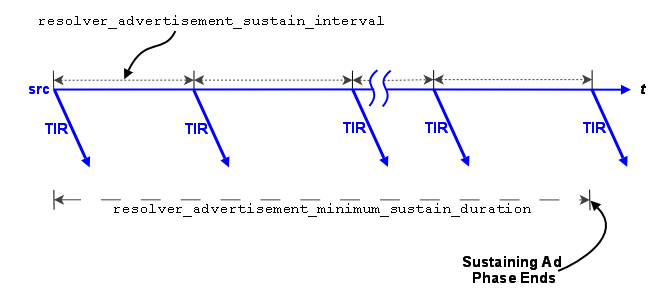

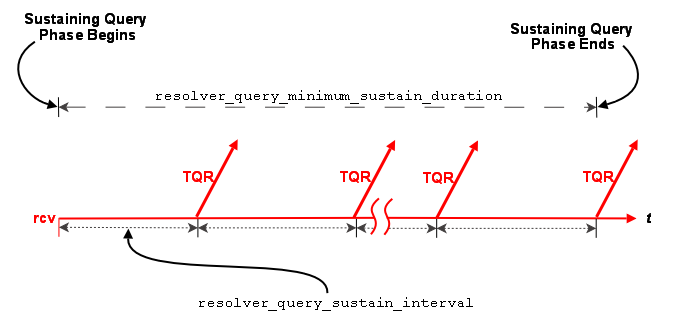

Sustaining Phase - Period that allows new receivers to resolve a topic after the Initial Phase. Can also be the primary period of topic resolution if you disable the Initial Phase. This phase can also be configured to run differently from the defaults or completely disabled.

Quiescent Phase - The "steady state" period during which a topic is resolved and UM uses no system resources for topic resolution.

This section discusses the following topics.

4.3.1. Multicast Topic Resolution

The following diagram depicts UM topic resolution using multicast.

UM performs topic resolution automatically. Your application does not need to call any API functions to initiate topic resolution; however, you can influence topic resolution with Topic Resolution Configuration Options. Moreover, you can set configuration options for individual topics by using the lbm_*_attr_setopt() functions in your application. See Assigning Different Configuration Options to Individual Topics.

Note: Multicast topic resolution traffic can use Datagram Bypass Layer (DBL) acceleration in conjunction with DBL-enabled Myricom 10-Gigabit Ethernet NICs for Linux and Microsoft Windows. DBL is a kernel-bypass technology that accelerates sending and receiving UDP traffic. See Transport Acceleration Options for more information.

Note: Multicast Topic Resolution is not supported directly on the HP NonStop platform, but can be run on a different host within your network, supplying topic resolution services to sources and receivers running on the HP NonStop platform.

4.3.1.1. Sources Advertise

UM sources help UM receivers discover transport information in the following ways.

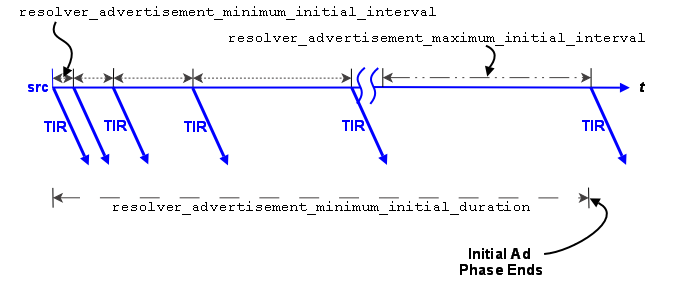

Advertise Active Topics - Each source advertises its active topic first upon its creation and subsequently according to the resolver_advertisement_*_interval configuration options for the Initial and Sustaining Phases. Sources advertise by sending a Topic Information Record (TIR). (You can prevent a source from sending an advertisement upon creation with resolver_send_initial_advertisement.)

Respond to Topic Queries - Each source responds immediately to queries from receivers about its topic.

Both a topic advertisement and a query response contain the topic's transport session information. Based on the transport type, a receiver can join the appropriate multicast group (for LBT-RM), send a connection request (for LBT-RU), connect to the source (for TCP) or access a shared memory area (for LBT-IPC). The address and port information potentially contained within a TIR includes:

For a TCP transport, the source address and TCP port.

For an LBT-RM transport, the unicast UDP port (to which NAKs are sent) and the UDP destination port.

For an LBT-RU transport, the source address and UDP port.

For an LBT-IPC transport, the Host ID, LBT-IPC Session ID and Transport ID.

For the UM Gateway, the context instance and Domain ID of the original source plus the Hop Count and Portal Cost. See Forwarding Costs.

For various UMP options, the store address and TCP port, and the source address and TCP port (to which receivers send delivery confirmations).

For UMQ, the Queue Name, which allows the receiver to then resolve the Queue in order to receive queued messages.
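
The way a receiver acts on an advertisement depends on the transport type it carries. The sketch below models that dispatch for the four transports named above. It is an illustration only: `TopicInfoRecord` and its field names are hypothetical and do not describe the actual TIR wire format, and the `host_id` value is a placeholder.

```python
from dataclasses import dataclass, field

# Receiver behavior per transport type, as described in the text above.
RECEIVER_ACTIONS = {
    "TCP": "connect to the source",
    "LBT-RM": "join the multicast group",
    "LBT-RU": "send a connection request",
    "LBT-IPC": "access the shared memory area",
}


@dataclass
class TopicInfoRecord:
    """Hypothetical in-memory view of an advertisement; not the wire format."""

    topic: str
    transport: str
    session_info: dict = field(default_factory=dict)


def receiver_action(tir):
    """Return what a receiver does after resolving this advertisement."""
    try:
        return RECEIVER_ACTIONS[tir.transport]
    except KeyError:
        raise ValueError(f"unsupported transport type: {tir.transport}") from None


tir = TopicInfoRecord(
    topic="Topic A",
    transport="LBT-IPC",
    # Placeholder values; the field names are assumptions.
    session_info={"host_id": "placeholder", "session_id": "ab569cec", "transport_id": 20001},
)
print(tir.topic, "->", receiver_action(tir))
```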

4.3.1.2. Receivers Query

Receivers can discover transport information in the following ways.

Search advertisements collected in the resolver cache maintained by the UM context.

Listen for source advertisements on the resolver_multicast_address:port.

Send a topic query (TQR).

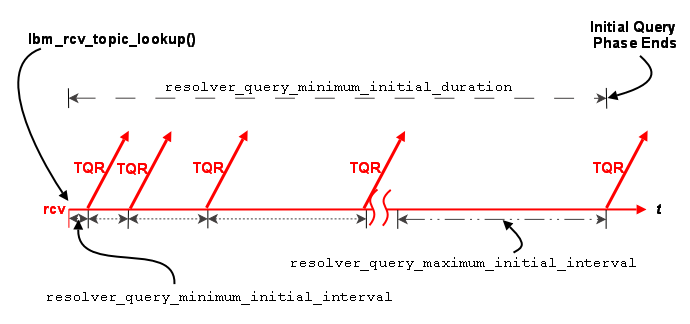

A new receiver queries for its topic according to the resolver_query_*_interval configuration options for the Initial and Sustaining Phases.

Note: The resolver_query_minimum_initial_interval actually begins after you call lbm_rcv_topic_lookup() prior to creating the receiver. If you have disabled the Initial Phase for the topic's resolution, the resolver_query_sustaining_interval begins after you call lbm_rcv_topic_lookup().

A Topic Query Record (TQR) consists primarily of the topic string. Receivers continue querying on a topic until they discover the number of sources configured by resolution_number_of_sources_query_threshold. However, the large default of this configuration option (10,000,000) allows a receiver to continue to query until both the initial and sustaining phases of topic resolution complete.
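
The stopping rule for queries can be stated as a small predicate. This is a sketch of the logic described above, not UM code: the function name and parameters are hypothetical, and the default is the value quoted in the text.

```python
DEFAULT_QUERY_THRESHOLD = 10_000_000  # default quoted in the text


def should_keep_querying(sources_discovered, phases_complete,
                         threshold=DEFAULT_QUERY_THRESHOLD):
    """A receiver keeps querying until it has discovered `threshold`
    sources or the initial and sustaining phases have both completed."""
    return sources_discovered < threshold and not phases_complete


# With the default threshold, only phase completion stops the queries.
print(should_keep_querying(sources_discovered=3, phases_complete=False))
print(should_keep_querying(sources_discovered=3, phases_complete=True))
# With a threshold of 1, the first discovered source stops the queries.
print(should_keep_querying(sources_discovered=1, phases_complete=False, threshold=1))
```

Lowering the threshold is the case where querying ends early; with the default, the threshold is effectively never reached and the phase timers decide.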

4.3.1.3. Wildcard Receivers

Wildcard receivers can discover transport information in the following ways.

Search advertisements collected in the resolver cache maintained by the UM context.

Listen for source advertisements on the resolver_multicast_address:port.

Send a wildcard receiver topic query (WC-TQR).

UM implements only one phase of wildcard receiver queries, sending wildcard receiver queries according to wildcard receiver resolver_query_*_interval configuration options until the topic pattern has been queried for the resolver_query_minimum_duration. The wildcard receiver topic query (WC-TQR) contains the topic pattern and the pattern_type.

4.3.2. Topic Resolution Phases

The phases of topic resolution pertain to individual topics. Therefore, if your system has 100 topics, 100 different topic resolution advertisement and query phases may be running concurrently. This section describes the three phases of Ultra Messaging topic resolution.

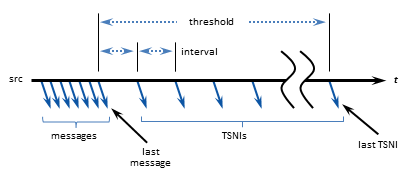

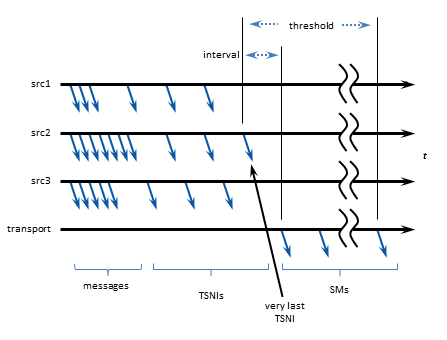

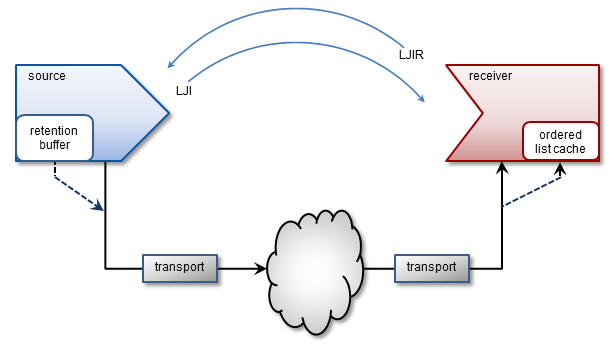

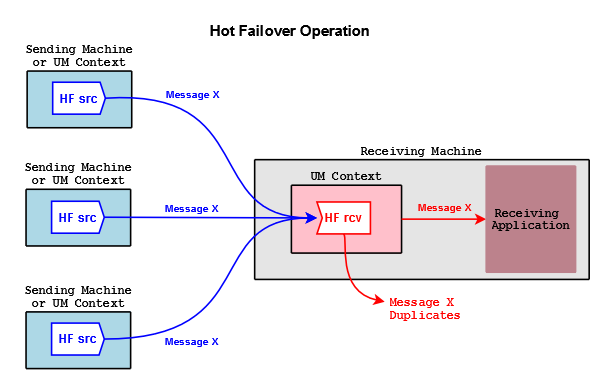

4.3.2.1. Initial Phase