Basic DRO Operation <-

The DRO's routing algorithm is said to be "interest-based". That is, subscribers express interest in topic names and/or wildcard topic patterns. The DRO network maintains lists of topics and patterns for each TRD, and routes messages accordingly.

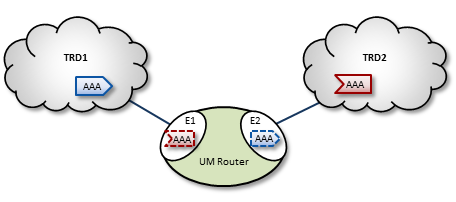

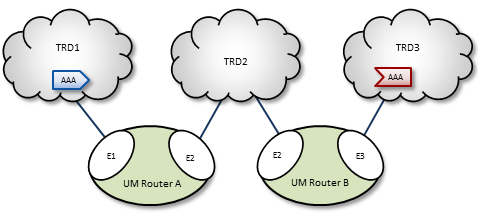

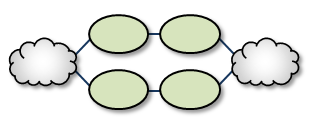

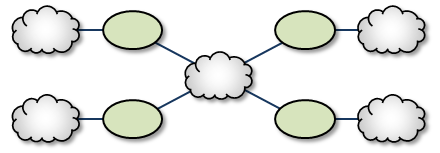

The diagram below shows a DRO bridging topic resolution domains TRD1 and TRD2, for topic AAA, in a direct link configuration. Endpoint E1 contains a proxy receiver for topic AAA and endpoint E2 has a proxy source for topic AAA.

In an already-running DRO, topic resolution for the example shown in the diagram typically proceeds as follows.

- A receiver in TRD2 issues a TQR (Topic Query Record) for topic AAA.

- Portal E2 receives the TQR and passes information about topic AAA to all other portals in the DRO. (In this case, E1 is the only other portal.)

- E1 immediately responds with three actions: a) create a proxy receiver for topic AAA, b) the new proxy receiver sends a TQR for AAA into TRD1, and c) E1 issues a Topic Interest message into TRD1 for the benefit of any other DROs that may be connected to that domain.

- A source for topic AAA in TRD1 sees the TQR and issues a TIR (Topic Information Record).

- E1's AAA proxy receiver sees the TIR and requests that E2 (and any other interested portals in the DRO, if there were any) create a proxy source for AAA.

- E2 creates proxy source AAA, which then issues a TIR to TRD2. The receiver in TRD2 joins the transport, thus completing topic resolution.
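The sequence above can be modeled as a small event-driven sketch. It uses a toy model with hypothetical `Portal` and `Dro` classes (not a UM API) in which TQRs and TIRs are reduced to method calls, and it only tracks which proxy receivers and proxy sources get created.

```python
from dataclasses import dataclass, field


@dataclass
class Portal:
    """An endpoint portal attached to one TRD (toy model, not a UM API)."""
    name: str
    trd: str
    interest: set = field(default_factory=set)          # topics wanted in this TRD
    proxy_receivers: set = field(default_factory=set)
    proxy_sources: set = field(default_factory=set)


class Dro:
    """Hypothetical model of interest-based resolution inside one DRO."""

    def __init__(self, *portals):
        self.portals = {p.name: p for p in portals}

    def on_tqr(self, portal_name, topic):
        """A receiver in this portal's TRD queried for `topic`."""
        ingress = self.portals[portal_name]
        ingress.interest.add(topic)
        # Pass the interest to every other portal, which creates a proxy
        # receiver (in UM that proxy receiver then sends its own TQR, and the
        # portal sends a Topic Interest message, into its TRD).
        for other in self.portals.values():
            if other is not ingress:
                other.proxy_receivers.add(topic)

    def on_tir(self, portal_name, topic):
        """A source in this portal's TRD advertised `topic`."""
        ingress = self.portals[portal_name]
        if topic not in ingress.proxy_receivers:
            return  # no proxy receiver here, so no downstream interest
        # Ask every interested portal to create a proxy source, which then
        # sends its own TIR so that local receivers can join the transport.
        for other in self.portals.values():
            if other is not ingress and topic in other.interest:
                other.proxy_sources.add(topic)


# Direct-link example from the text: source in TRD1, receiver in TRD2.
e1, e2 = Portal("E1", "TRD1"), Portal("E2", "TRD2")
dro = Dro(e1, e2)
dro.on_tqr("E2", "AAA")   # receiver in TRD2 queries
dro.on_tir("E1", "AAA")   # source in TRD1 answers the proxy receiver's TQR
print(sorted(e1.proxy_receivers), sorted(e2.proxy_sources))
```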

Interest and Topic Resolution <-

As mentioned in Basic DRO Operation, the DRO's routing algorithm is "interest-based". The DRO uses UM's Topic Resolution (TR) protocol to discover and maintain the interest tables.

For TCP-based TR, the SRS informs DROs of receiver topics and wildcard receiver patterns.

For UDP-based TR, the application's TR queries are used to inform DROs of its receiver topics and wildcard receiver patterns.

- Attention

- If using UDP-based TR, do not disable querying, as that would prevent the DRO from discovering topic and pattern interest.

Interest and Use Queries <-

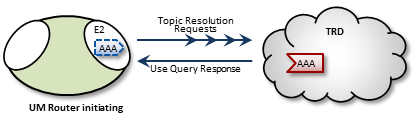

When a DRO starts, its endpoint portals issue a brief series of Topic Resolution Request messages to their respective topic resolution domains. This provokes quiescent receivers (and wildcard receivers) into sending Use Query Responses, indicating interest in various topics. Each portal then records this interest.

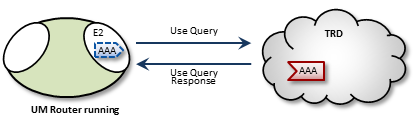

After a DRO has been running, endpoint portals issue periodic Topic Use Queries and Pattern Use Queries (collectively referred to as simply Use Queries). Use Query Responses from UM contexts confirm that the receivers for these topics indeed still exist, thus maintaining these topics on the interest list. Autonomous TQRs also refresh interest and have the effect of suppressing the generation of Use Queries.

In the case of multi-hop DRO configurations, DROs cannot detect interest for remote contexts via Use Queries or TQRs. They do this instead via Interest Messages. An endpoint portal generates periodic interest messages, which are picked up by adjacent DROs (i.e., the next hop over), at which time interest is refreshed.

You can adjust intervals, limits, and durations for these topic resolution and interest mechanisms via DRO configuration options (see DRO Configuration Reference).
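A minimal sketch of how a portal might keep its interest list alive is shown here. It assumes a single hypothetical expiry period (`timeout`) and query interval (`query_interval`) in place of the DRO's actual configuration options. Each of the refresh sources described above (Use Query Responses, autonomous TQRs, Interest Messages) simply updates a timestamp, and entries that stop being refreshed eventually expire.

```python
class InterestTable:
    """Per-portal interest list keyed by topic name or wildcard pattern (sketch)."""

    def __init__(self, timeout):
        self.timeout = timeout      # hypothetical expiry period, in seconds
        self._last_refresh = {}     # topic or pattern -> time of last refresh

    def refresh(self, key, now):
        """Record a Use Query Response, autonomous TQR, or Interest Message."""
        self._last_refresh[key] = now

    def needs_use_query(self, key, now, query_interval):
        """A recent refresh (for example an autonomous TQR) suppresses the next Use Query."""
        last = self._last_refresh.get(key)
        return last is None or now - last >= query_interval

    def expire(self, now):
        """Drop and return entries that have not been refreshed within `timeout`."""
        stale = [k for k, t in self._last_refresh.items() if now - t > self.timeout]
        for key in stale:
            del self._last_refresh[key]
        return stale

    def __contains__(self, key):
        return key in self._last_refresh


table = InterestTable(timeout=60.0)
table.refresh("AAA", now=0.0)      # Use Query Response for topic AAA
table.refresh("^BB", now=10.0)     # pattern interest from a wildcard receiver
table.refresh("AAA", now=50.0)     # autonomous TQR refreshes AAA
print(table.needs_use_query("AAA", now=55.0, query_interval=30.0))
print(table.expire(now=80.0))      # the pattern was never refreshed again
```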

DRO Keepalive <-

To maintain a reliable connection, peer portals exchange DRO Keepalive signals. Keepalive intervals and connection timeouts are configurable on a per-portal basis. You can also set the DRO to send keepalives only when traffic is idle, which is the default condition. When both traffic and keepalives go silent at a portal ingress, the portal considers the connection lost and disconnects the TCP link. After the disconnect, the portal tries to reconnect. See <gateway-keepalive>.
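The keepalive behavior can be sketched as a periodic check on each peer connection. This is a minimal model that assumes hypothetical `keepalive_interval` and `timeout` values (the real per-portal settings are in the DRO configuration reference) and assumes that "idle" means no outbound traffic during one keepalive interval.

```python
class PeerLink:
    """One peer portal connection with idle-only keepalives (sketch)."""

    def __init__(self, keepalive_interval, timeout, now=0.0):
        self.keepalive_interval = keepalive_interval
        self.timeout = timeout
        self.last_sent = now       # last outbound data or keepalive
        self.last_received = now   # last inbound data or keepalive
        self.connected = True

    def on_send(self, now):
        self.last_sent = now

    def on_receive(self, now):
        self.last_received = now

    def tick(self, now):
        """Return the action the portal would take at time `now`."""
        if not self.connected:
            return "reconnect"
        if now - self.last_received > self.timeout:
            # Neither traffic nor keepalives arrived: drop the TCP link.
            self.connected = False
            return "disconnect"
        if now - self.last_sent >= self.keepalive_interval:
            # Outbound side idle for a full interval: send a keepalive.
            self.last_sent = now
            return "keepalive"
        return "idle"


link = PeerLink(keepalive_interval=5.0, timeout=15.0)
actions = [link.tick(5.0)]
link.on_send(7.0)                  # data traffic suppresses the next keepalive
actions.append(link.tick(10.0))
actions.append(link.tick(16.0))    # nothing received for more than 15 s
actions.append(link.tick(17.0))
print(actions)
```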

Final Advertisements <-

DRO proxy sources on endpoint portals, when deleted, send out a series of final advertisements. A final advertisement tells any receivers, including proxy receivers on other DROs, that the particular source has gone away. This triggers EOS and clean-up activities on the receiver relative to that specific source, which causes the receiver to begin querying according to its topic resolution configuration for the sustaining phase of querying.

In short, final advertisements let receivers detect that a source has gone away sooner than they would by waiting for a transport timeout. This allows a faster transition to an alternative proxy source on a different DRO if the routing path changes.

More About Proxy Sources and Receivers <-

The domain-id is used by Interest Messages and other internal and DRO-to-DRO traffic to ensure forwarding of all messages (payload and topic resolution) to the correct recipients. This also has the effect of not creating proxy sources/receivers where they are not needed. Thus, DROs create proxy sources and receivers based solely on receiver interest.

If more than one source sends on a given topic, the receiving portal's single proxy receiver for that topic receives all messages sent on that topic. The sending portal, however, creates a proxy source for every source sending on the topic. The DRO maintains a table of proxy sources, each keyed by an Originating Transport ID (OTID), enabling the proxy receiver to forward each message to the correct proxy source. An OTID uniquely identifies a source's transport session, and is included in topic advertisements.
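A minimal sketch of this fan-out, using hypothetical `ProxyReceiver` and `ProxySource` classes in which the egress send is replaced by appending to a list. For brevity it collapses the receiving portal's proxy receiver and the sending portal's proxy sources into one object.

```python
class ProxySource:
    """Stand-in for one proxy source on the sending portal."""

    def __init__(self, topic, otid):
        self.topic = topic
        self.otid = otid
        self.sent = []             # stand-in for the egress transport

    def send(self, payload):
        self.sent.append(payload)


class ProxyReceiver:
    """Single proxy receiver for a topic; proxy sources are keyed by OTID."""

    def __init__(self, topic):
        self.topic = topic
        self.proxy_sources = {}    # OTID -> ProxySource

    def on_tir(self, otid):
        """Advertisement from an originating source: one proxy source per OTID."""
        if otid not in self.proxy_sources:
            self.proxy_sources[otid] = ProxySource(self.topic, otid)
        return self.proxy_sources[otid]

    def on_message(self, otid, payload):
        """Forward a message to the proxy source that mirrors its originator."""
        self.proxy_sources[otid].send(payload)


rcv = ProxyReceiver("AAA")
for otid in (b"otid-1", b"otid-2", b"otid-1"):   # two sources, one TIR repeated
    rcv.on_tir(otid)
rcv.on_message(b"otid-1", b"from source 1")
rcv.on_message(b"otid-2", b"from source 2")
print(len(rcv.proxy_sources))
```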

Protocol Conversion <-

When an application creates a source, it is configured to use one of the UM transport types. When a DRO is deployed, the proxy sources are also configured to use one of the UM transport types. Although users often use the same transport type for sources and proxy sources, this is not necessary. When different transport types are configured for source and proxy source, the DRO is performing a protocol conversion.

When this is done, it is very important to configure the transports to use the same maximum datagram size. If you don't, the DRO can drop messages which cannot be recovered through normal means. For example, a source in Topic Resolution Domain 1 might be configured for TCP, which has a default maximum datagram size of 65536. If a DRO's remote portal is configured to create LBT-RU proxy sources, that has a default maximum datagram size of 8192. If the source sends a user message of 10K, the TCP source will send it as a single fragment. The DRO will receive it and will attempt to forward it on an LBT-RU proxy source, but the 10K fragment is too large for LBT-RU's maximum datagram size, so the message will be dropped.

See Message Fragmentation and Reassembly.
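The arithmetic in the example can be checked with a short sketch. It assumes each fragment is at most the ingress transport's maximum datagram size and ignores UM header overhead, so the byte counts are approximate; the function names are illustrative only.

```python
def fragment_sizes(message_len, datagram_max):
    """Split a message into fragment lengths of at most `datagram_max` bytes.

    Simplification: UM header overhead inside each datagram is ignored.
    """
    full, rest = divmod(message_len, datagram_max)
    return [datagram_max] * full + ([rest] if rest else [])


def survives_conversion(message_len, ingress_max, egress_max):
    """True if every fragment received by the DRO fits the proxy source's transport."""
    return all(size <= egress_max for size in fragment_sizes(message_len, ingress_max))


TCP_DEFAULT, LBTRU_DEFAULT = 65536, 8192   # default sizes quoted in the text
ten_k = 10 * 1024

print(fragment_sizes(ten_k, TCP_DEFAULT))                      # one 10K fragment
print(survives_conversion(ten_k, TCP_DEFAULT, LBTRU_DEFAULT))  # dropped on LBT-RU
print(survives_conversion(ten_k, 8192, LBTRU_DEFAULT))         # sizes aligned: fits
```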

The solution is to override the default maximum datagram sizes to be the same. Informatica generally does not recommend configuring UDP-based transports for datagram sizes above 8K, so it is advisable to set the maximum datagram sizes of all transport types to 8192, like this:

```
context transport_tcp_datagram_max_size 8192
context transport_lbtrm_datagram_max_size 8192
context transport_lbtru_datagram_max_size 8192
context transport_lbtipc_datagram_max_size 8192
source transport_lbtsmx_datagram_max_size 8192
```

Note that users of a kernel bypass network driver (e.g. Solarflare's Onload) frequently want to avoid all IP fragmentation, and therefore want to set their datagram max sizes to an MTU. See Datagram Max Size and Network MTU and Dynamic Fragmentation Reduction.

Configuration options: transport_tcp_datagram_max_size (context), transport_lbtrm_datagram_max_size (context), transport_lbtru_datagram_max_size (context), transport_lbtipc_datagram_max_size (context), and transport_lbtsmx_datagram_max_size (source).

Final note: the resolver_datagram_max_size (context) option also needs to be made the same in all instances of UM, including DROs.
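When many applications and DROs are involved, a small script can help catch mismatches. This is a minimal sketch that assumes each UM instance's options are available as flat-file text with one `scope option value` triple per line and that `#` starts a comment; the instance names and the `parse_sizes` and `problems` helpers are hypothetical.

```python
def parse_sizes(config_text):
    """Return {option: int} for every *datagram_max_size option in the text."""
    sizes = {}
    for raw in config_text.splitlines():
        line = raw.split("#", 1)[0].strip()   # assumption: '#' starts a comment
        parts = line.split(None, 2)
        if len(parts) < 3:
            continue                          # blank or malformed line
        _scope, option, value = parts
        if option.endswith("datagram_max_size"):
            sizes[option] = int(value)
    return sizes


def problems(configs):
    """`configs` maps an instance name (application or DRO) to its config text."""
    parsed = {name: parse_sizes(text) for name, text in configs.items()}
    issues = []
    # Transport datagram sizes should match so protocol conversion never drops.
    transport = {v for sizes in parsed.values()
                 for k, v in sizes.items() if k.startswith("transport_")}
    if len(transport) > 1:
        issues.append(f"transport datagram sizes differ: {sorted(transport)}")
    # resolver_datagram_max_size should be identical in every instance
    # (an unset value counts as different from an explicit one).
    resolver = {name: sizes.get("resolver_datagram_max_size")
                for name, sizes in parsed.items()}
    if len(set(resolver.values())) > 1:
        issues.append(f"resolver_datagram_max_size differs: {resolver}")
    return issues


app = """
context transport_tcp_datagram_max_size 8192
context resolver_datagram_max_size 8192
"""
# The DRO's proxy sources use LBT-RU, but its resolver size was left unset.
dro = """
context transport_lbtru_datagram_max_size 8192
"""
print(problems({"app": app, "dro": dro}))
```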

Multi-Hop Forwarding <-

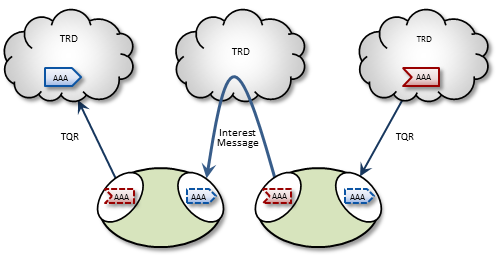

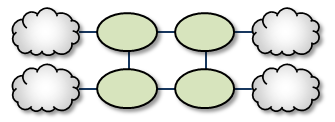

UM can resolve topics across a span of multiple DROs. Consider a simple example DRO deployment, as shown in the following figure.

In this diagram, DRO A has two endpoint portals connected to topic resolution domains TRD1 and TRD2. DRO B also has two endpoint portals, which bridge TRD2 and TRD3. Endpoint portal names reflect the topic resolution domain to which they connect. For example, DRO A endpoint E2 interfaces TRD2.

TRD1 has a source for topic AAA, and TRD3, an AAA receiver. The following sequence of events enables the forwarding of topic messages from source AAA to receiver AAA.

- Receiver AAA queries (issues a TQR).

- DRO B, endpoint E3 (B-E3) receives the TQR and passes information about topic AAA to all other portals in the DRO. In this case, B-E2 is the only other portal.

- In response, B-E2 creates a proxy receiver for AAA and sends a Topic Interest message for AAA into TRD2. The proxy receiver also issues a TQR, which in this case is ignored.

- DRO A, endpoint E2 (A-E2) receives this Topic Interest message and passes information about topic AAA to all other portals in the DRO. In this case, A-E1 is the only other portal.

- In response, A-E1 creates a proxy receiver for AAA and sends a Topic Interest message and TQR for AAA into TRD1.

- Source AAA responds to the TQR by sending a TIR for topic AAA. In this case, the Topic Interest message is ignored.

- The AAA proxy receiver created by A-E1 receives this TIR and requests that all DRO A portals with an interest in topic AAA create a proxy source for AAA.

- In response, A-E2 creates a proxy source, which sends a TIR for topic AAA via TRD2.

- The AAA proxy receiver at B-E2 receives this TIR and requests that all DRO B portals with an interest in topic AAA create a proxy source for AAA.

- In response, B-E3 creates a proxy source, which sends a TIR for topic AAA via TRD3. The receiver in TRD3 joins the transport.

- Topic AAA has now been resolved across both DROs, which forward all topic messages sent by source AAA to receiver AAA.
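The same pattern generalizes to longer chains. The sketch below is a simplified model, assuming a linear chain in which `dros[i]` bridges `trds[i]` and `trds[i + 1]`, with the source in the first TRD and the receiver in the last. It only lists the order of the events described above, and the function name is hypothetical.

```python
def resolve_chain(trds, dros, topic):
    """Return the ordered resolution events across a linear chain of DROs."""
    if len(dros) != len(trds) - 1:
        raise ValueError("need exactly one DRO between each pair of adjacent TRDs")
    events = [f"receiver in {trds[-1]} sends TQR for {topic}"]
    # Interest flows upstream. The DRO next to the receiver hears the TQR;
    # every other DRO hears the Topic Interest message sent by the next hop.
    for i in reversed(range(len(dros))):
        heard = "TQR" if i == len(dros) - 1 else "Topic Interest"
        events.append(f"{dros[i]} hears {heard} in {trds[i + 1]}, "
                      f"creates proxy receiver for {topic} in {trds[i]}")
    events.append(f"source in {trds[0]} answers with TIR for {topic}")
    # Advertisements flow downstream, one proxy source per hop.
    for i in range(len(dros)):
        events.append(f"{dros[i]} creates proxy source for {topic}, "
                      f"sends TIR into {trds[i + 1]}")
    events.append(f"receiver in {trds[-1]} joins the transport")
    return events


for event in resolve_chain(["TRD1", "TRD2", "TRD3"], ["DRO A", "DRO B"], "AAA"):
    print(event)
```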

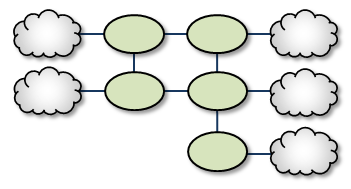

Routing Wildcard Receivers <-

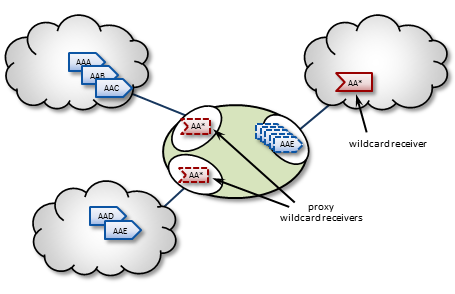

The DRO supports topic resolution for wildcard receivers in a manner very similar to non-wildcard receivers. Wildcard receivers in a TRD issuing a WC-TQR cause corresponding proxy wildcard receivers to be created in portals, as shown in the following figure. The DRO creates a single proxy source for pattern match.
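A minimal sketch of a proxy wildcard receiver follows. It assumes the pattern is a regular expression (Python's `re` stands in for UM's pattern matching here) and interprets "a single proxy source for pattern match" as at most one proxy source per matching topic. The class and method names are hypothetical.

```python
import re


class ProxyWildcardReceiver:
    """Portal-side stand-in for wildcard receivers in another TRD (sketch)."""

    def __init__(self, pattern):
        self.regex = re.compile(pattern)
        self.proxy_sources = set()   # topics that already have a proxy source

    def on_tir(self, topic):
        """Handle an advertisement; return True if the topic matches the pattern."""
        if self.regex.search(topic) is None:
            return False
        self.proxy_sources.add(topic)   # a set, so repeated TIRs add nothing
        return True


wc = ProxyWildcardReceiver(r"^AA")
for topic in ("AAA", "AAB", "AAA", "BBB"):
    wc.on_tir(topic)
print(sorted(wc.proxy_sources))
```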

Forwarding Costs <-

Forwarding a message through a DRO incurs a cost in terms of latency, network bandwidth, and CPU utilization on the DRO machine (which may in turn affect the latency of other forwarded messages). Transiting multiple DROs adds even more cumulative latency to a message. Other DRO-related factors such as portal buffering, network bandwidth, switches, etc., can also add latency.

Factors other than latency contribute to the cost of forwarding a message. Consider a message that can be sent from one domain to its destination domain over one of two paths. A three-hop path over 1Gbps links may be faster than a single-hop path over a 100Mbps link. Further, it may be the case that the 100Mbps link is more expensive or less reliable.

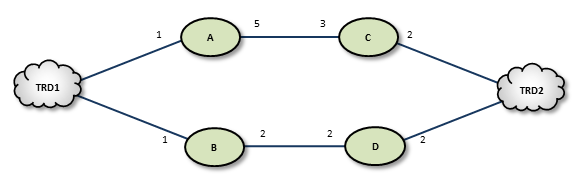

You assign forwarding cost values on a per-portal basis. When summed over a path, these values determine the cost of that entire path. A network of DROs uses forwarding cost as the criterion for determining the best path over which to resolve a topic.

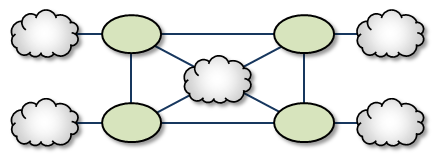

DRO Routing <-

DROs have an awareness of other DROs in their network and how they are linked. Thus, they each maintain a topology map, which is periodically confirmed and updated. This map also includes forwarding cost information.

Using this information, the DROs can cooperate during topic resolution to determine the best (lowest cost) path over which to resolve a topic or to route control information. They do this by totaling the costs of all portals along each candidate route, then comparing the totals.

For example, the following figure shows two possible paths from TRD1 to TRD2: A-C (total route cost of 11) and B-D (total route cost of 7). In this case, the DROs select path B-D.

If a DRO or link along path B-D should fail, the DROs detect this and reroute over path A-C. Similarly, if an administrator revises cost values along path B-D to exceed a total of 11, the DROs reroute to A-C.

If the DROs find more than one path with the same lowest total cost value, i.e., a "tie", they select the path based on a node-ID selection algorithm. Since administrators do not have access to node IDs, this will appear to be a pseudo-random selection.
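The route selection described above can be sketched as follows. Routes are lists of `(dro, portal_cost)` hops with illustrative costs chosen only to reproduce the totals of 11 and 7 from the example. The node IDs are hypothetical, and the tie-break rule used here (lowest sorted node IDs) is only a stand-in, since UM's node-ID selection algorithm is not exposed to administrators.

```python
def route_cost(hops):
    """Total route cost: the sum of the cost of every portal along the route."""
    return sum(cost for _, cost in hops)


def select_route(routes, node_id, failed=frozenset()):
    """Return the name of the lowest-cost route that avoids failed DROs, or None."""
    usable = {name: hops for name, hops in routes.items()
              if not any(dro in failed for dro, _ in hops)}
    if not usable:
        return None
    # Lowest total cost wins; ties fall back to a (hypothetical) node-ID comparison.
    return min(usable, key=lambda name: (route_cost(usable[name]),
                                         sorted(node_id[dro] for dro, _ in usable[name])))


routes = {
    "A-C": [("A", 3), ("A", 2), ("C", 4), ("C", 2)],   # total 11
    "B-D": [("B", 1), ("B", 2), ("D", 3), ("D", 1)],   # total 7
}
node_id = {"A": 40, "B": 17, "C": 93, "D": 58}          # hypothetical IDs

print(select_route(routes, node_id))                    # lowest cost
print(select_route(routes, node_id, failed={"D"}))      # reroute after failure
```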

- Note

- You cannot configure parallel paths (such as for load balancing or Hot Failover), as the DROs always select the lowest-cost path and only the lowest-cost path for all data between two points. However, you can devise an exception to this rule by configuring the destinations to be in different TRDs. For example, you can create an HFX Receiver bridging two receivers in different TRD contexts. The DROs route to both TRDs, and the HFX Receiver merges to a single stream for the application.

DRO Hotlinks <-

The DRO "hotlink" feature is intended for large UM deployments where multiple datacenters are interconnected by two independent global networks. The function of DRO Hotlinks is to implement a form of Hot Failover (HF) whereby two copies of each message are sent in parallel over the two global networks from a publishing datacenter to subscribing datacenters. The subscribing process will normally receive both copies of each message, but UM will deliver the first one it receives and filter out the second.

The purpose of this is to provide high availability in the face of failure of the global network. It is unlikely that both global networks will fail at the same time, so if one does fail, the messages flowing over the other network will provide connectivity without the need to perform an explicit "fail over" operation (which can introduce packet loss and latency).

Hotlinks: Logical Interpretation <-

Hotlinks operate on a Topic Resolution Domain ("TRD") basis.

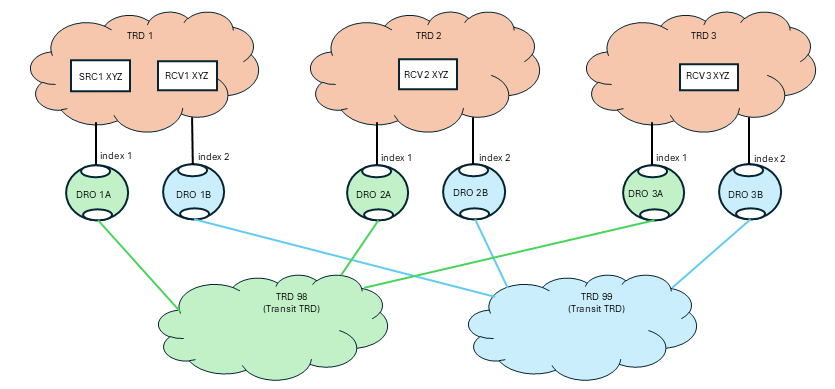

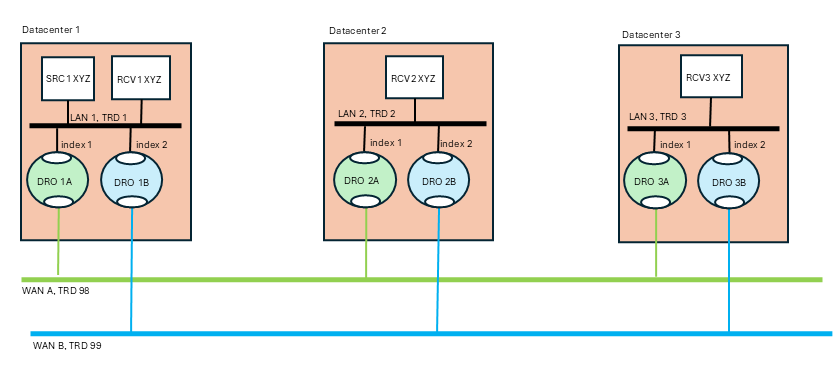

The primary job of the DRO is to connect TRDs together. In the above diagram, messages for topic "XYZ" published by SRC1 are received by RCV1, RCV2, and RCV3.

Let's consider RCV3. There are two possible paths to get from SRC1 to RCV3: transiting through TRD 98 and transiting through TRD 99. If this were a normal (not hotlinked) DRO deployment, UM would determine which path has the lowest cost and would route all messages through that path, not using the other path at all. With the hotlinks feature enabled, both DRO 1A and DRO 1B will create proxy receivers for topic XYZ and both will forward every message across the corresponding transit TRD to the destination. Once in the destination TRD 3, both copies of each message are received by the subscribing application RCV3, and UM will deliver the first one that arrives and discard the second.
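The receiver-side behavior (deliver the first copy, discard the second) can be sketched as duplicate filtering. This assumes each copy carries an identifier made of the originating source and a sequence number; UM's actual hotlink header format is not described here, and the class name is hypothetical. For brevity, the set of seen identifiers in this sketch grows without bound.

```python
class HotlinkDeduper:
    """Deliver the first copy of each message and discard later copies (sketch)."""

    def __init__(self):
        self._seen = set()         # (source, sequence number) already delivered
        self.delivered = []

    def on_copy(self, source, seq, payload, via):
        """Handle one copy arriving via transit TRD `via`; return True if delivered."""
        key = (source, seq)
        if key in self._seen:
            return False           # duplicate: filtered before the application sees it
        self._seen.add(key)
        self.delivered.append((seq, payload, via))
        return True


rcv3 = HotlinkDeduper()
rcv3.on_copy("SRC1", 1, b"msg 1", via="TRD 98")
rcv3.on_copy("SRC1", 1, b"msg 1", via="TRD 99")   # second copy, discarded
rcv3.on_copy("SRC1", 2, b"msg 2", via="TRD 99")   # TRD 98 copy never arrives: no gap
print([(seq, via) for seq, _, via in rcv3.delivered])
```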

To enable the hotlinks feature, you must:

- Define a hotlink index on the DRO portal used by the datacenter applications (not the redundant WAN links).

- Configure the source and receiver for "use_hotlink".

Hotlinks: Physical Interpretation <-

Normally there is no expectation of mapping between TRDs and the physical entities (networks, datacenters, hosts). The distribution of programs to TRDs is logical, not physical. However, the hotlinks feature deviates from that pattern with the expectation that TRDs map onto specific physical entities. Here is the previous logical TRD network shown in its physical embodiment:

The above diagram is a "dual hub with spokes" topology where the two WAN-based TRDs are the hubs and the data centers are the spokes. It is assumed that each data center's LAN uses LBT-RM (multicast) data transports, although this is not strictly necessary.

The publisher for topic "XYZ" (SRC1) is in datacenter 1. It sends a single message via multicast onto LAN 1. The subscriber "RCV1" will receive a copy of the message, as will the two DROs labeled "1A" and "1B". Those DROs will forward the message onto "WAN A" and "WAN B" respectively. Now you have two copies of the message. Let's follow the message into datacenter 2 via DROs "2A" and "2B". Each DRO receives its respective copy of each message and forwards it onto LAN 2. Note there are still two copies of the message on LAN 2. Finally, receiver "RCV2" gets both copies of the message, and UM's "hot failover" logic delivers the first one to the application and discards the second one.

Note that the WAN TRDs can also use multicast, or can be configured for unicast-only operation. In fact, even the LANs can be used in unicast mode, although that will force the publisher "SRC1" to send the message three times, to RCV1, DRO 1A, and DRO 1B.

The benefit of hotlinks is that if WAN A fails, the receivers for XYZ will not detect any disruption or latency outliers - WAN B will continue carrying the messages. There is no "fail over" sequence. A downside of this design is that the receivers will experience twice the packet load. However, also note that the second copy of each message is discarded inside UM, so no application overhead is consumed.

The hotlinks feature is intended to be used in deployments similar to the above diagram, with DROs interconnecting multiple datacenters. It is not designed to handle redundancy within a datacenter.

Mixing Regular and Hotlinked DROs <-

It is possible to extend the basic hub-and-spoke topology with spurs. For example, if there are two small offices near TRD 2 where the size does not justify full redundant global connectivity, TRD 2 could service those offices as TRDs 200 and 201 using non-hotlinked DROs.

There are two data flows of interest here:

- SRC200 is configured for "use_hotlink", even though TRD 200 is not hotlinked (i.e., does not have hotlink indices set). This causes SRC200 to include the headers needed for hotlinked operation downstream. The messages are not redundant as they flow into TRD 2, but they exit TRD 2 via DRO 2A and DRO 2B, which are configured for hotlinks. So receivers in TRD 1 and TRD 3 will have redundancy if one of the global WANs has a failure.

- SRC1 is configured for "use_hotlink", and RCV2 and RCV3 will get the full benefit of it. However, because of the way UM works, RCV200 and RCV201 will not benefit from the hotlinks. This is because DRO 200 and DRO 201 will not add the right information for RCV200 and RCV201 to join both data streams, so they will only join one. Maybe RCV200 joins the data stream sent via TRD 98 and maybe RCV201 joins the data stream sent via TRD 99 (it's not possible to predict which each will join). If the global network hosting TRD 98 fails, RCV200 will experience an outage until the DROs time out the flow, at which point RCV200 will join the other data stream. Note that RCV201 will not experience any interruption.

Note that you cannot add a redundant link between TRD2 and TRD200 to get hotlinks there also. See DRO Hotlink Restrictions item 5, "No hotlink chains".

Implementing DRO Hotlinks <-

Applications do not need special source code to make use of hotlinks. Contrast this with the Hot Failover (HF) feature, which requires the use of special hot failover APIs. Hotlinks uses standard source APIs (but see DRO Hotlink Restrictions) and is enabled through configuration.

DRO Hotlink Restrictions <-

-

No Hot Failover - Hotlinks is not compatible with regular Hot Failover (HF). They are intended for different use cases and may not be used in the same UM network.

-

No Smart Sources - Hotlinks is not supported by Smart Sources.

-

No XSP - Hotlinks is not supported by Transport Services Provider (XSP). We plan to add this support in a future release.

-

Locate Stores in same TRD as source - Hotlinks supports UM's Persistence feature. However, whereas a non-hotlinked DRO network allows Stores to be placed anywhere in the network, the hotlink feature adds the restriction that the Stores must be in the same TRD as the source. Note that Informatica considers this restriction to generally be the best practice except in certain limited use cases.

-

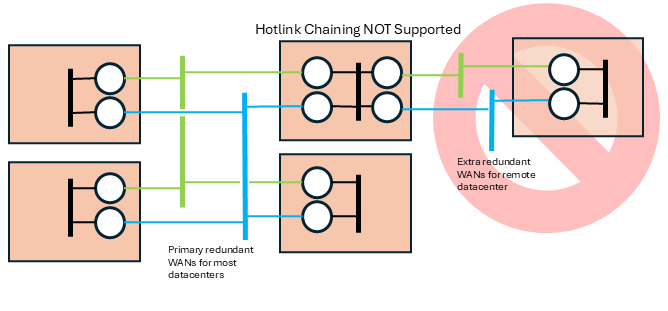

No hotlink chains - Informatica only supports a single central redundant pair of networks using the hotlinks feature. UM does not support multiple hotlinks hops (Contact UM Support for potential workarounds if this is necessary). For example, the this topology is not supported:

Routing Topologies <-

You can configure multiple DROs in a variety of topologies. Following are several examples.

Direct Link <-

The Direct Link configuration uses a single DRO to directly connect two TRDs. For a configuration example, see Direct Link Configuration.

Single Link <-

A Single Link configuration connects two TRDs using a DRO on each end of an intermediate link. The intermediate link can be a "peer" link, or a transit TRD. For configuration examples, see Peer Link Configuration and Transit TRD Link Configuration.

Parallel Links <-

Parallel Links offer multiple complete paths between two TRDs. However, UM will not load-balance messages across both links. Rather, parallel links are used for failover purposes. You can set preference between the links by setting the primary path for the lowest cost and standby paths at higher costs. For a configuration example, see Parallel Links Configuration.

Loops <-

Loops let you route packets back to the originating DRO without reusing any paths. Also, if any peer-peer links are interrupted, the looped DROs are able to find an alternate route between any two TRDs.

Loop and Spur <-

The Loop and Spur has one or more DROs tangential to the loop and accessible only through a single DRO participating in the loop. For a configuration example, see Loop and Spur Configuration.

Loop with Centralized TRD <-

Adding a TRD to the center of a loop enhances its rerouting capabilities.

Star with Centralized TRD <-

A Star with a centralized TRD does not offer rerouting capabilities but does provide an economical way to join multiple disparate TRDs.

Star with Centralized DRO <-

The Star with a centralized DRO is the simplest way to bridge multiple TRDs. For a configuration example, see Star Configuration.

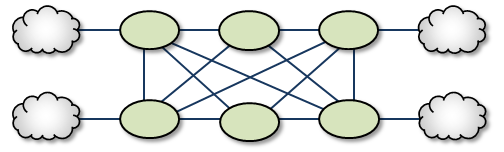

Mesh <-

The Mesh topology provides peer portal interconnects between many DROs, approaching an all-connected-to-all configuration. This provides multiple possible paths between any two TRDs in the mesh. Note that this diagram is illustrative of the ways the DROs may be interconnected, and not necessarily a practical or recommended application. For a configuration example, see Mesh Configuration.

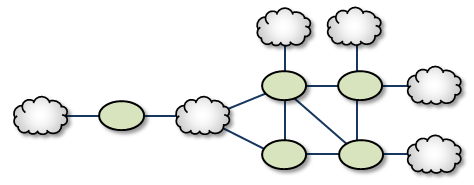

Palm Tree <-

The Palm Tree has a set of series-connected TRDs fanning out to a more richly meshed set of TRDs. This topology tends to pass more concentrated traffic over common links for part of its transit while supporting a loop, star, or mesh near its terminus.

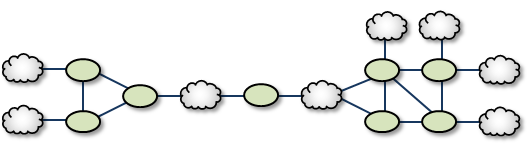

Dumbbell <-

Similar to the Palm Tree, the Dumbbell has a funneled route with a loop, star, or mesh topology on each end.

Unsupported Configurations <-

When designing DRO networks, do not use any of the following topology constructs.

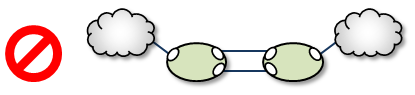

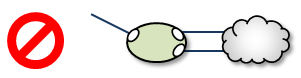

Two peer-to-peer connections between the same two DROs:

Two endpoint connections from the same DRO to the same TRD:

Assigning two different Domain ID values (from different DROs) to the same TRD:

UM Feature Compatibility <-

You must install the UM Dynamic Routing Option with its companion Ultra Messaging UMS, UMP, or UMQ product, and versions must match. While most UM features are compatible with the DRO, some are not. Following is a table of features and their compatibilities with the DRO.

| UM Feature | DRO Compatible? | Notes |
|---|---|---|
| Connect and Disconnect Source Events | Yes, but see Source Connect and Disconnect Events | |
| Hot Failover (HF) | Yes | The DRO can pass messages sent by HF publishers to HF receivers; however, the DRO itself cannot be configured to originate or terminate HF data streams. |
| Hot Failover Across Multiple Contexts (HFX) | Yes | |
| Late Join | Yes | |
| Message Batching | Yes | |
| Monitoring/Statistics | Yes | |
| Multicast Immediate Messaging (MIM) | Yes | |
| Off-Transport Recovery (OTR) | Yes | |
| Ordered Delivery | Yes | |
| Pre-Defined Messages (PDM) | Yes | |
| Request/Response | Yes | |
| Self Describing Messaging (SDM) | Yes | |
| Smart Sources | Partial | The DRO does not support proxy sources sending data via Smart Sources. The DRO does accept ingress traffic to proxy receivers sent by Smart Sources. |
| Source Side Filtering | Yes | The DRO supports transport source side filtering. You can activate this either at the originating TRD source, or at a downstream proxy source. |
| Source String | Yes, but see Source Strings in a Routed Network | |
| Transport Acceleration | Yes | |
| Transport LBT-IPC | Yes | |
| Transport LBT-RM | Yes | |
| Transport LBT-RU | Yes | |
| Transport LBT-SMX | Partial | The DRO does not support proxy sources sending data via LBT-SMX. Any proxy sources configured for LBT-SMX will be converted to TCP, with a log message warning of the transport change. The DRO does accept LBT-SMX ingress traffic to proxy receivers. |
| Transport TCP | Yes | |
| Transport Services Provider (XSP) | No | |
| JMS, via UMQ broker | No | |
| Spectrum | Yes | The DRO supports UM Spectrum traffic, but you cannot implement Spectrum channels in DRO proxy sources or receivers. |
| UMP Implicit and Explicit Acknowledgments | Yes | |
| UMP Persistent Store | Yes | |
| UMP Persistence Proxy Sources | Yes | |
| UMP Quorum/Consensus Store Failover | Yes | |
| UMP Managing RegIDs with Session IDs | Yes | |
| UMP RPP: Receiver-Paced Persistence (RPP) | Yes | |
| UMQ Brokered Queuing | No | |
| UMQ Ultra Load Balancing (ULB) | No | |
| Ultra Messaging Desktop Services (UMDS) | Not for client connectivity to the UMDS server | |
| Ultra Messaging Manager (UMM) | Yes | Not for DRO management |
| UM SNMP Agent | No | |
| UMCache | No | |
| UM Wildcard Receivers | Yes | |
| Zero Object Delivery (ZOD) | Yes | |